Generate product embeddings

Now that we have our product catalog, the next step is to generate embeddings for each item.

Before we create the embeddings for each bag, let's take a closer look at the Amazon Titan Multimodal Embeddings model. Because it is a multimodal embeddings model, it can convert both text and images to embeddings.

This means that you can use either a text like "red bag" or an actual image of a red bag as input. To support both scenarios, let's create a function that lets you select which type of embedding you'd like to generate.

Embedding Generation Function

In a new cell of your Jupyter Notebook, add the following function:

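    import json

    # Assumes bedrock_runtime (a boto3 "bedrock-runtime" client) was created in an earlier cell.

    # Defined here in case an earlier cell has not already done so.
    class EmbedError(Exception):
        """Raised when the model returns an error message instead of an embedding."""

    def generate_embeddings(image_base64=None, text_description=None):
        # Build the request payload from whichever inputs were provided
        input_data = {}
        if image_base64 is not None:
            input_data["inputImage"] = image_base64
        if text_description is not None:
            input_data["inputText"] = text_description
        if not input_data:
            raise ValueError("At least one of image_base64 or text_description must be provided")

        # Call Amazon Titan Multimodal Embeddings through Amazon Bedrock
        body = json.dumps(input_data)
        response = bedrock_runtime.invoke_model(
            body=body,
            modelId="amazon.titan-embed-image-v1",
            accept="application/json",
            contentType="application/json"
        )
        response_body = json.loads(response.get("body").read())

        # A "message" field in the response signals that generation failed
        finish_reason = response_body.get("message")
        if finish_reason is not None:
            raise EmbedError(f"Embeddings generation error: {finish_reason}")

        return response_body.get("embedding")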

This function takes an image (in base64 encoding), a text description, or both, and generates an embedding using Amazon Titan Multimodal Embeddings. Let's go through what the code does.

First, we collect whichever inputs were provided and raise an error if there are none:

    if image_base64 is not None:
        input_data["inputImage"] = image_base64
    if text_description is not None:
        input_data["inputText"] = text_description
    if not input_data:
        raise ValueError("At least one of image_base64 or text_description must be provided")

The following snippet serializes the input data to JSON and then calls Amazon Titan Multimodal Embeddings to generate an embedding vector:

    body = json.dumps(input_data)
    response = bedrock_runtime.invoke_model(
        body=body,
        modelId="amazon.titan-embed-image-v1",
        accept="application/json",
        contentType="application/json"
    )
    response_body = json.loads(response.get("body").read())

If the generation fails, the response body contains a message field, and we raise an error:

    finish_reason = response_body.get("message")
    if finish_reason is not None:
        raise EmbedError(f"Embeddings generation error: {finish_reason}")

And finally, we'll return the generated embedding:

    return response_body.get("embedding")
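To try this request and response flow without AWS credentials, here is a minimal sketch that runs the same logic against a stand-in client. `FakeBedrockRuntime` and `generate_embeddings_with` are hypothetical names introduced only for this illustration, and the canned response shape (an `embedding` list plus an optional `message`) is an assumption based on the fields the function above reads.

```python
import io
import json


class EmbedError(Exception):
    """Raised when the model response carries an error message."""


class FakeBedrockRuntime:
    """Hypothetical stand-in for the boto3 bedrock-runtime client (not an AWS API)."""

    def __init__(self, payload):
        self.payload = payload
        self.last_body = None

    def invoke_model(self, body, modelId, accept, contentType):
        # Remember what was sent so it can be inspected afterwards
        self.last_body = json.loads(body)
        # Mimic the response shape used above: "body" is a readable byte stream
        return {"body": io.BytesIO(json.dumps(self.payload).encode("utf-8"))}


def generate_embeddings_with(client, image_base64=None, text_description=None):
    # Same logic as generate_embeddings, with the client passed in explicitly
    input_data = {}
    if image_base64 is not None:
        input_data["inputImage"] = image_base64
    if text_description is not None:
        input_data["inputText"] = text_description
    if not input_data:
        raise ValueError("At least one of image_base64 or text_description must be provided")

    response = client.invoke_model(
        body=json.dumps(input_data),
        modelId="amazon.titan-embed-image-v1",
        accept="application/json",
        contentType="application/json",
    )
    response_body = json.loads(response["body"].read())
    message = response_body.get("message")
    if message is not None:
        raise EmbedError(f"Embeddings generation error: {message}")
    return response_body.get("embedding")


ok_client = FakeBedrockRuntime({"embedding": [0.1, 0.2, 0.3], "message": None})
vector = generate_embeddings_with(ok_client, text_description="red bag")
print(vector)               # the canned vector returned by the fake client
print(ok_client.last_body)  # the JSON payload that would have been sent
```

Passing the client as a parameter is only a convenience for this sketch; the notebook version relies on the `bedrock_runtime` variable from an earlier cell.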

Base64 Encoding Function

Before generating embeddings for images, we need to encode the images in base64.

Add this function to a new cell in your Jupyter Notebook:

    import base64

    def base64_encode_image(image_path):
        # Read the raw image bytes and return them as a base64-encoded string
        with open(image_path, "rb") as image_file:
            return base64.b64encode(image_file.read()).decode('utf8')

This is necessary because Amazon Titan Multimodal Embeddings G1 requires a base64-encoded string for the inputImage field:

inputImage – Encode the image that you want to convert to embeddings in base64 and enter the string in this field.

Learn more here: Amazon Titan Multimodal Embeddings G1
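As a quick check that the encoding step behaves as expected, the sketch below writes a few bytes to a temporary file, encodes them with the same function, and decodes the result again. The file name `sample.png` and its contents are placeholders made up for this example, not a real product image.

```python
import base64
import os
import tempfile


def base64_encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf8")


# Stand-in bytes, not a real image; the encoder treats any file as raw bytes
sample_bytes = b"\x89PNG\r\n\x1a\n" + bytes(range(16))

with tempfile.TemporaryDirectory() as tmp_dir:
    sample_path = os.path.join(tmp_dir, "sample.png")
    with open(sample_path, "wb") as f:
        f.write(sample_bytes)
    encoded = base64_encode_image(sample_path)

# The result is a plain str, which is what json.dumps needs for the request body
print(type(encoded).__name__)
# Decoding gives back exactly the bytes that were written
print(base64.b64decode(encoded) == sample_bytes)
```

The `.decode("utf8")` call matters here: `base64.b64encode` returns `bytes`, and `json.dumps` cannot serialize `bytes` directly.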

Generating Vector Embeddings

We're ready to generate vector embeddings. We'll need to call the generate_embeddings function for each product in our product catalog, so let's iterate over each product in a new cell in your Jupyter Notebook:

    embeddings = []
    for index, row in df.iterrows():
        ...  # the loop body is filled in over the next steps

For each row in the DataFrame, we'll first need to base64 encode the image:

    embeddings = []
    for index, row in df.iterrows():
        full_image_path = os.path.join(image_path, row['image'])
        image_base64 = base64_encode_image(full_image_path)

Go ahead and call the function we just created to generate a vector embedding and append the embedding to the list:

    embeddings = []
    for index, row in df.iterrows():
        full_image_path = os.path.join(image_path, row['image'])
        image_base64 = base64_encode_image(full_image_path)
        embedding = generate_embeddings(image_base64=image_base64)
        embeddings.append(embedding)

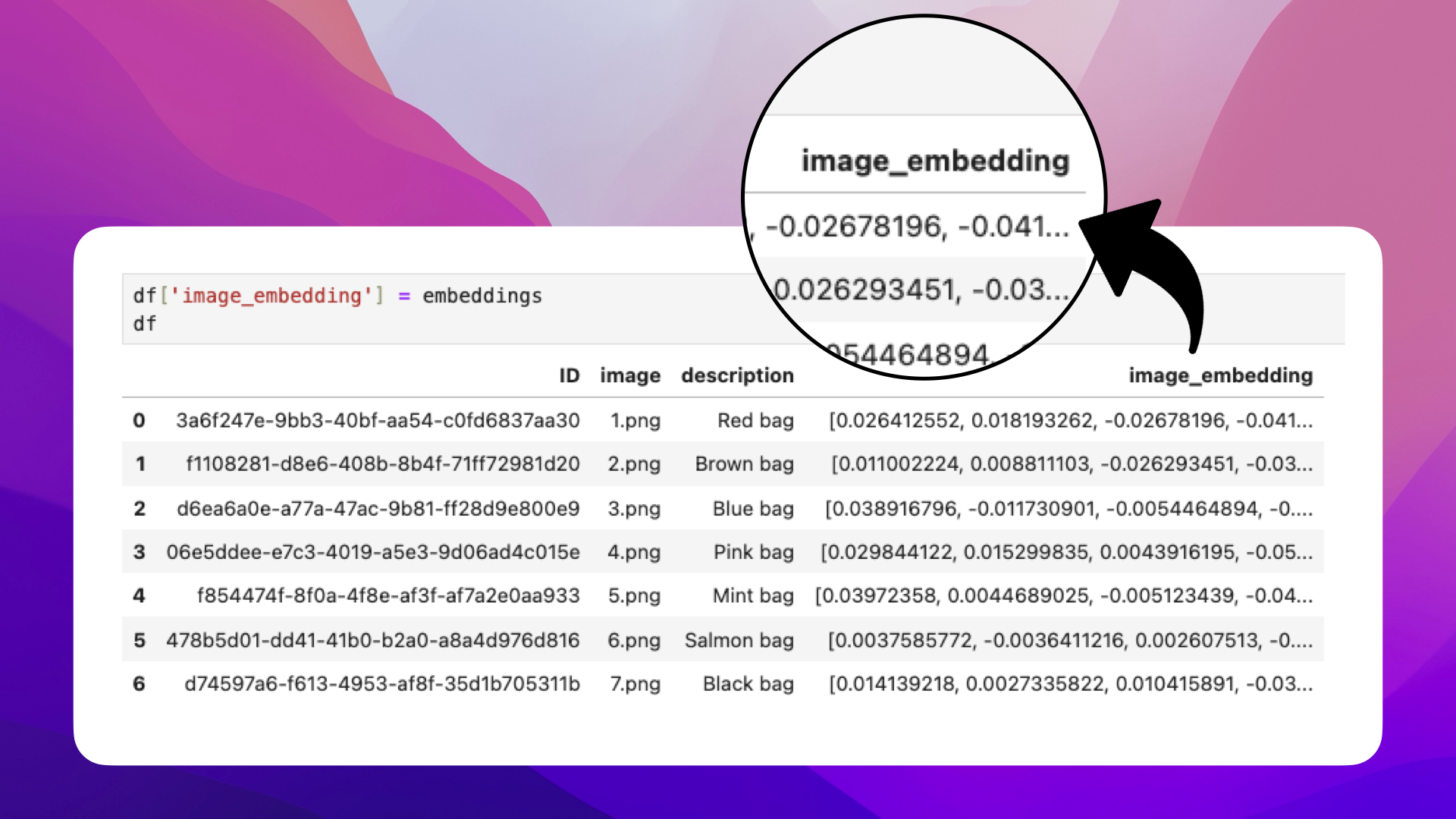
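With a larger catalog, a single missing file or failed request would stop the whole loop. One possible variation, sketched here over a plain list of file names rather than the DataFrame, records `None` for items that fail so the result stays aligned with the catalog rows. `embed_catalog` and `fake_embed` are hypothetical helpers for this illustration; in the notebook you would pass a function that calls `generate_embeddings(image_base64=...)` instead.

```python
import base64
import os
import tempfile


def embed_catalog(image_names, image_dir, embed_fn):
    """Return one embedding per image name, or None where embedding failed."""
    results = []
    for name in image_names:
        full_path = os.path.join(image_dir, name)
        try:
            with open(full_path, "rb") as image_file:
                image_base64 = base64.b64encode(image_file.read()).decode("utf8")
            results.append(embed_fn(image_base64))
        except Exception as error:  # broad on purpose: keep the batch going
            print(f"Skipping {name}: {error}")
            results.append(None)
    return results


def fake_embed(image_base64):
    # Hypothetical stand-in for generate_embeddings(image_base64=...)
    return [float(len(image_base64))]


with tempfile.TemporaryDirectory() as tmp_dir:
    with open(os.path.join(tmp_dir, "bag1.jpg"), "wb") as f:
        f.write(b"abc")
    # "missing.jpg" does not exist, so its slot is filled with None
    demo = embed_catalog(["bag1.jpg", "missing.jpg"], tmp_dir, fake_embed)

print(demo)
```

Because the returned list has one entry per input name, it can still be assigned to a DataFrame column of the same length, and the `None` entries show which products need a retry.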

Once we have the embeddings for each product in our product catalog, let's add them to the DataFrame:

df['image_embedding'] = embeddings
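Before moving on, it can be worth checking that every product actually received a usable vector. The following is a small sketch of such a check; `check_embeddings` is a hypothetical helper, and the default of 1024 dimensions is an assumption about the model's default output length, so adjust it if your vectors have a different size.

```python
import math


def check_embeddings(embeddings, expected_dim=1024):
    """Return (index, problem) pairs; an empty list means every row looks fine."""
    problems = []
    for i, vec in enumerate(embeddings):
        if vec is None:
            problems.append((i, "missing"))
        elif len(vec) != expected_dim:
            problems.append((i, f"length {len(vec)}"))
        elif not all(math.isfinite(x) for x in vec):
            problems.append((i, "non-finite value"))
    return problems


# Made-up sample data: one good vector, one missing, one with the wrong length
sample = [[0.0] * 1024, None, [0.1, 0.2]]
print(check_embeddings(sample))
```

In the notebook, the same check could be run on the new column with `check_embeddings(df['image_embedding'].tolist())`.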

Your DataFrame should now look something like this:

Let's generate the embedding for the customer inquiry in the next section.