Vectorize product data

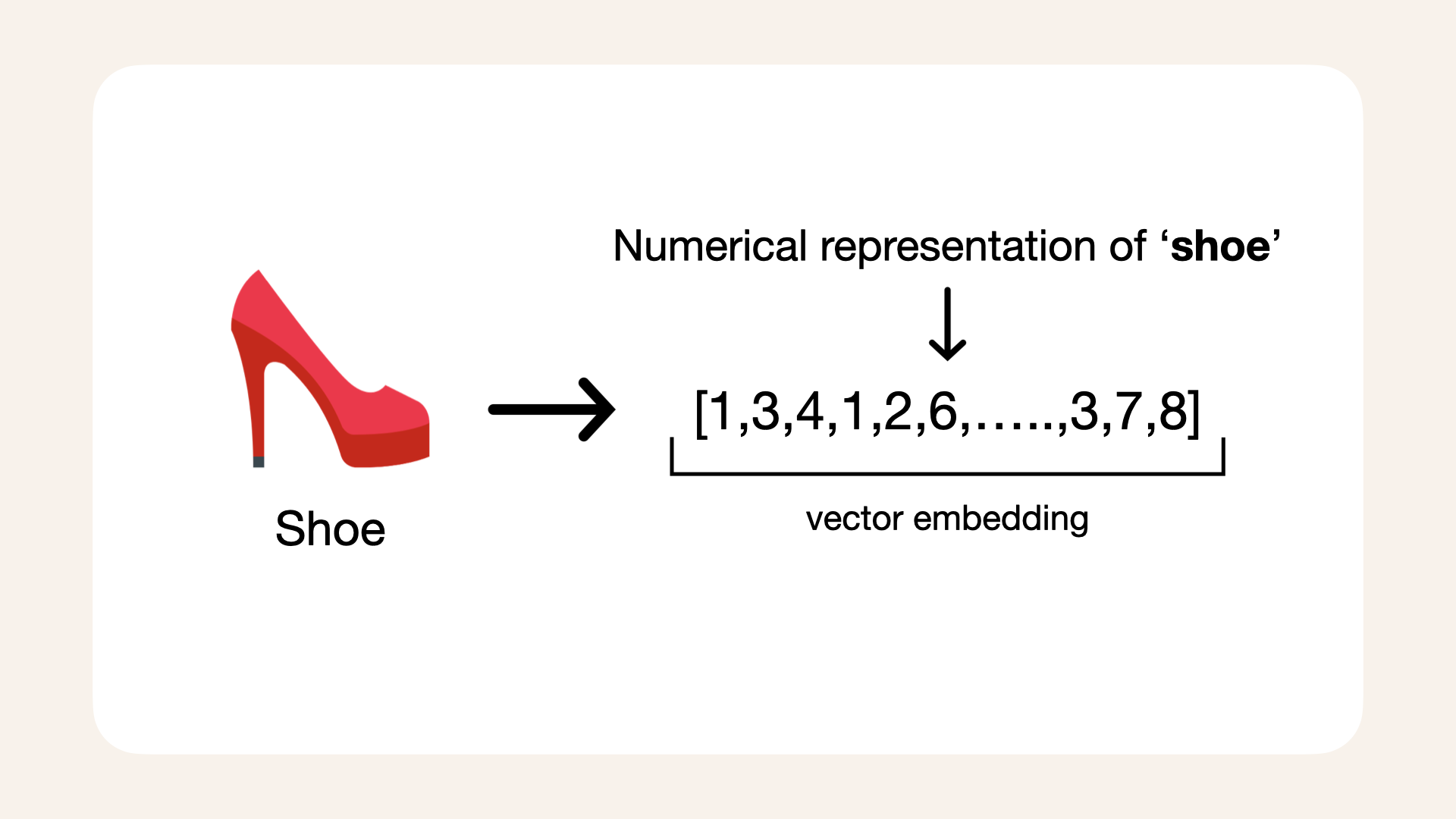

The next step is to vectorize the shoe product data. We need to generate a vector, a numerical representation, for each shoe in our shoe database.

Cost of vectorization

Vectorizing datasets with AWS Bedrock and the Titan multimodal model involves costs based on the number of input tokens and images:

- Text embeddings: $0.0008 per 1,000 input tokens
- Image embeddings: $0.00006 per image

The provided SoleMates shoe dataset is fairly small, containing 1,306 pairs of shoes, which makes it affordable to vectorize.

For this dataset, I calculated the cost of vectorization and summarized the token counts below:

- Token count: 12,746 tokens
- Images: 1,306
- Total cost: $0.0885568

Calculation:

(12746 tokens / 1000) * $0.0008 + (1306 images * $0.00006) = $0.0885568
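The same arithmetic can be expressed as a small helper, which is handy if you want to estimate the cost for a different dataset. This is a minimal sketch: the function and constant names are my own for illustration, and the prices are the ones quoted in this lesson, so check the current Amazon Bedrock pricing before relying on them.

```python
# Prices quoted in this lesson (USD). These are assumptions that may be out of date.
TEXT_PRICE_PER_1K_TOKENS = 0.0008
IMAGE_PRICE_PER_IMAGE = 0.00006


def estimate_embedding_cost(token_count: int, image_count: int) -> float:
    """Estimate the vectorization cost in USD (hypothetical helper)."""
    text_cost = (token_count / 1000) * TEXT_PRICE_PER_1K_TOKENS
    image_cost = image_count * IMAGE_PRICE_PER_IMAGE
    return text_cost + image_cost


# Figures for the SoleMates dataset from this lesson.
total = estimate_embedding_cost(token_count=12746, image_count=1306)
print(f"Estimated cost: ${total:.7f}")  # prints: Estimated cost: $0.0885568
```

Note that the image term (about $0.078) dominates the text term (about $0.010), so for this dataset the cost scales mostly with the number of images.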

Pre-embedded dataset

This step is entirely optional and designed to accommodate various levels of access and resources.

If you prefer not to generate embeddings or don't have access to AWS, you can use a pre-embedded dataset included in the repository.

This file contains all the embeddings and token counts, so you can follow this guide without incurring additional costs.

For hands-on experience, I recommend running the embedding process yourself to understand the workflow better.

The pre-embedded dataset is located at:

data/solemates_shoe_directory_with_embeddings_token_count.csv

To load the dataset in your Jupyter Notebook, use the following code:

import ast

import pandas as pd

# Load the pre-embedded dataset
df_shoes_with_embeddings = pd.read_csv('data/solemates_shoe_directory_with_embeddings_token_count.csv')

# Convert string representations to actual lists
df_shoes_with_embeddings['titan_embedding'] = df_shoes_with_embeddings['titan_embedding'].apply(ast.literal_eval)
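The `ast.literal_eval` conversion is needed because a CSV file stores every cell as text, so each embedding comes back as a string that merely looks like a list. The sketch below illustrates this with the standard library only. The two-row sample and the `product_id` column are made up for illustration; the real file has 1,306 rows and much longer vectors.

```python
import ast
import csv
import io

# Hypothetical two-row sample standing in for the real CSV file.
sample_csv = io.StringIO(
    'product_id,titan_embedding\n'
    '1,"[0.12, -0.03, 0.45]"\n'
    '2,"[0.08, 0.27, -0.19]"\n'
)

rows = list(csv.DictReader(sample_csv))

# Straight from the CSV, the embedding is a string, not a list of floats.
raw_value = rows[0]["titan_embedding"]
print(type(raw_value).__name__, raw_value)

# literal_eval safely parses the string into a real Python list.
parsed_value = ast.literal_eval(raw_value)
print(type(parsed_value).__name__, parsed_value)
```

`ast.literal_eval` only accepts Python literals (numbers, strings, lists, and so on), which makes it a safer choice than `eval` for data read from a file.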

By using this pre-embedded dataset, you can skip the embedding step and continue directly with the rest of the walkthrough.


Let's get started with Amazon Bedrock in the next lesson.