Naive RAG is dead - long live agents

Naive RAG (retrieval augmented generation) is becoming obsolete - AI agents are taking over.

We'll talk about the 4 main limitations of naive RAG and why agents are taking over.

Introduction

We'll dive into the limitations of naive RAG and show how agents address each of them. To make this guide hands-on, we'll build on a made-up e-commerce store.

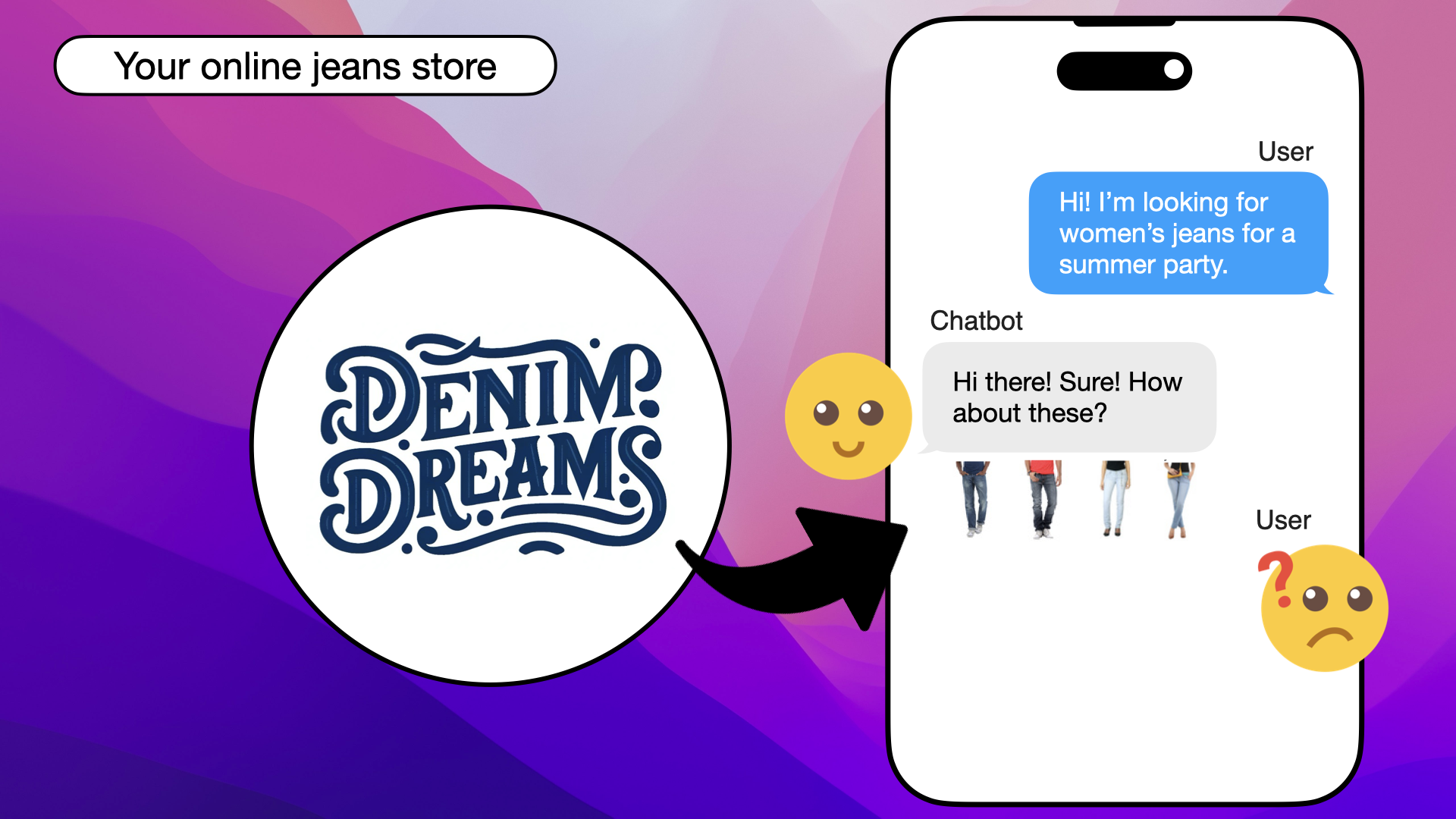

World, meet Denim Dreams:

Meet your online jeans store: Denim Dreams

Denim Dreams is your made-up online jeans store that implemented naive RAG and is now facing issues when users start to chat with their chatbot:

Your online store, Denim Dreams, facing naive RAG challenges

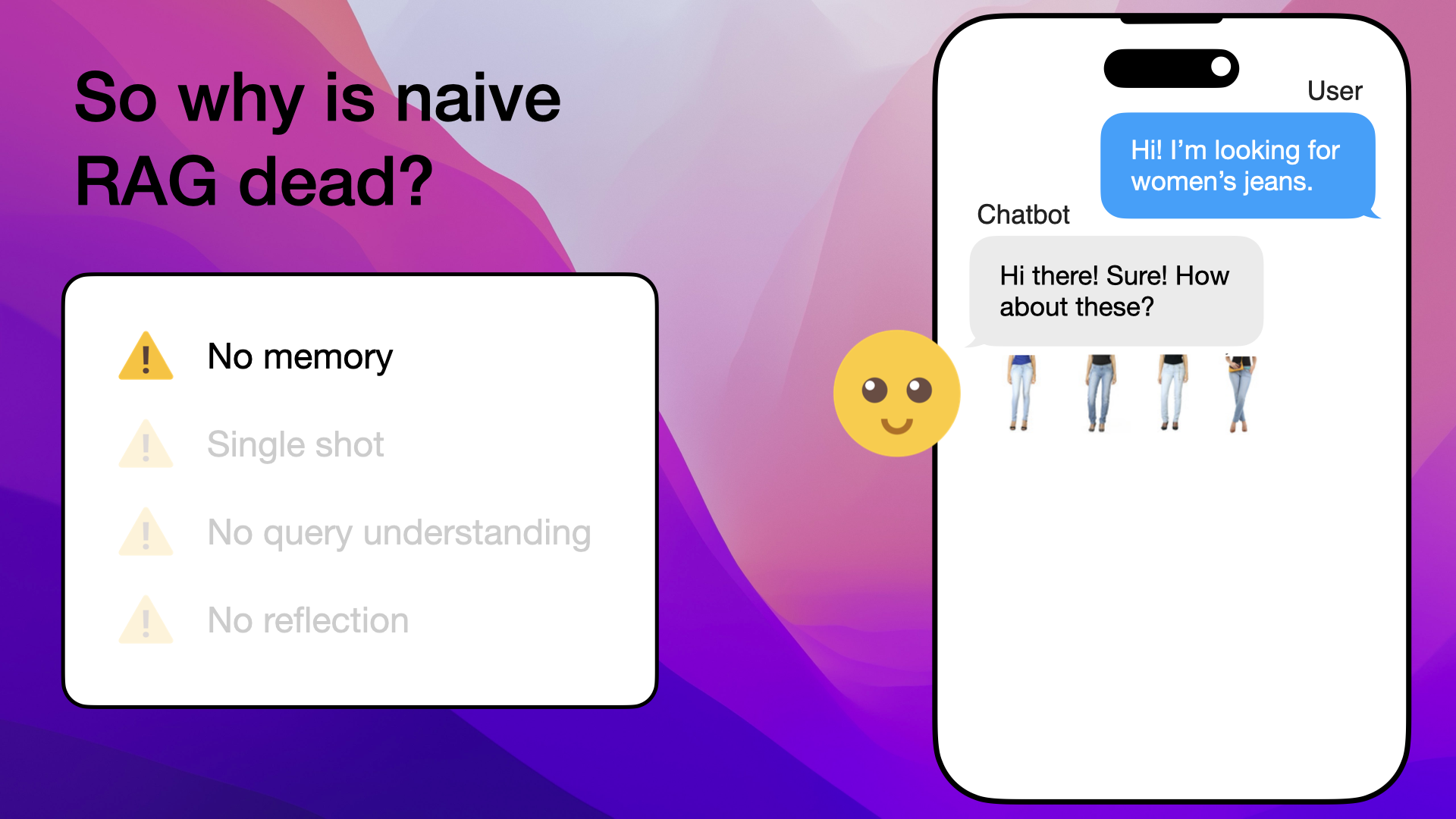

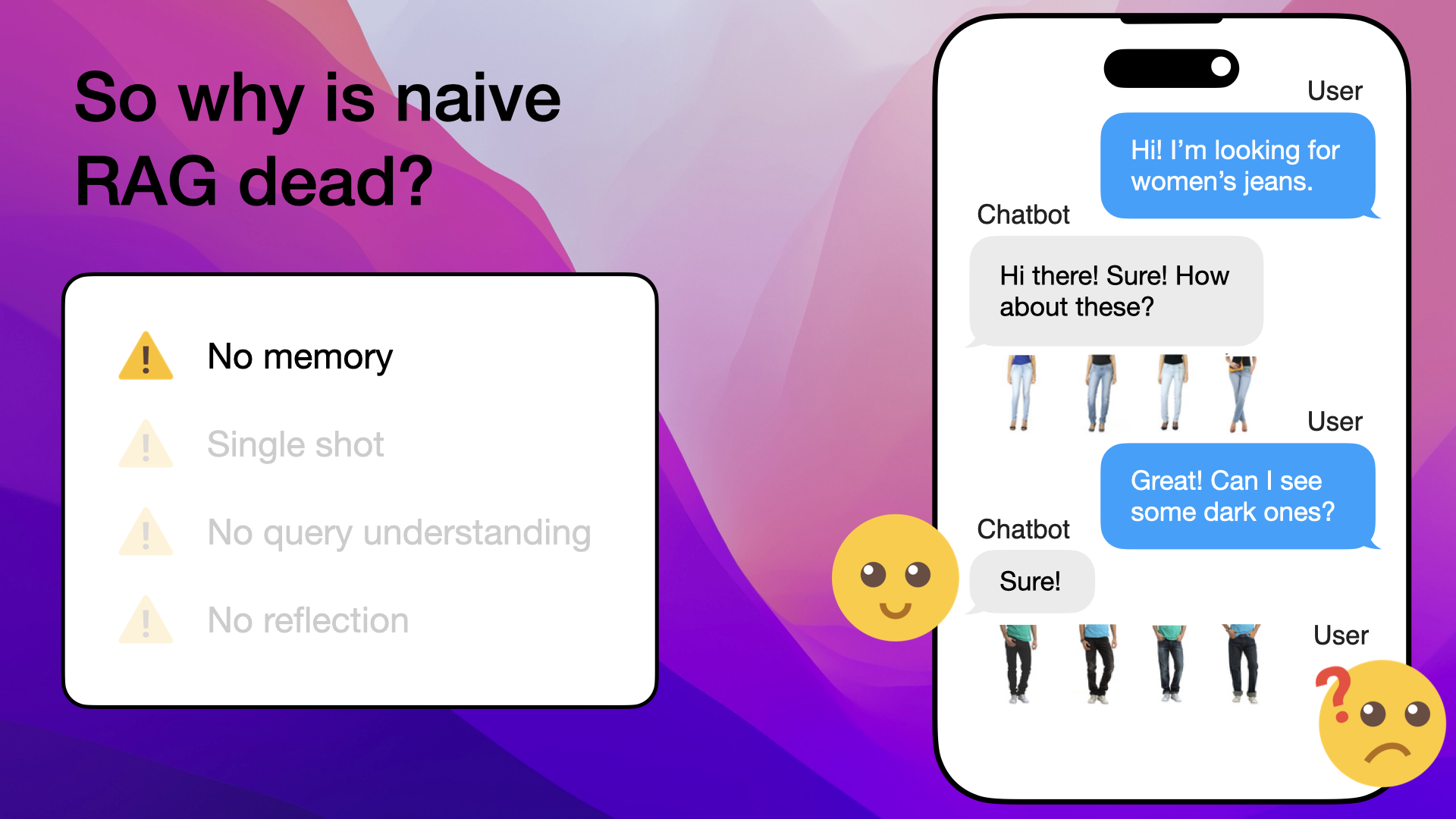

The Main Limitations of Naive RAG

There are four main limitations to why naive RAG is becoming obsolete:

- No memory

- Single shot

- No query understanding

- No reflection


Naive RAG Issues

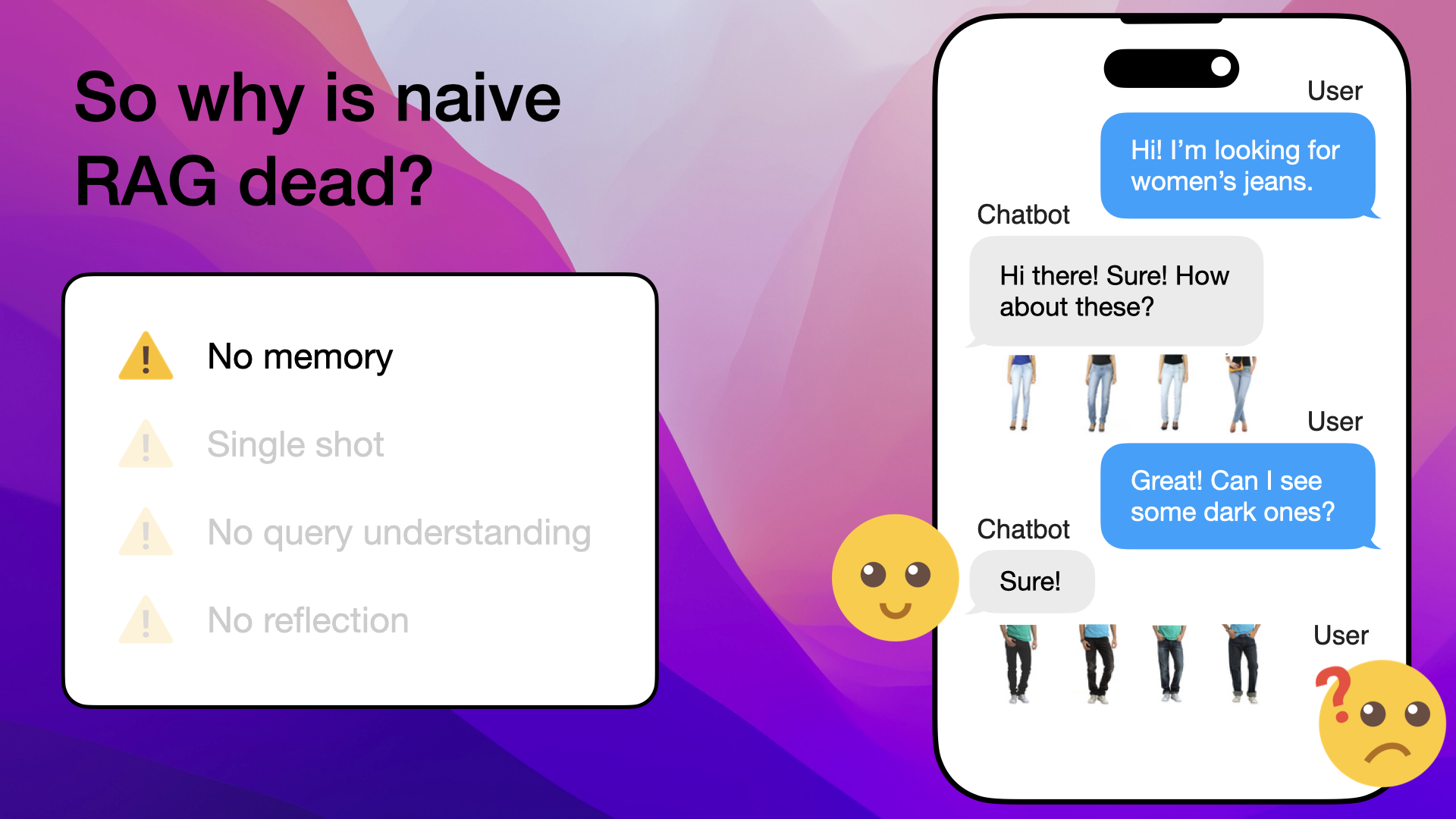

1. No Memory

Imagine a customer coming to your jeans store and asking your chatbot "Hi! I'm looking for women's jeans". This is an easy one for your RAG chatbot. It'll confidently pull 5 neighbors and give the customer an answer:

Customer asks for "women's jeans" and gets some fitting neighbors

Problem arises

If the customer were happy and left the chat now, all would be well and your chatbot would have done its job.

But it doesn't end here. The customer has a follow-up and says "Great! Can I see some dark ones?".

Here's where the problem arises. Your naive RAG chatbot has no memory, so instead of pulling neighbors for dark women's jeans, it'll pull neighbors for dark jeans and end up with men's jeans:

Naive RAG has no memory and pulls irrelevant neighbors

Why men's jeans? Perhaps most of the dark jeans in your store are men's, so those are the neighbors your naive RAG chatbot pulls from the vector database when it searches for dark jeans.

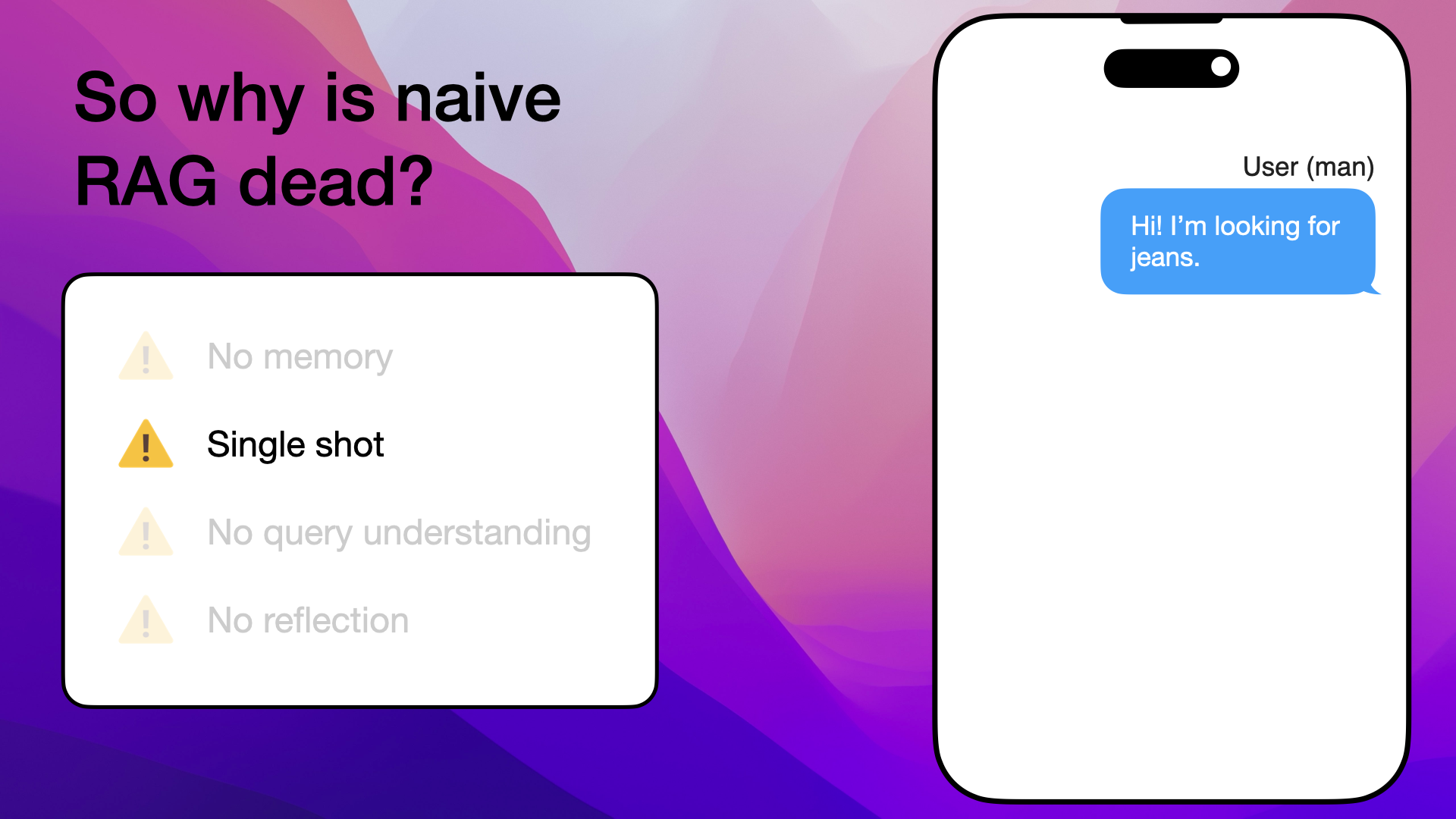

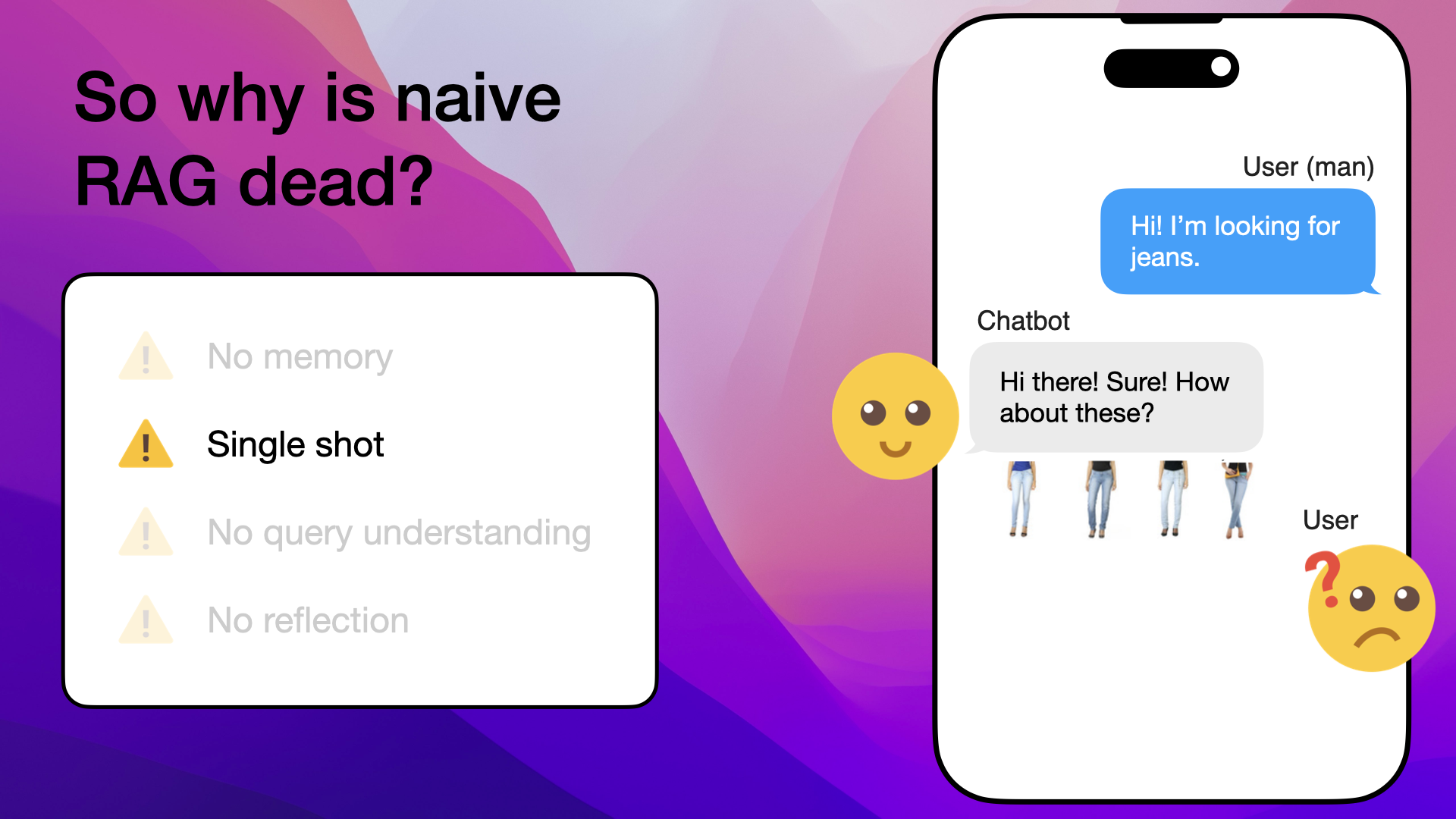

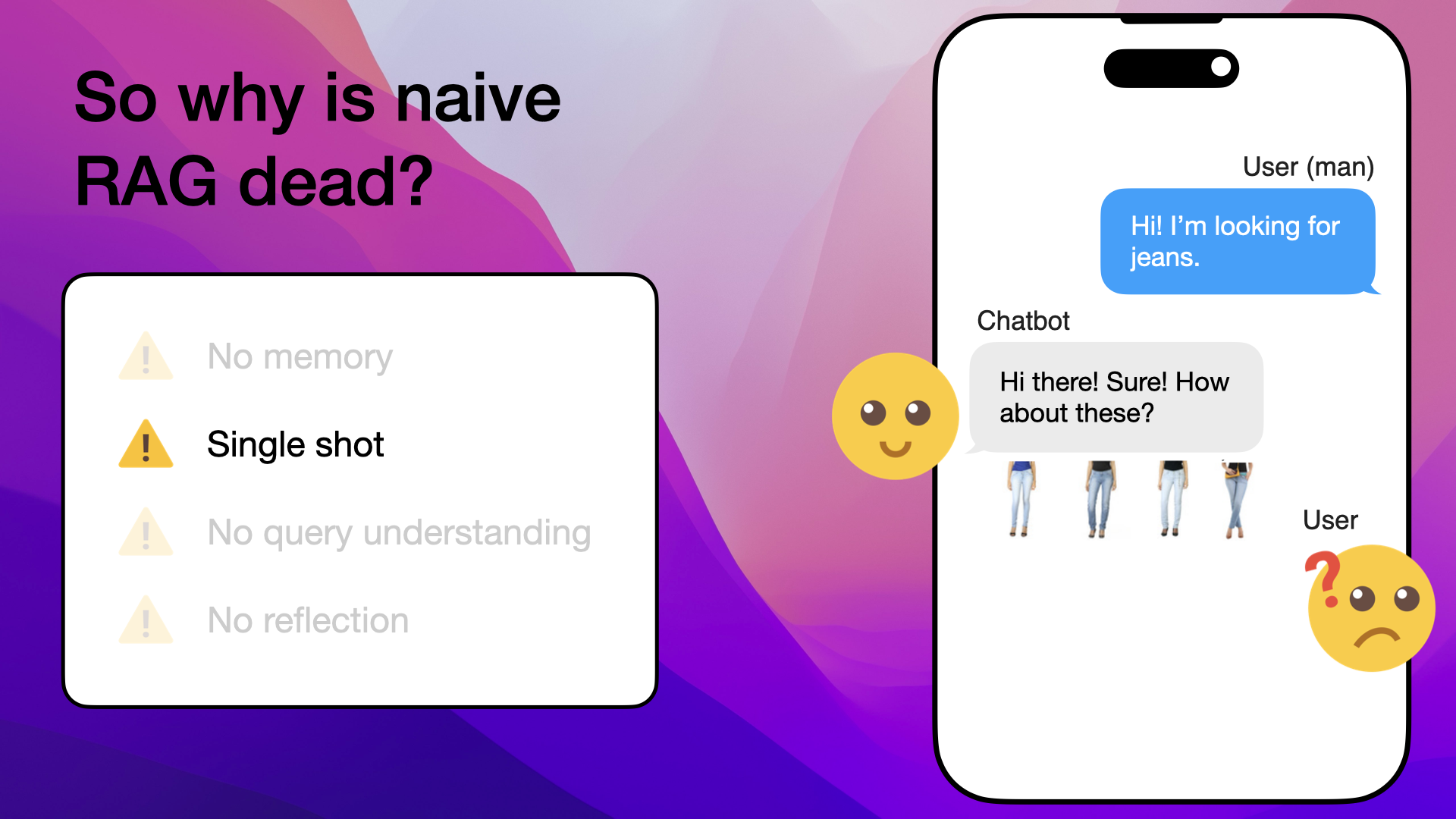

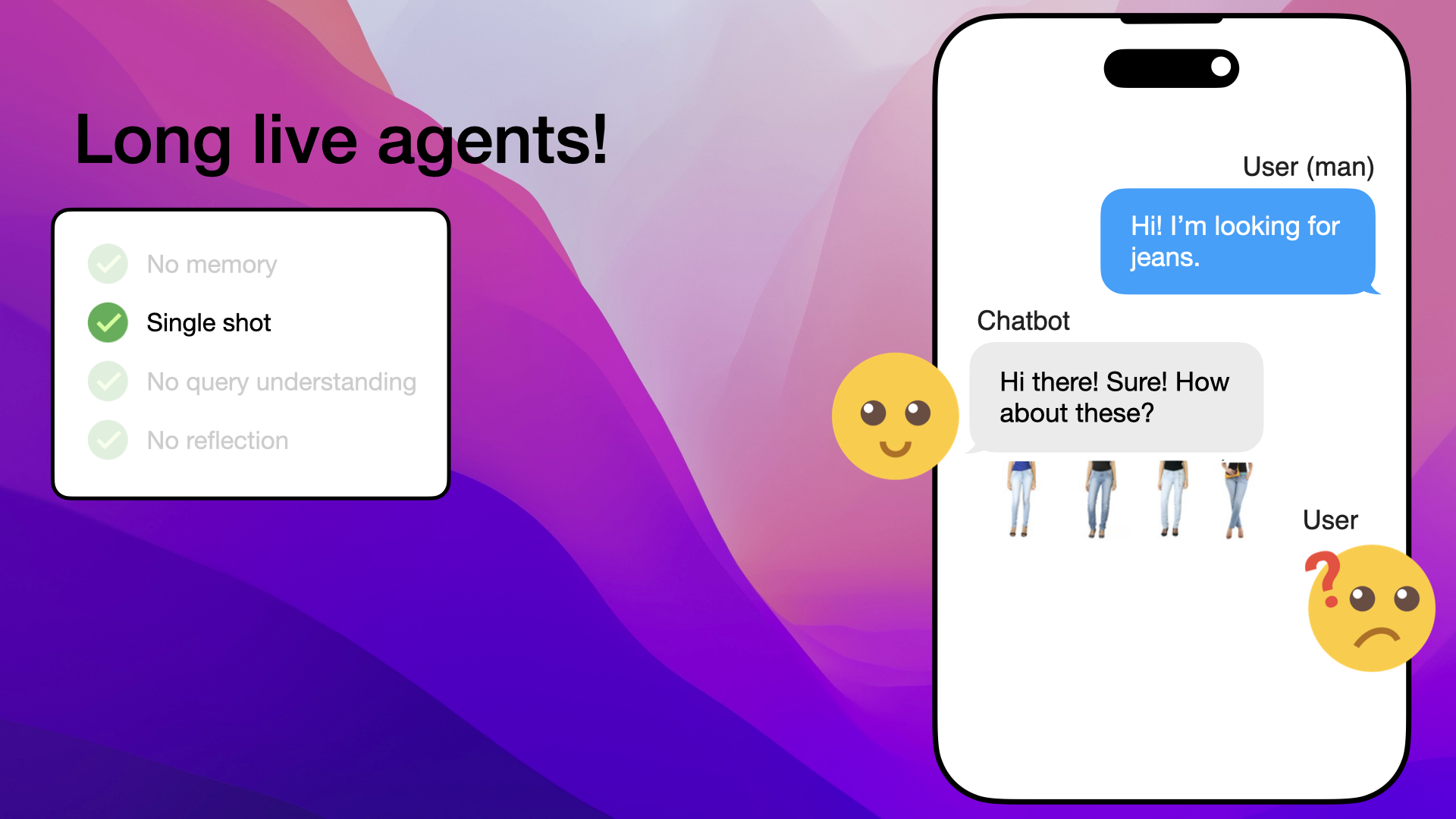

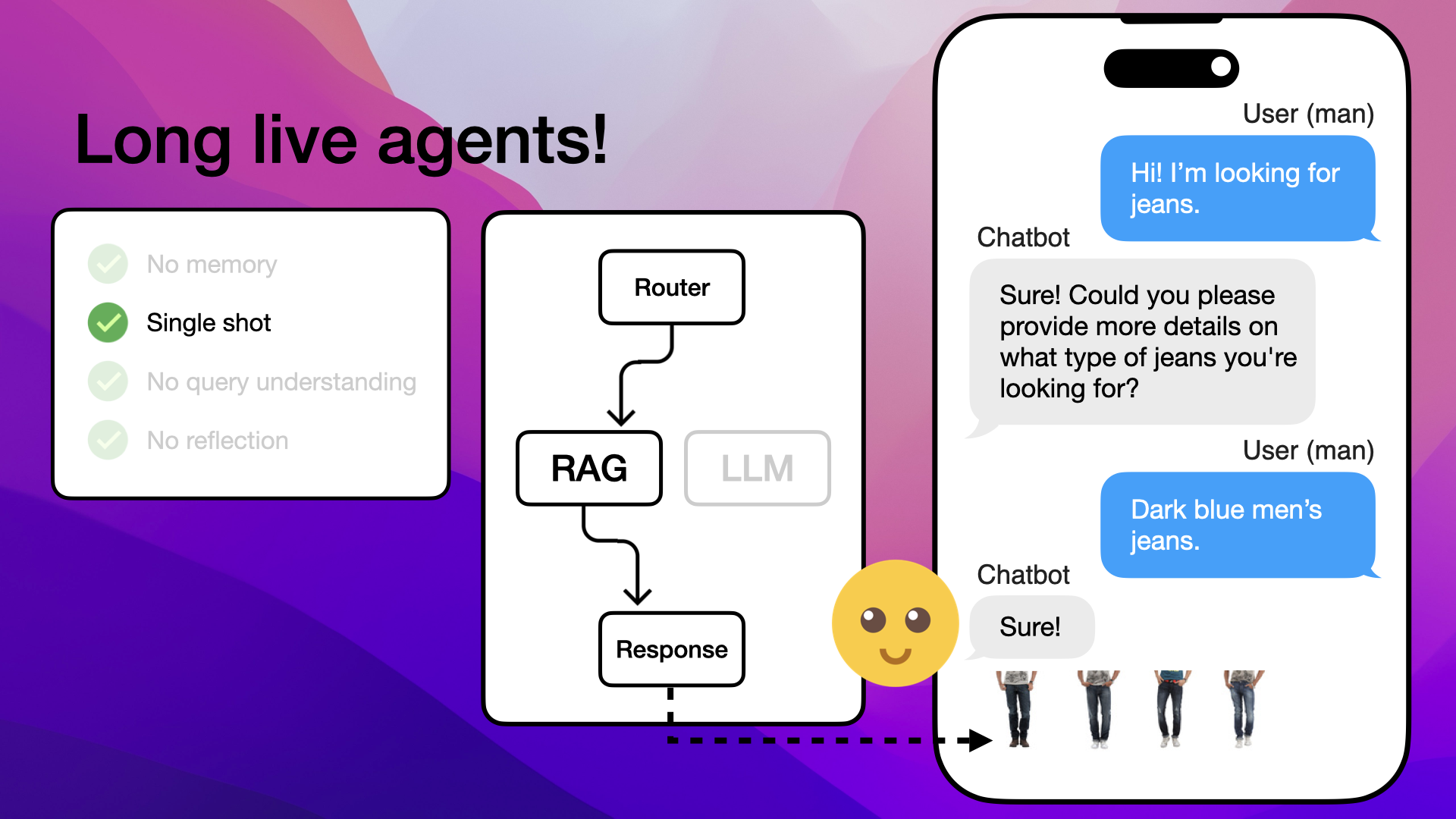

2. Single Shot

Another limitation of naive RAG is single-shot generation. Take this next customer, who happens to be a man looking for jeans and says "Hi! I'm looking for jeans":

A customer is vaguely looking for jeans, what will the naive RAG pull?

This is a vague inquiry. If someone asked you that in a store, you'd probably respond by asking for more information, such as preferred colors or the occasion.

But naive RAG doesn't have that option. It is limited to pulling the neighbors closest to "Hi! I'm looking for jeans" and might therefore respond with irrelevant jeans:

Naive RAG is limited to pulling neighbors for vague customer inquiries, which are often irrelevant

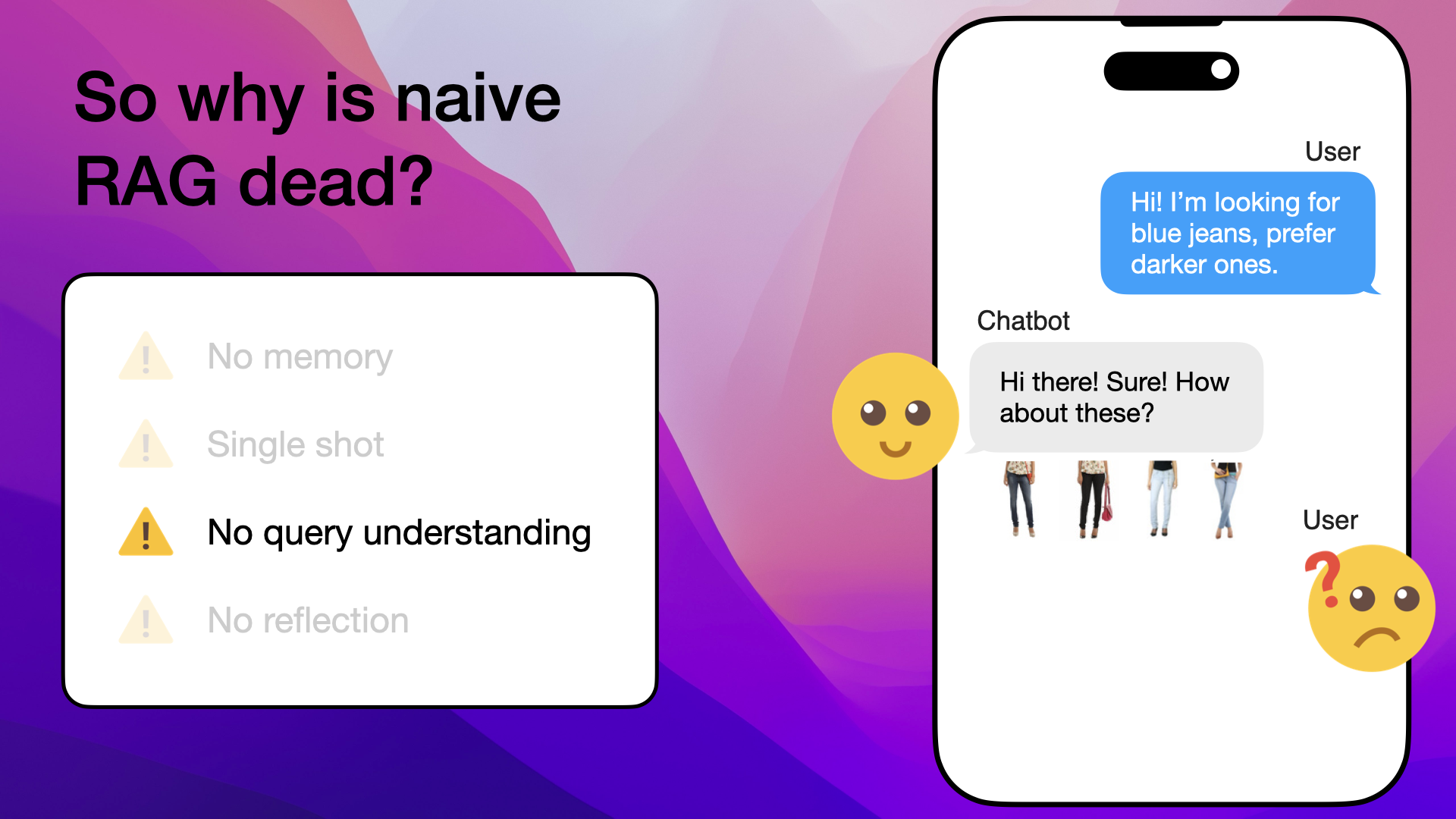

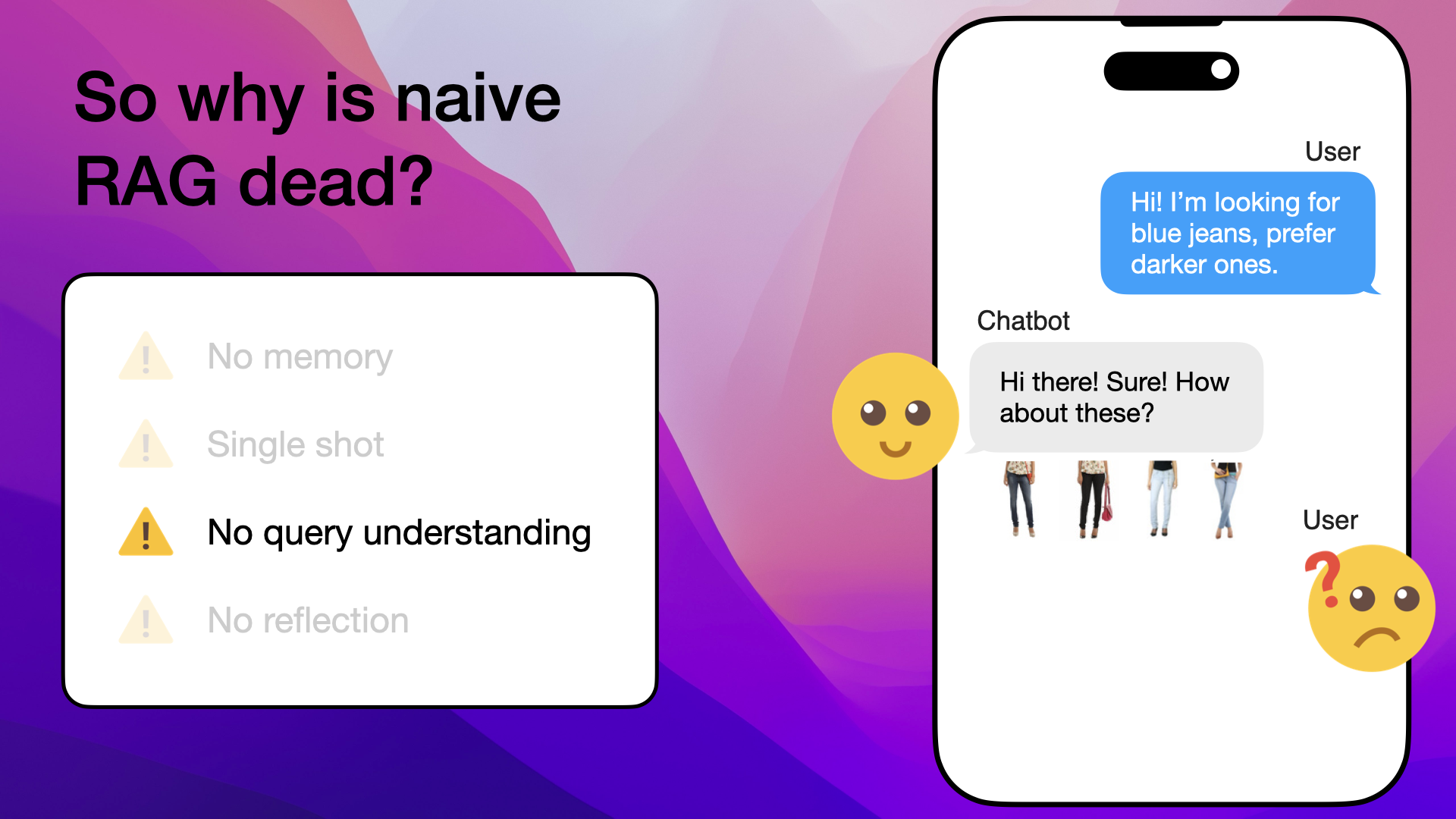

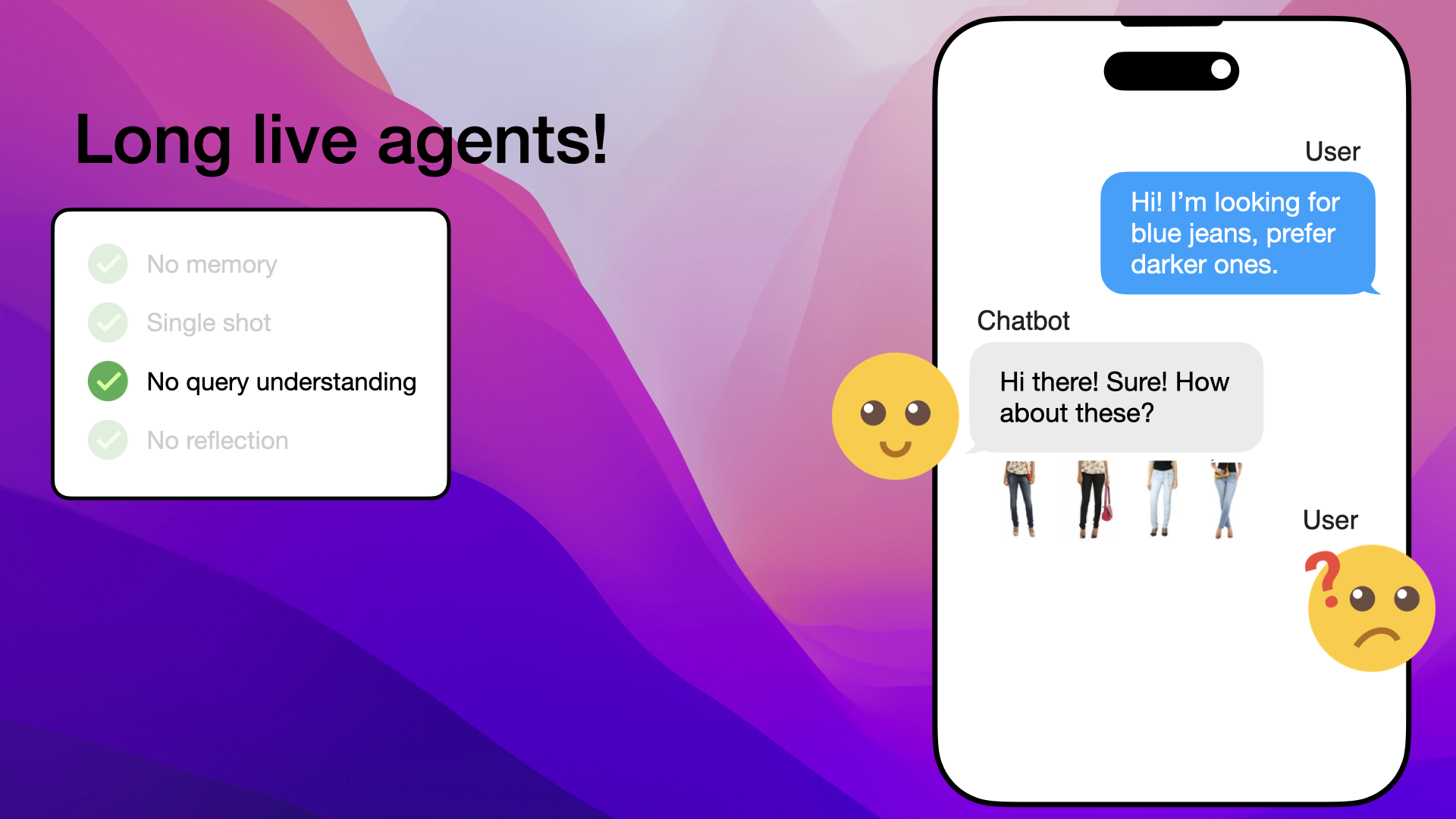

3. No Query Understanding

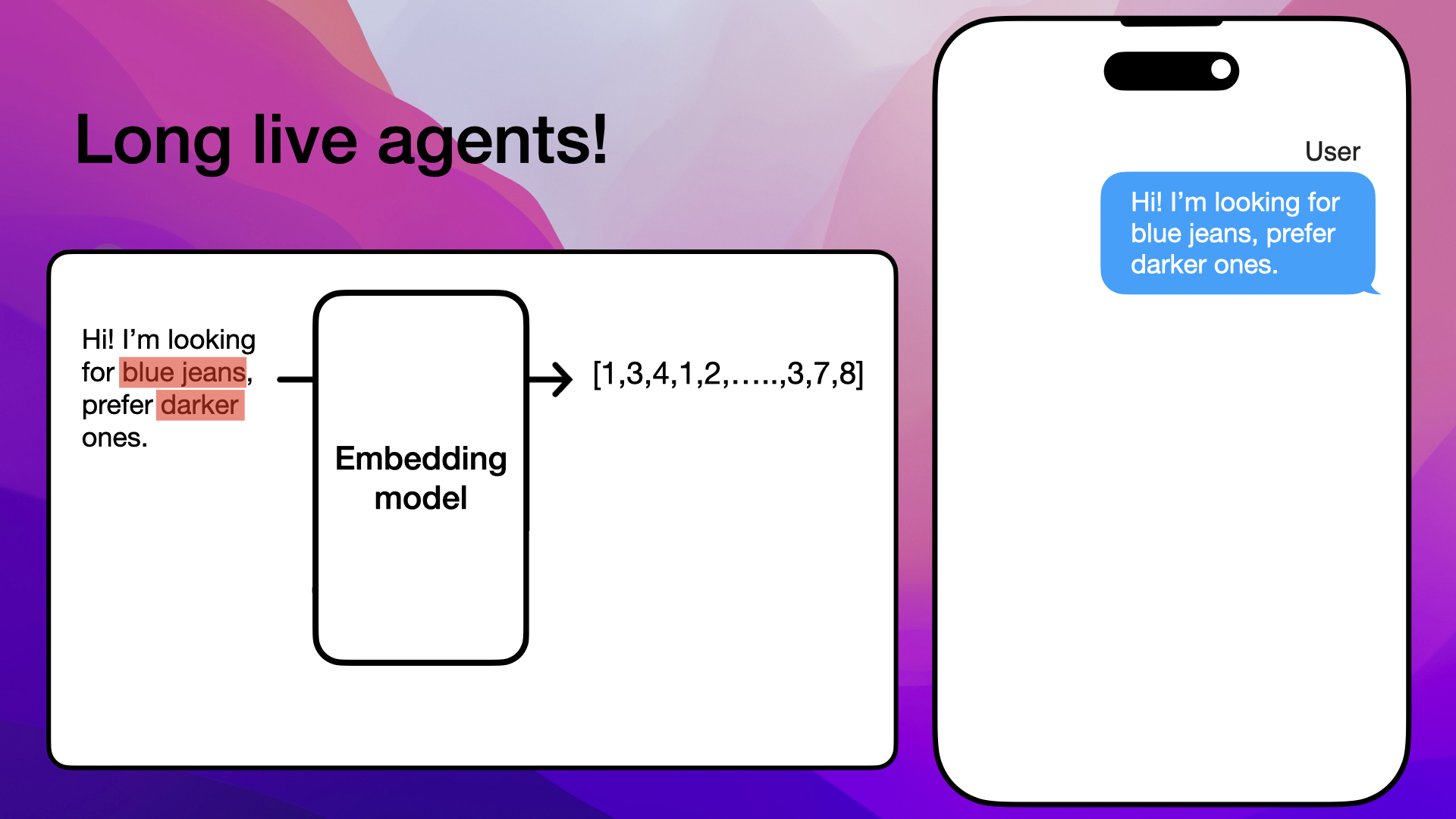

Another limitation of naive RAG is its lack of query understanding. Here we have a new customer asking your bot "Hi! I'm looking for blue jeans, prefer darker ones". Naive RAG can't rephrase the vector query and is forced to pull neighbors for the whole customer inquiry.

The consequence is a mix of product recommendations, some of them irrelevant. The lighter results are technically matches, since the inquiry mentions both "blue" and "darker", but they are not what the customer wants:

Naive RAG can't rephrase the vector search query, giving a mix of irrelevant product recommendations

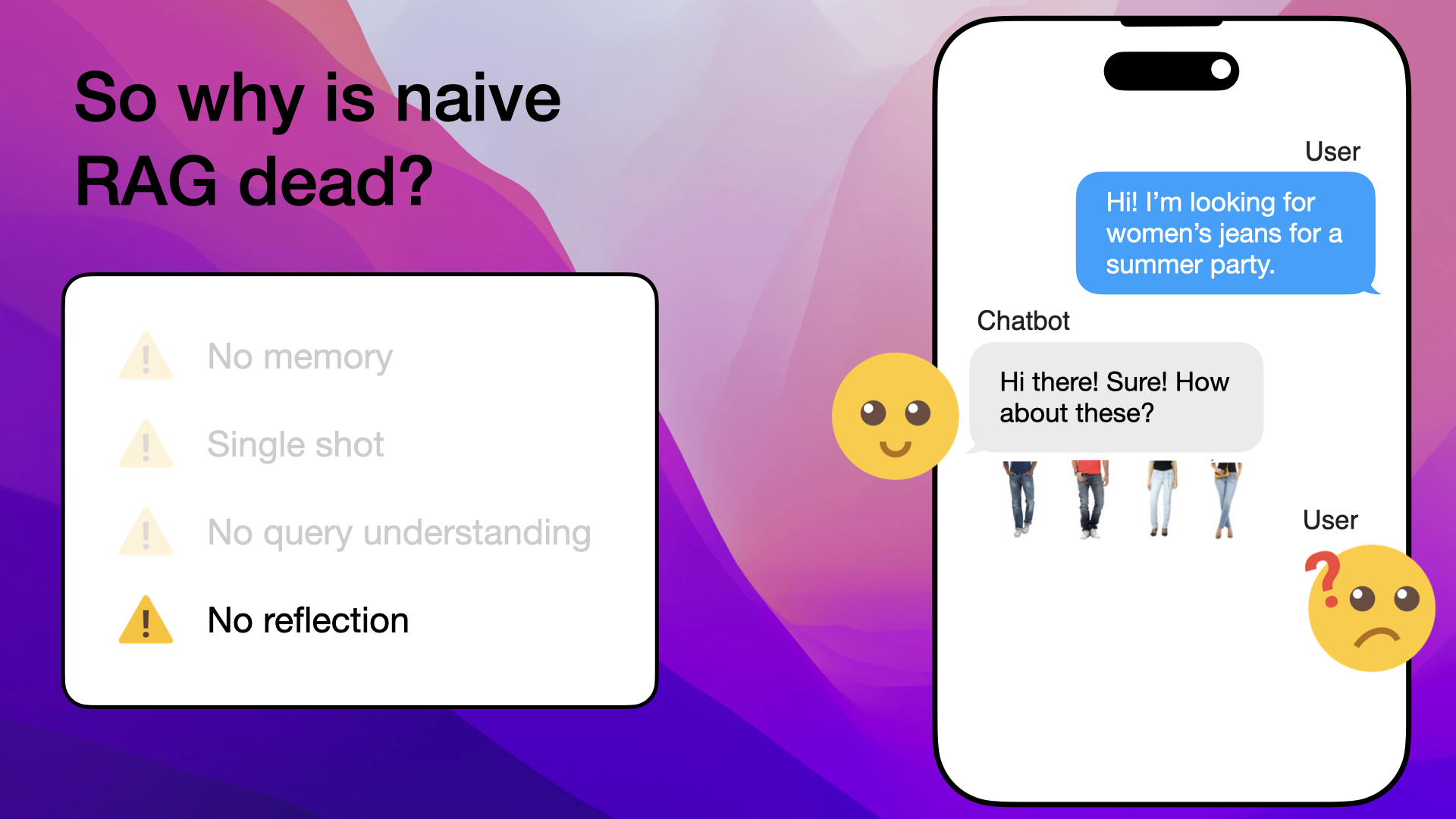

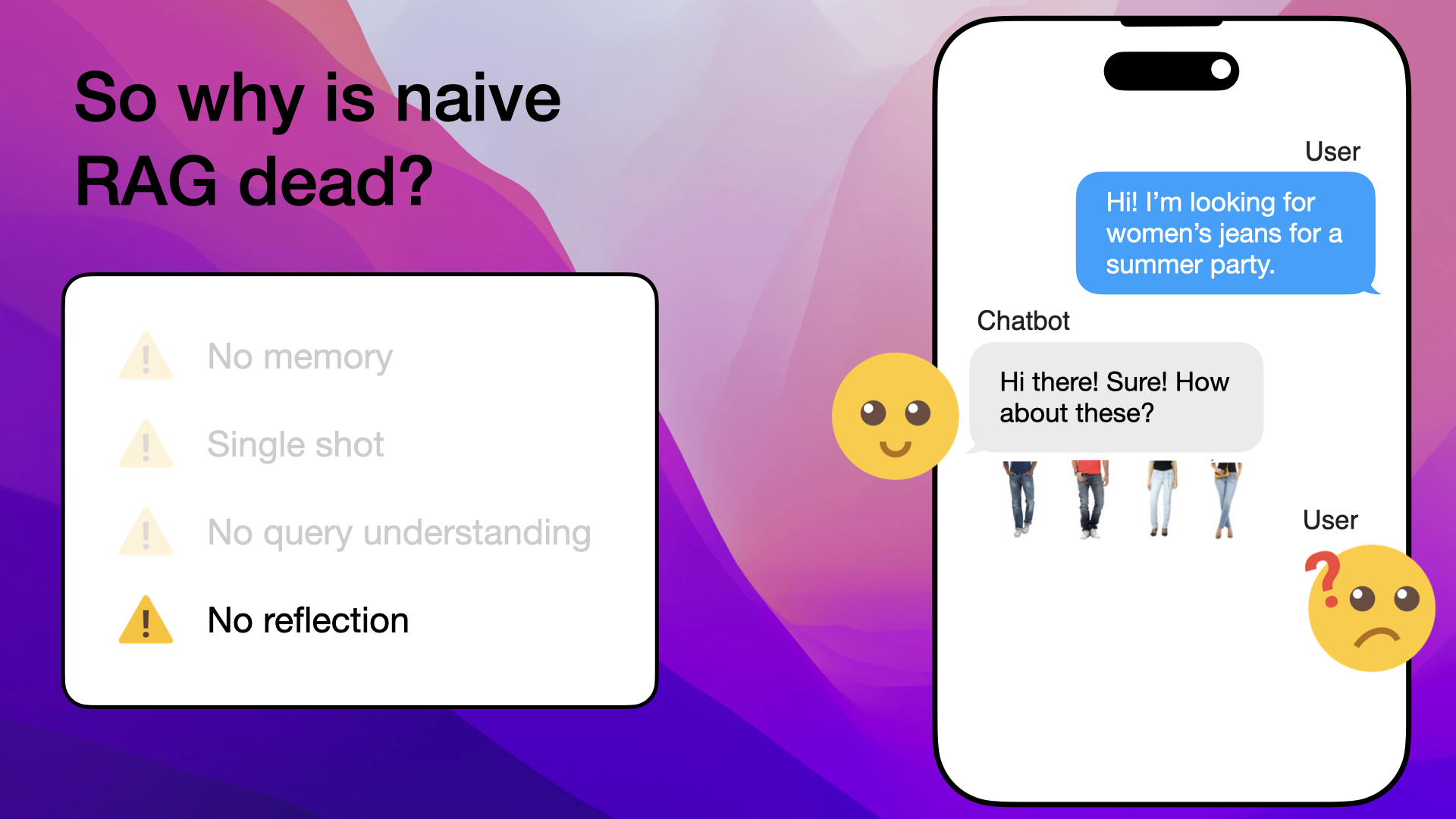

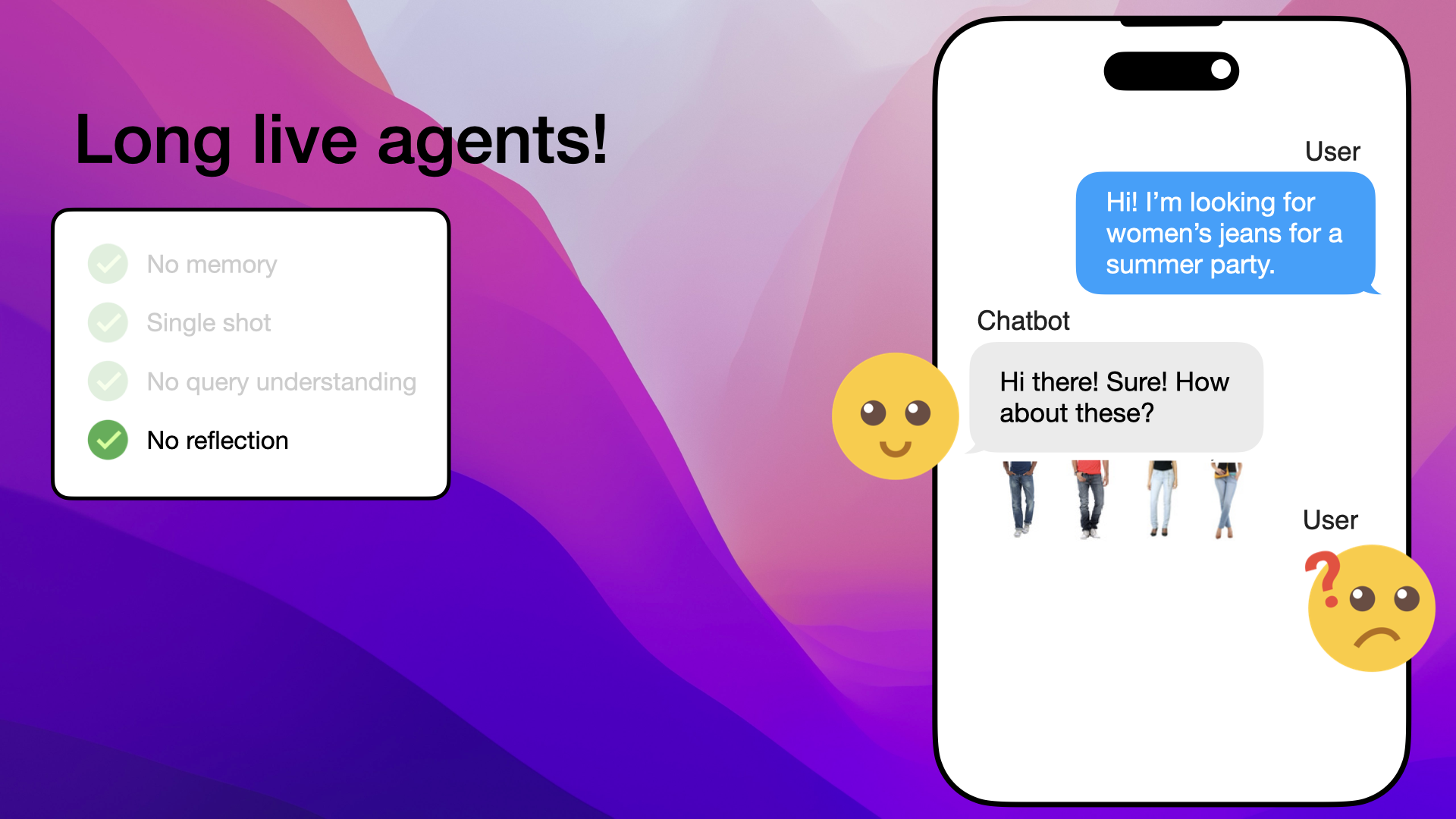

4. No Reflection

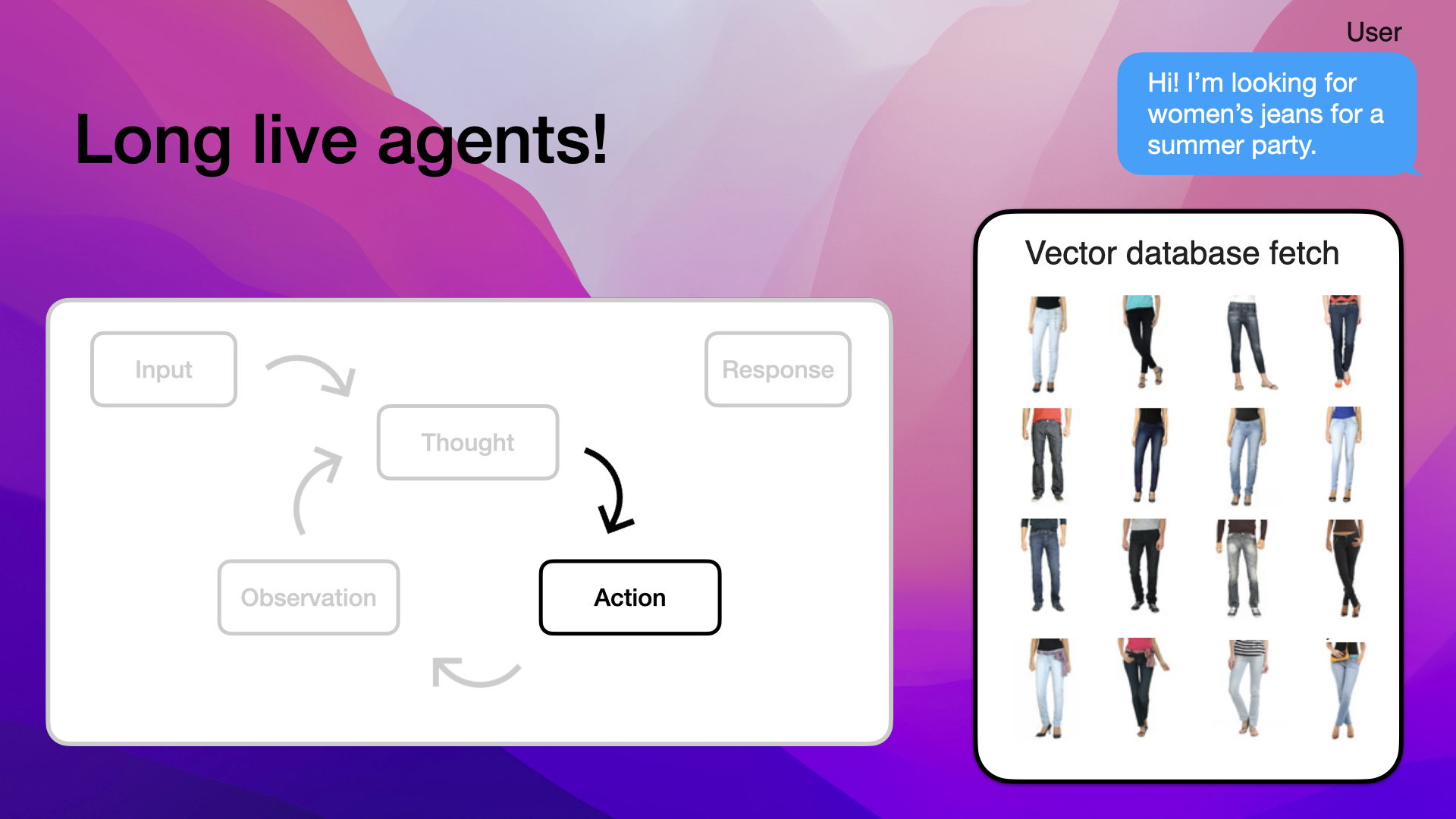

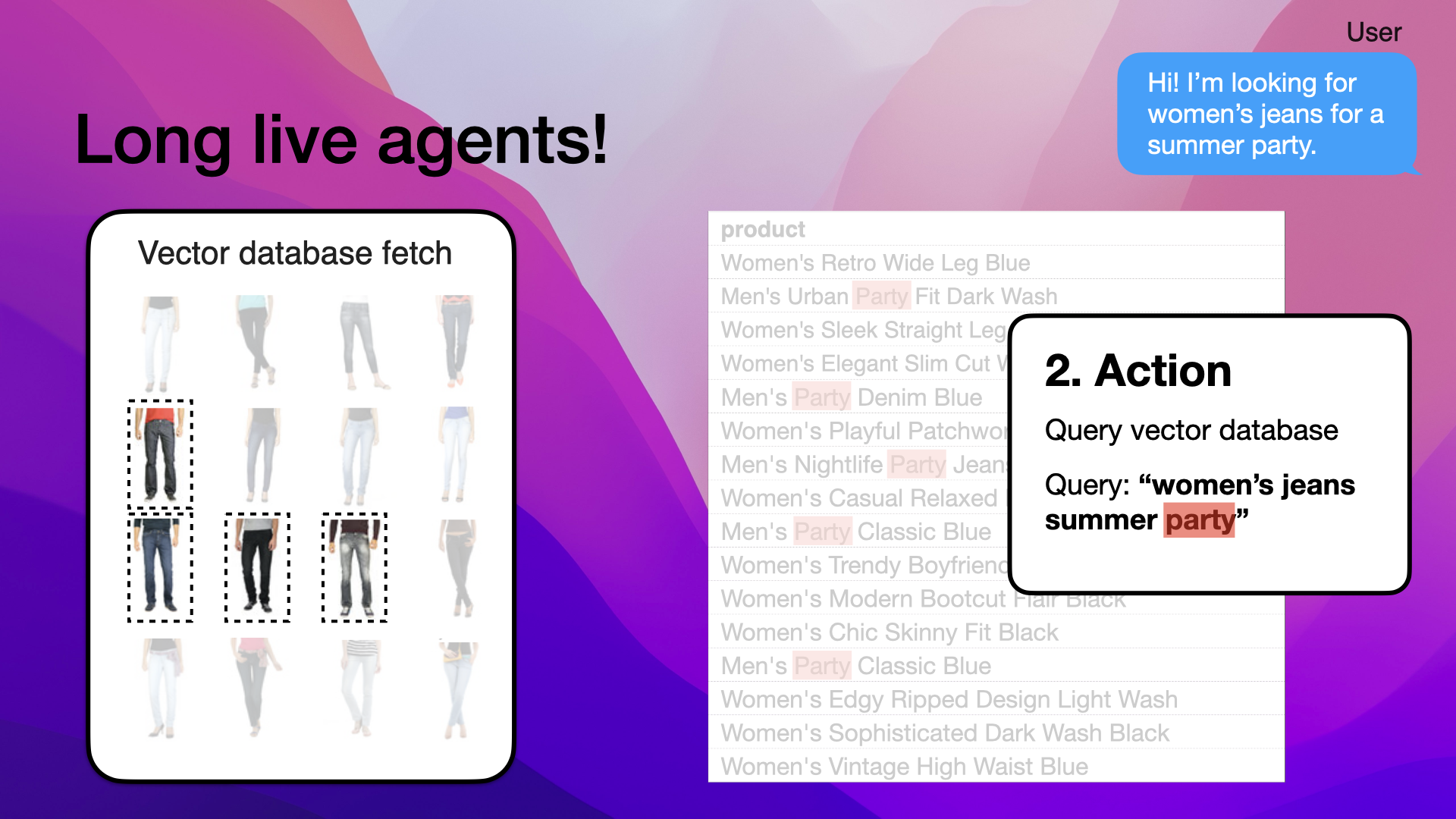

Here's a tricky customer inquiry. A person chatting with your chatbot says "Hi! I'm looking for women's jeans for a summer party":

Tricky customer inquiry, what kind of jeans are suitable for a summer party?

This inquiry is tricky in itself. Think of it as a human: what kind of jeans would you consider suitable for a summer party? You know that it's warm during the summer, so you might suggest light colors and breezy or flowy fabrics.

So the inquiry is tricky because so much more goes into this customer question than the words themselves. Basically, you need to know something about the world to be able to give good product recommendations:

What a summer party might look like to an LLM

Back to the customer inquiry: a naive RAG pipeline can't reflect on what jeans for a summer party could entail, so it will just pull the closest neighbors to that exact query:

Naive RAGs can't reflect or think about what jeans are suitable for a summer party

Summary: 4 Naive RAG Limitations

In summary, these are the four main limitations of naive RAG:

1. No memory

2. Single shot

3. No query understanding

4. No reflection

Let's look at how agents can solve all these shortcomings.

But first, what is naive RAG anyway?

What is Naive RAG Anyway?

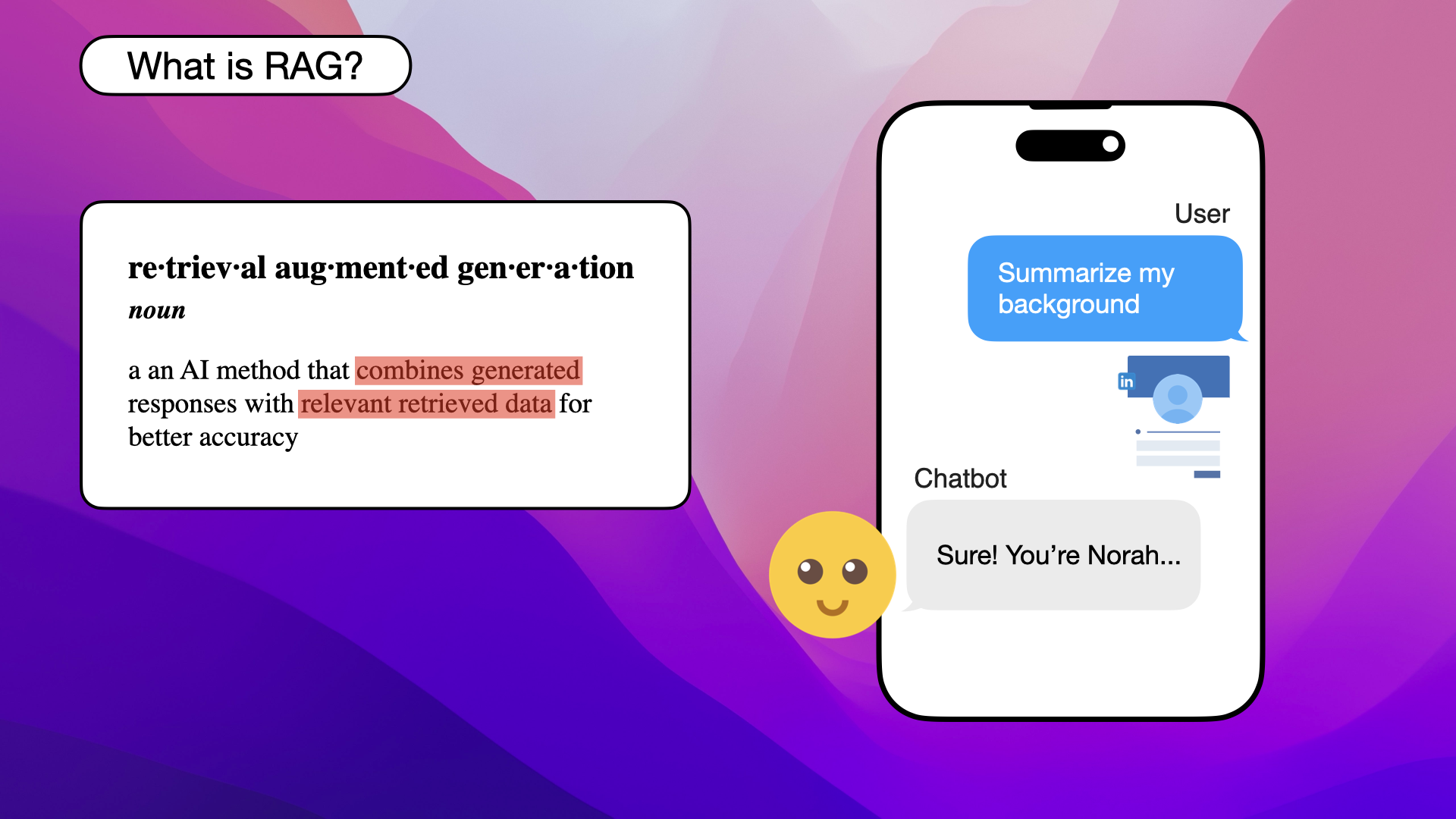

Let's quickly talk about what RAG actually is.

RAG stands for retrieval-augmented generation. It's an AI method where you give the model retrieved data to draw on when it generates a response. You do this for better accuracy:

The definition of RAG

You can think of RAG this way. Let's pretend you ask ChatGPT to summarize your professional background. The problem with this is that ChatGPT doesn't know anything about your previous workplaces or education:

ChatGPT doesn't know anything about your previous work experiences

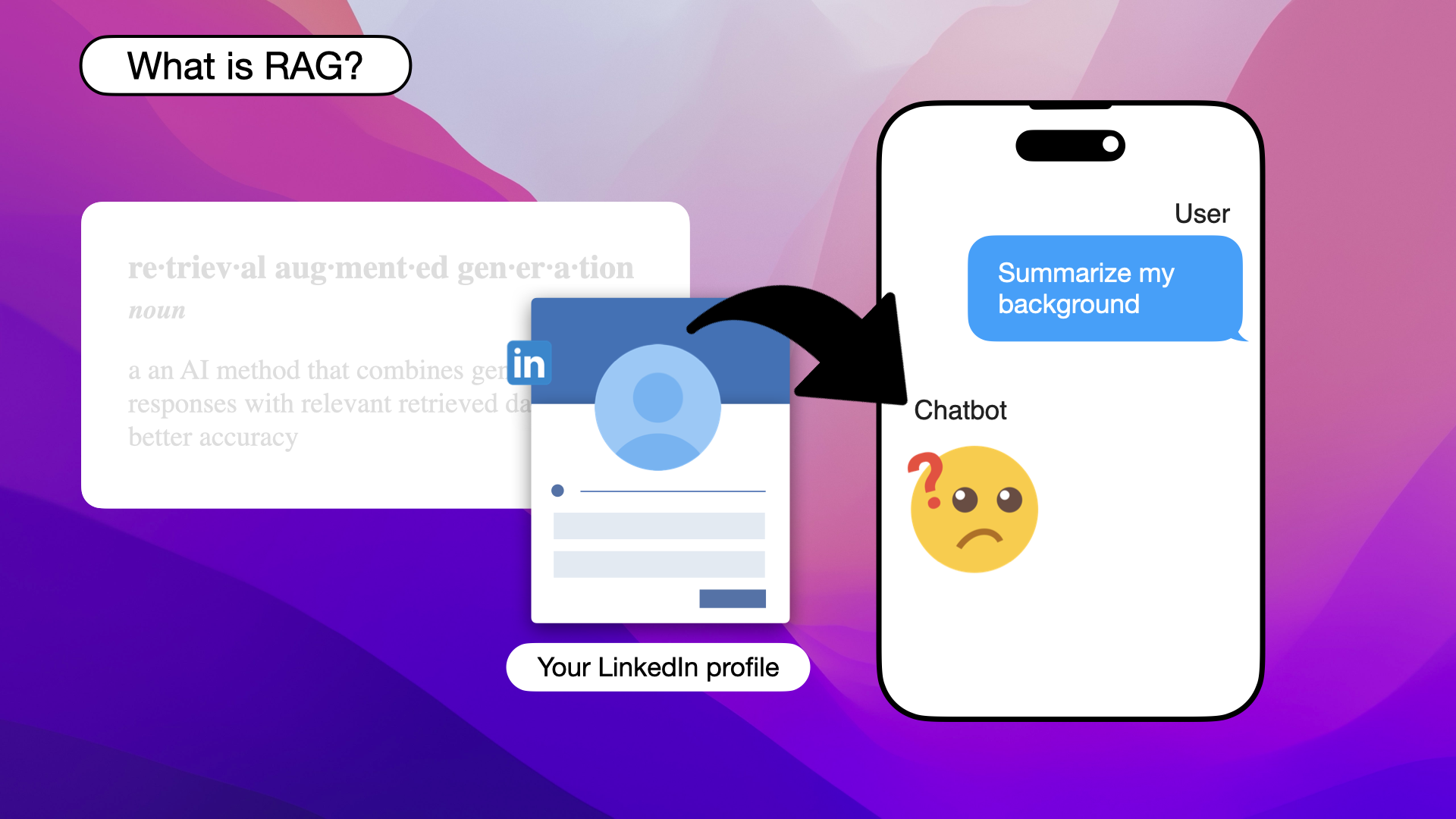

But you still want ChatGPT to summarize your work experience, so what you do is give ChatGPT your LinkedIn profile so that ChatGPT has access to all your previous experience:

Give ChatGPT your LinkedIn profile

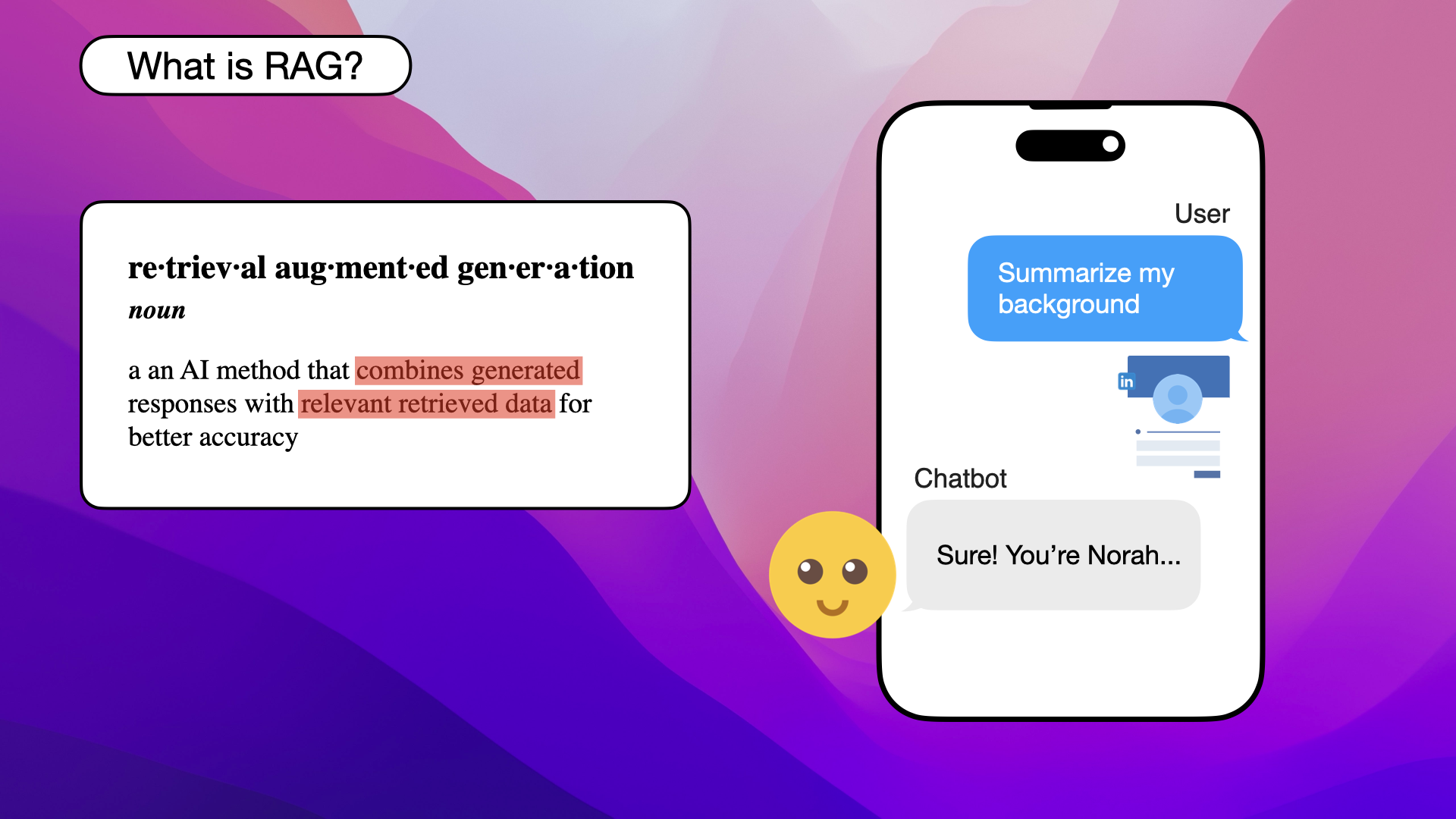

Now that you've given ChatGPT access to your LinkedIn profile, it can easily summarize your professional background and give you an accurate summary:

ChatGPT can easily summarize your professional background once it has access to your LinkedIn profile

In conclusion, RAG is an AI method that combines what the AI model already knows in its parametric memory with relevant retrieved data to achieve better accuracy.

So a very simple RAG pipeline starts with some kind of input, in our case asking ChatGPT to summarize our professional background. We then add retrieved data, here our LinkedIn profile, and finally get a response.

A naive RAG pipeline combines the generated response with retrieved data without any advanced optimization:

A naive RAG pipeline combines the generated response with retrieved data without any advanced optimization
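To make the pipeline concrete, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than a real implementation: the tiny `CATALOG`, the word-overlap `score` function standing in for vector similarity, and the `build_prompt` helper. A real pipeline would call an embedding model, a vector database, and an LLM instead.

```python
# Toy naive RAG pipeline: input -> retrieval -> prompt for the LLM.
# CATALOG, score() and build_prompt() are hypothetical stand-ins.

CATALOG = [
    "women's light blue slim-fit jeans",
    "women's dark blue boot-cut jeans",
    "men's dark blue slim-fit jeans",
    "men's dark grey straight jeans",
]


def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    words = (w.strip("!?.,:\"") for w in text.lower().split())
    return {w for w in words if w}


def score(query, doc):
    """Word overlap; stands in for vector similarity in this sketch."""
    return len(tokenize(query) & tokenize(doc))


def retrieve(query, k=2):
    """Return the k best-scoring products for this single query."""
    ranked = sorted(CATALOG, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, docs):
    """Combine the retrieved products with the customer message."""
    context = "\n".join("- " + doc for doc in docs)
    return "Recommend from these products:\n" + context + "\n\nCustomer: " + query


question = "Hi! I'm looking for women's jeans"
prompt = build_prompt(question, retrieve(question))
print(prompt)  # this string is what would be sent to the LLM
```

Note that `retrieve` only ever sees the latest message, with no history, no rewriting, and no second attempt. That is what makes the pipeline naive.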

So that's RAG and naive RAG, but before we move on to agents and how they solve the four main naive RAG limitations, let's quickly talk a little bit about vectorization, because it is a central part of RAGs.


What is Vectorization?

Let's go back to your made-up online jeans store Denim Dreams again:

Your made-up online jeans store Denim Dreams

Here's what we're trying to achieve, a friendly AI agent that helps answer customer inquiries:

The friendly AI agent we're trying to build

If we remember the previous example we had when we asked ChatGPT to summarize our professional background, we could easily add our LinkedIn profile to get an accurate summary:

ChatGPT can easily summarize your professional background once it has access to your LinkedIn profile

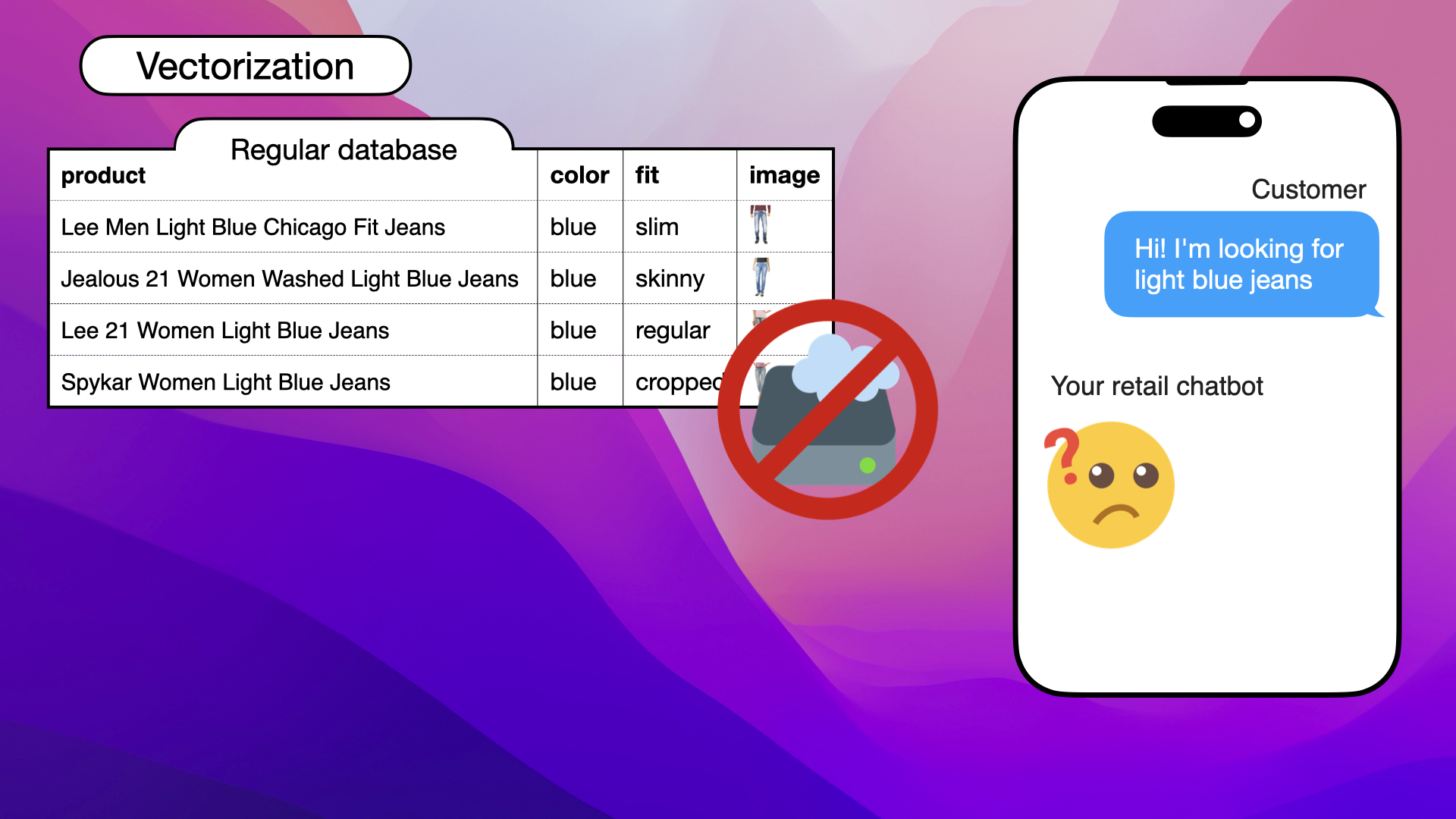

Now it gets a bit trickier, because when we have hundreds, or thousands, of jeans, we can no longer just give all our jeans or products to the chatbot:

It gets trickier when you have hundreds or thousands of products

We can no longer give all of our products to the chatbot because they won't fit in the context window. Even if they did, filling the context with all our jeans would be both inefficient and costly, and the AI model would have a hard time sifting through the products and accurately recommending jeans.

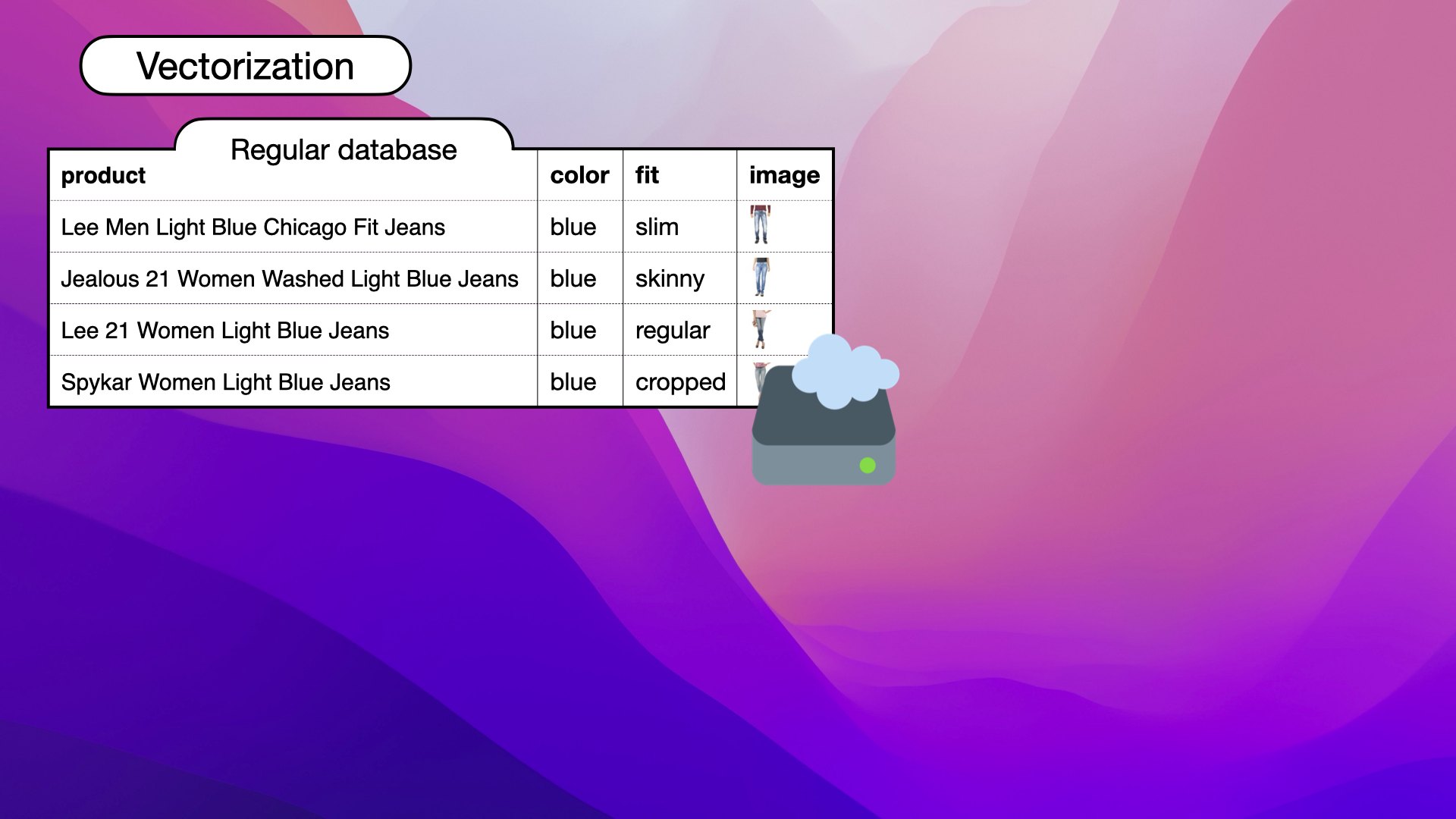

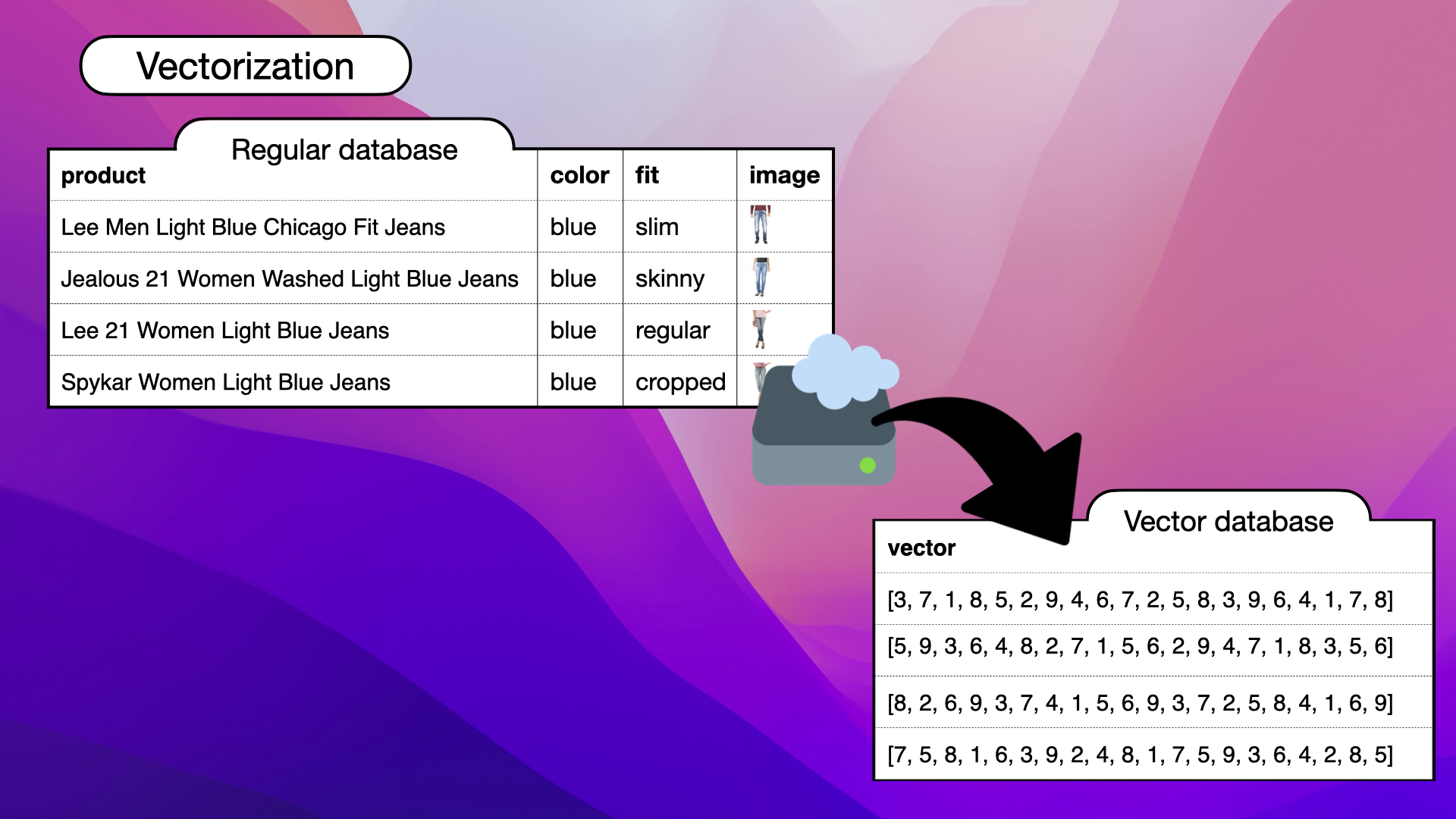

So what do you do? Well, you probably already have your jeans in a regular database:

You already have your jeans in a regular database

But we can't use the regular database directly with our AI chatbot, since there's no way for it to sift through that data natively either:

Your chatbot can't natively sift through your regular database

Instead, what we want to do is populate a new type of database with our jeans data:

We want to store our jeans data in a new type of database

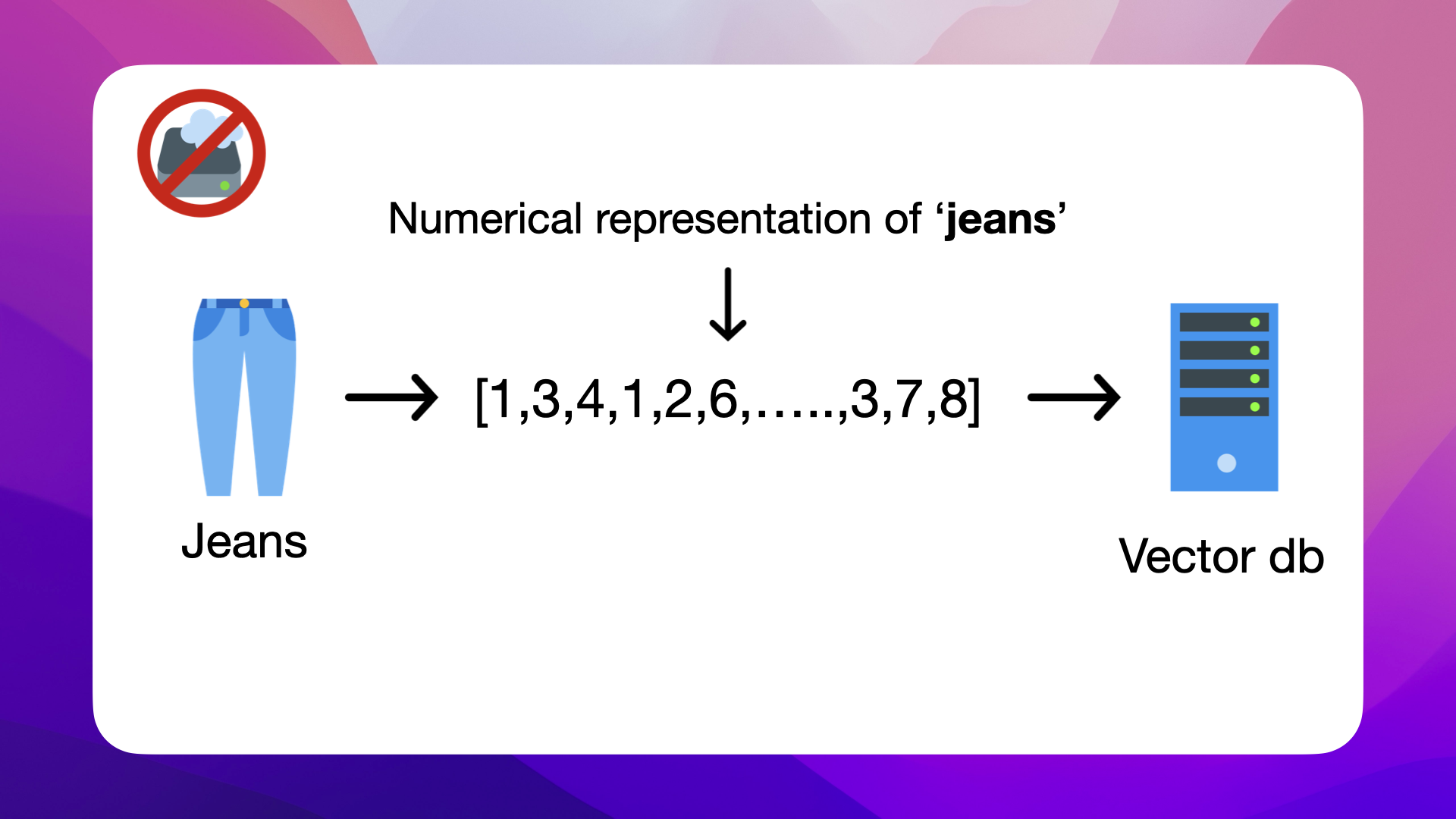

The new database we're using is a vector database. To store our jeans data in this new format, we need to convert each pair of jeans into a vector, as vectors are the format required by the vector database:

A vector is the numerical representation of each product, we want to store the jeans as vectors in a vector database

What are Vector Embeddings?

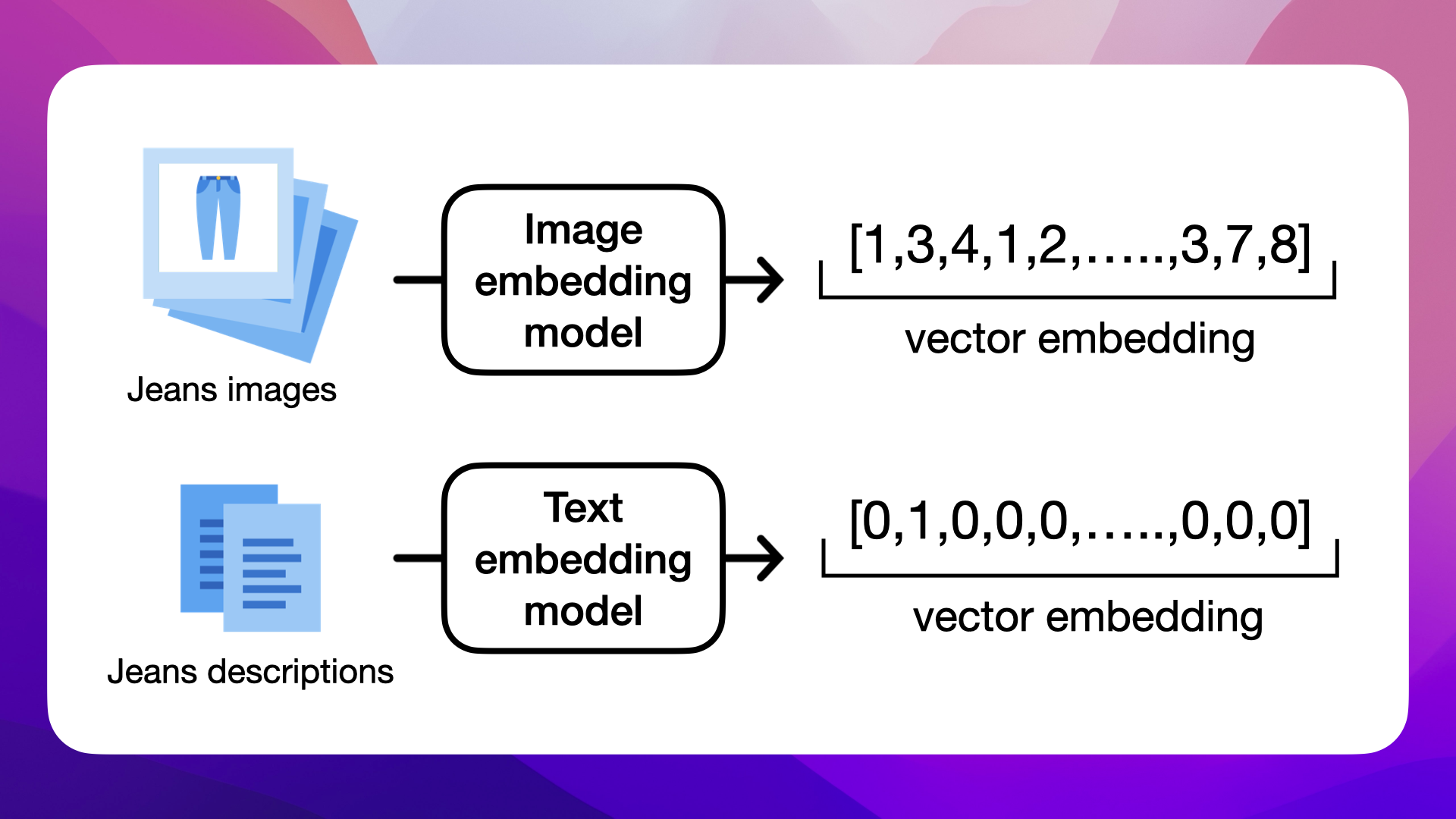

A vector embedding is a way to represent images or texts as numbers. These vectors capture the meaning and relationships inherent in the data. For instance, we can create vectors for both the jeans images and their descriptions.

An embedding model, a neural network closely related to an LLM, performs this conversion:

Vectors are the numerical representation of an image or a text

Numerous embedding models are available, each with unique capabilities. For example, OpenAI's text-embedding-ada-002 (often just called Ada) is a widely used model for text embeddings.

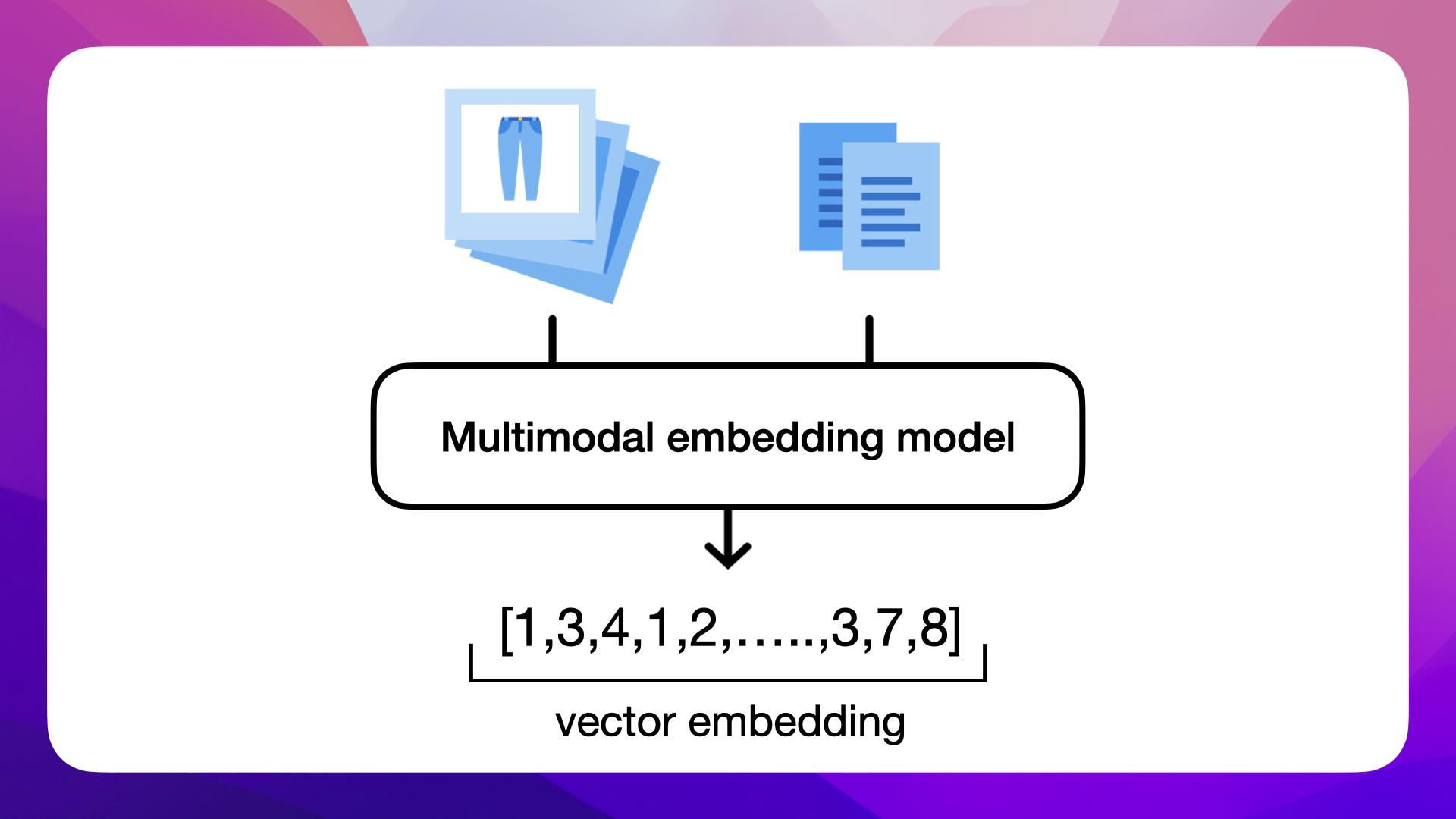
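To show what "text in, numbers out" means, here is a deliberately simplified sketch. The `VOCAB` list and the `toy_embed` function are made up for illustration: they just count words from a fixed vocabulary. A real embedding model produces dense, learned vectors with hundreds or thousands of dimensions, and captures meaning far beyond word counts.

```python
# Toy "embedding": one dimension per vocabulary term (hypothetical vocabulary).
VOCAB = ["light", "dark", "blue", "grey", "slim-fit", "boot-cut", "women's", "men's"]


def toy_embed(text):
    """Turn a text into a fixed-length list of numbers by counting VOCAB terms."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


vector = toy_embed("Women's dark blue slim-fit jeans")
print(vector)  # [0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
```

Every product description, whatever its length, ends up as a vector of the same size, which is what lets a vector database compare them.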

Multimodal Embedding Models

Multimodal embedding models can convert texts, images, or a combination of both into vectors. Some can even handle video data, all within the same model:

Multimodal embedding models can vectorize texts, images, or a combination of both

Amazon Titan Multimodal Embeddings, available through Amazon Bedrock, is an example of a multimodal embedding model from AWS.

This multimodal capability enables you to match product images with textual inquiries.

For example, if a customer searches for red jeans, multimodal embeddings will help you find images of red jeans rather than just descriptions mentioning red jeans.

https://docs.aws.amazon.com/bedrock/latest/userguide/titan-multiemb-models.html

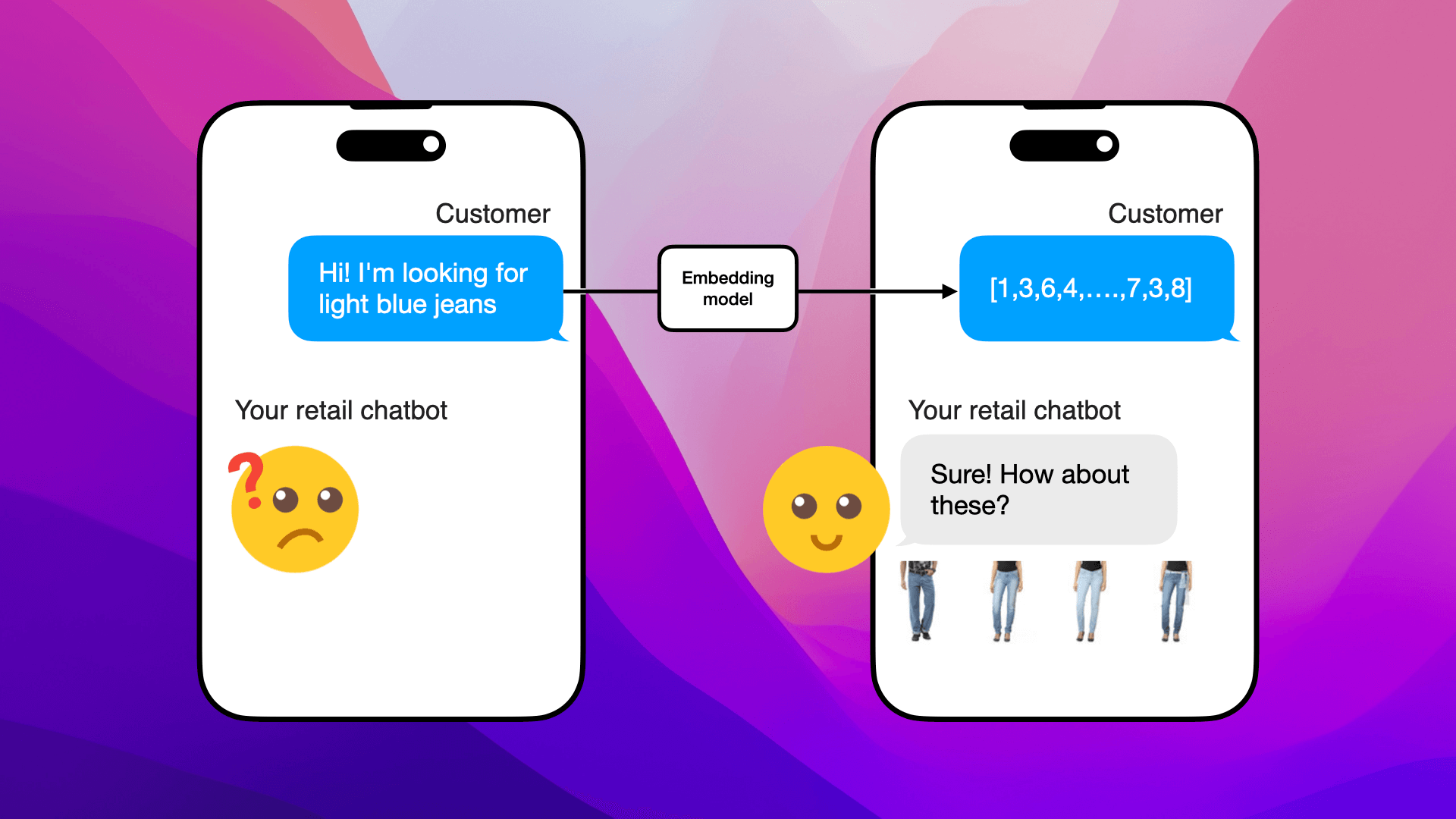

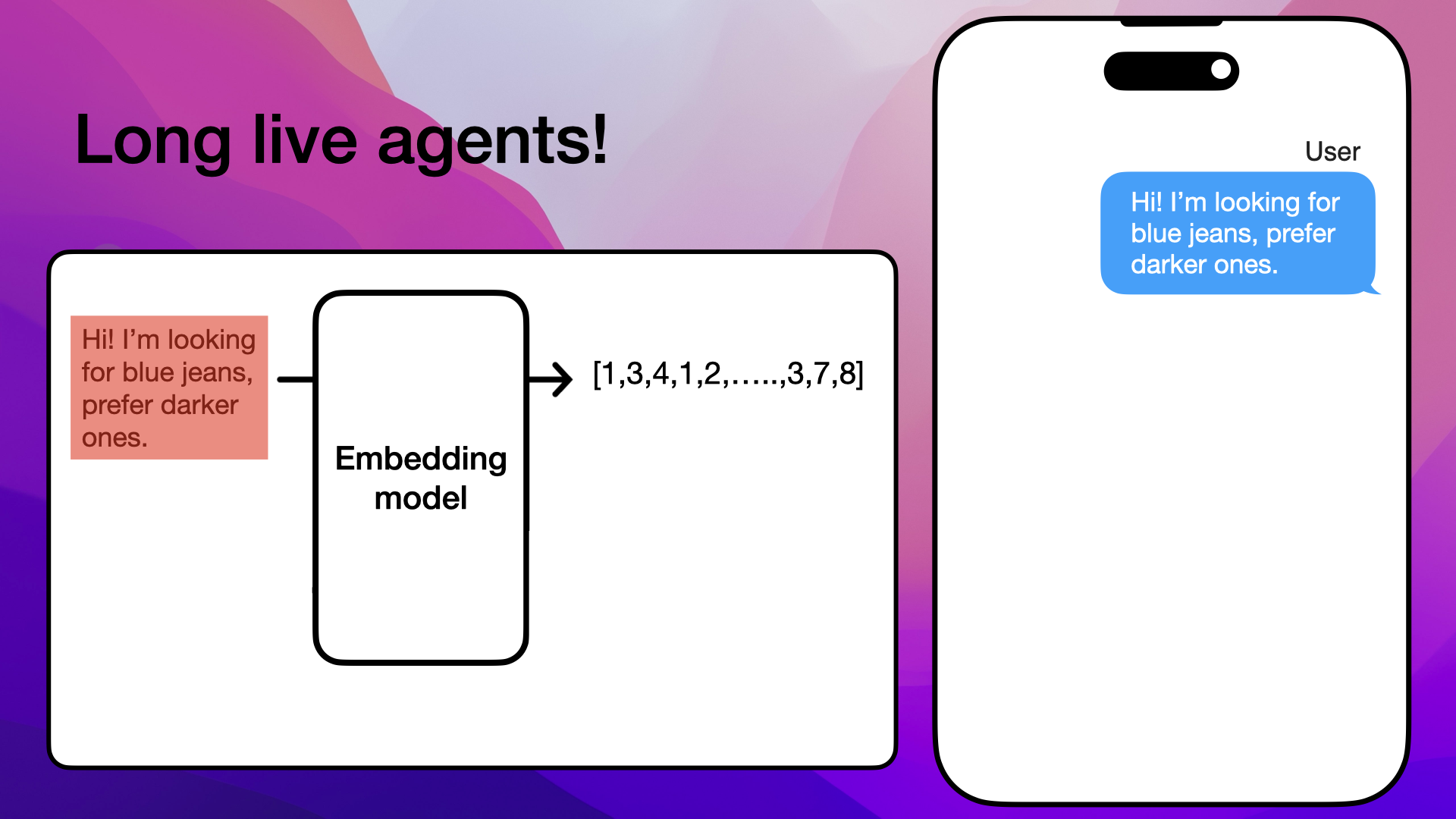

Vectorizing Customer Inquiries

Customer inquiries also need to be converted into vectors, using the same embedding model we use for our jeans data so that both end up in the same vector space:

Customer inquiries need to be vectorized to be matched with the most relevant products

Now that we've discussed vectors—numerical representations of images, texts, or both—let's dive deeper into the concept of vector space.

The vector space is a multidimensional space where our jeans data, represented as vectors, are positioned based on their similarities, with similar items clustered closer together.

What is the Vector Space?

We want to vectorize our jeans because the language model that converts product descriptions and images into vectors will cluster related products closer to each other in a high-dimensional vector space.

Visualizing Jeans in Vector Space

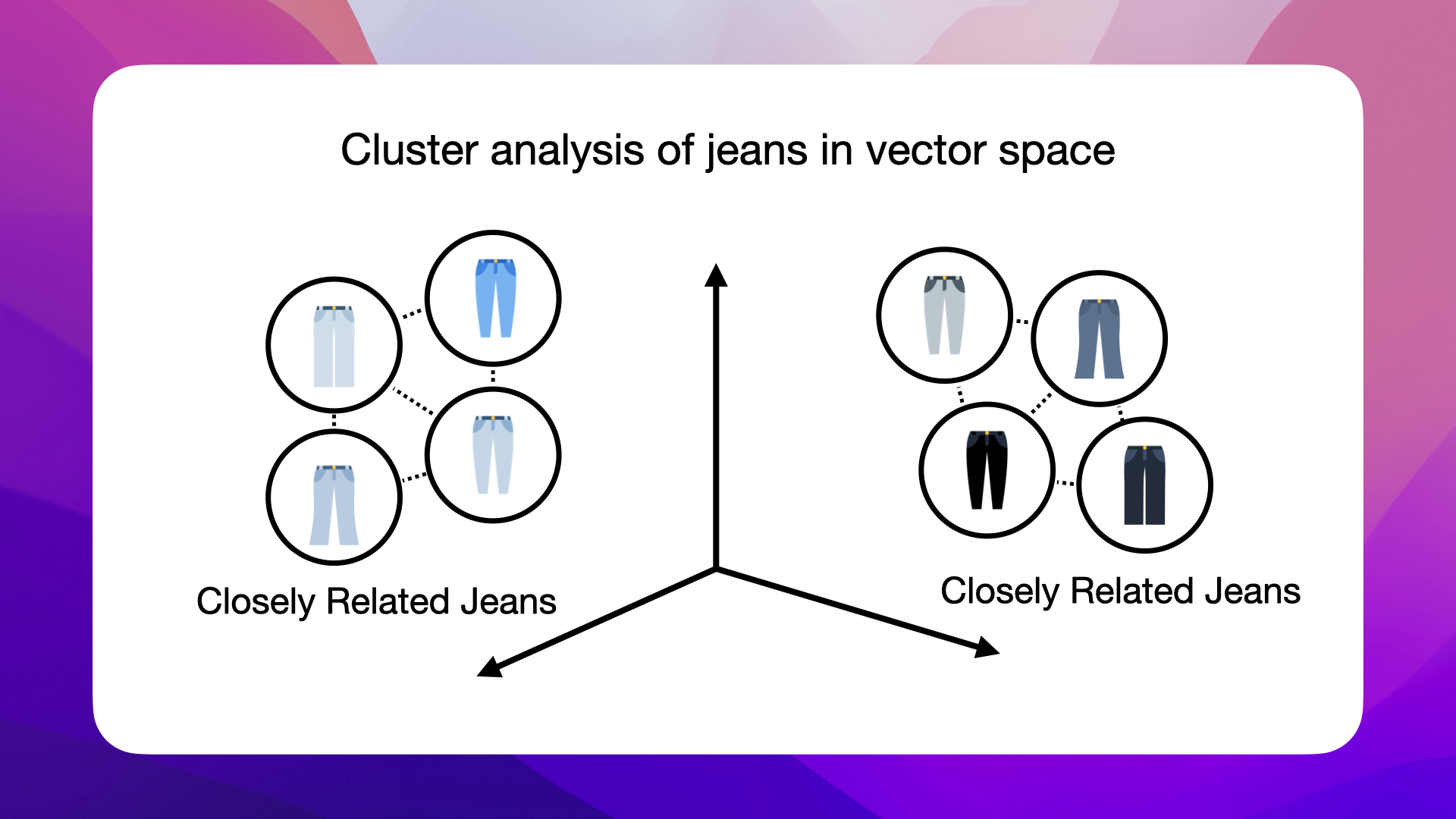

Here's an illustration of how closely related jeans are clustered in a high-dimensional space:

Closely related jeans are clustered by the embedding model in a high-dimensional vector space

Light blue jeans form one cluster, indicating their similarity to each other, while being distinct from clusters of dark blue and grey jeans. The vector space also reveals the relationships between different styles - notice how slim-fit jeans are positioned relative to boot-cut ones, reflecting their shared attributes and differences.

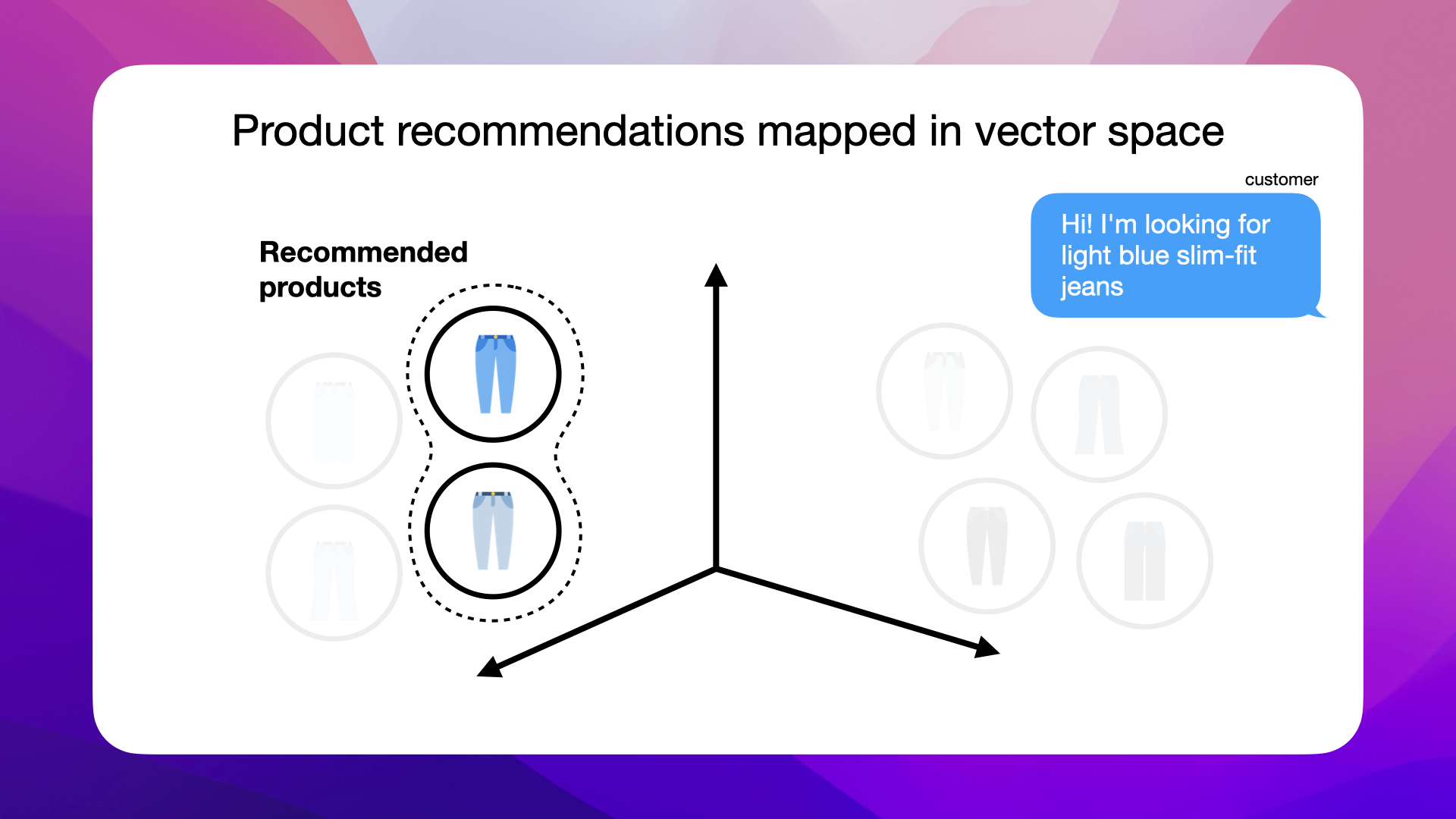

If a customer asks about light blue slim-fit jeans, we can recommend jeans that fit that description because their vectors are similar and therefore close to each other in the high-dimensional vector space:

The vector of light blue slim-fit jeans is similar to jeans with that description, hence close to each other in the high-dimensional vector space

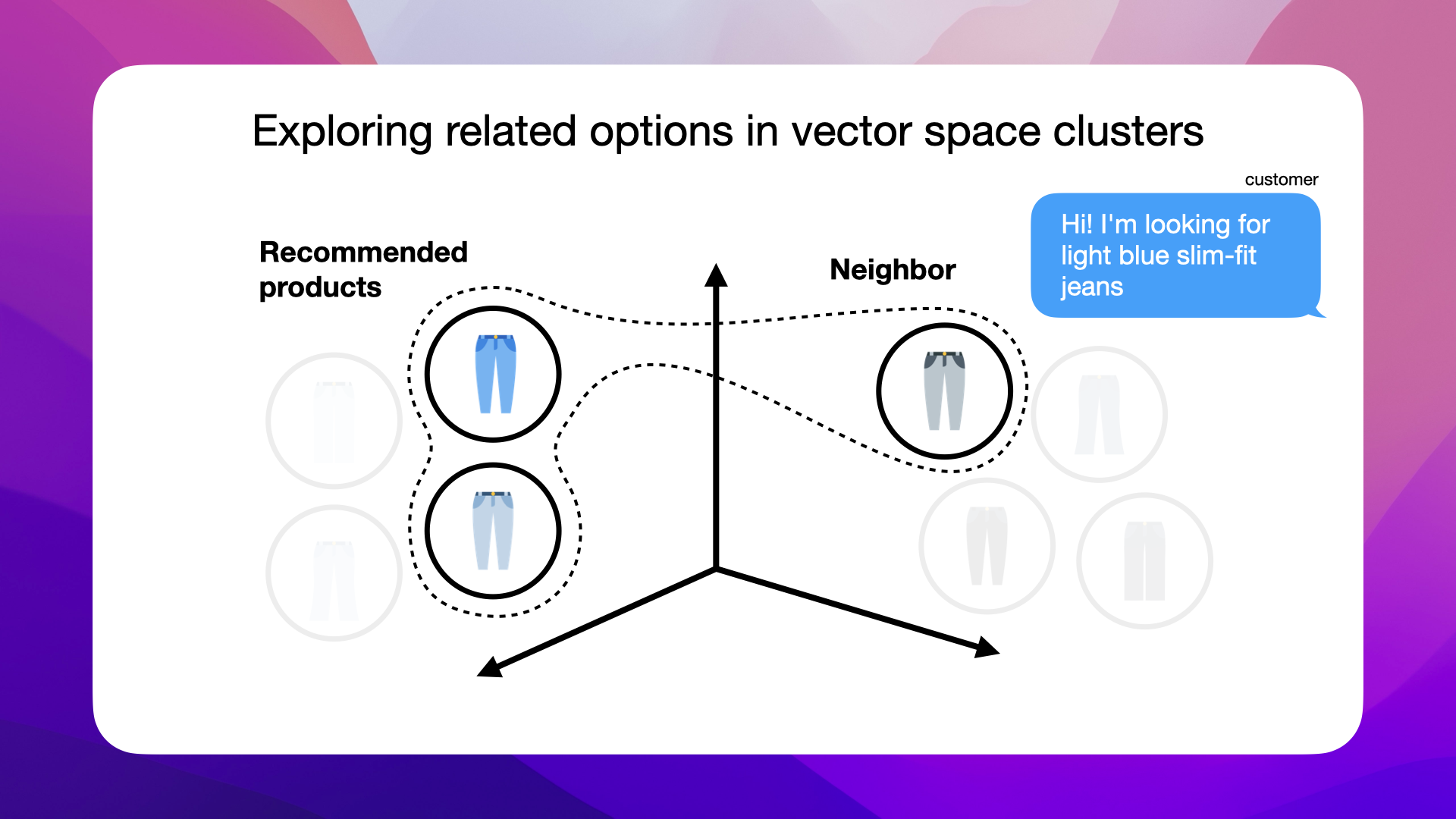

Another benefit is that we'll be able to recommend related options that are not immediately obvious. For example, if a customer is asking for light blue slim-fit jeans, the nearby vectors, known as neighbors, might also include grey slim-fit jeans that could be a great fit too:

The vector clusters can recommend similar options, like grey slim-fit jeans, highlighting the power of semantic similarity

These recommendations are not obvious from the wording alone; they surface because the products are semantically similar. The meaning behind the products is similar, and capturing this semantic similarity is challenging with regular databases.

This is why we're vectorizing our jeans.
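The idea of "close to each other in vector space" is commonly measured with cosine similarity. The sketch below uses made-up three-dimensional vectors (roughly lightness, blueness, and slimness) purely for illustration. Real embeddings have many more dimensions, and their axes are not human-readable like this.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical vectors: (lightness, blueness, slimness)
query = [0.9, 0.8, 0.9]              # "light blue slim-fit jeans"
light_blue_slim = [0.85, 0.9, 0.95]
grey_slim = [0.6, 0.3, 0.9]
dark_blue_bootcut = [0.1, 0.9, 0.1]

for name, vec in [
    ("light blue slim-fit", light_blue_slim),
    ("grey slim-fit", grey_slim),
    ("dark blue boot-cut", dark_blue_bootcut),
]:
    print(name, round(cosine_similarity(query, vec), 3))
```

With these numbers, the light blue slim-fit pair scores highest, the grey slim-fit pair comes second as a plausible neighbor, and the dark blue boot-cut pair is furthest away.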

Why Not Just Use Keyword Search?

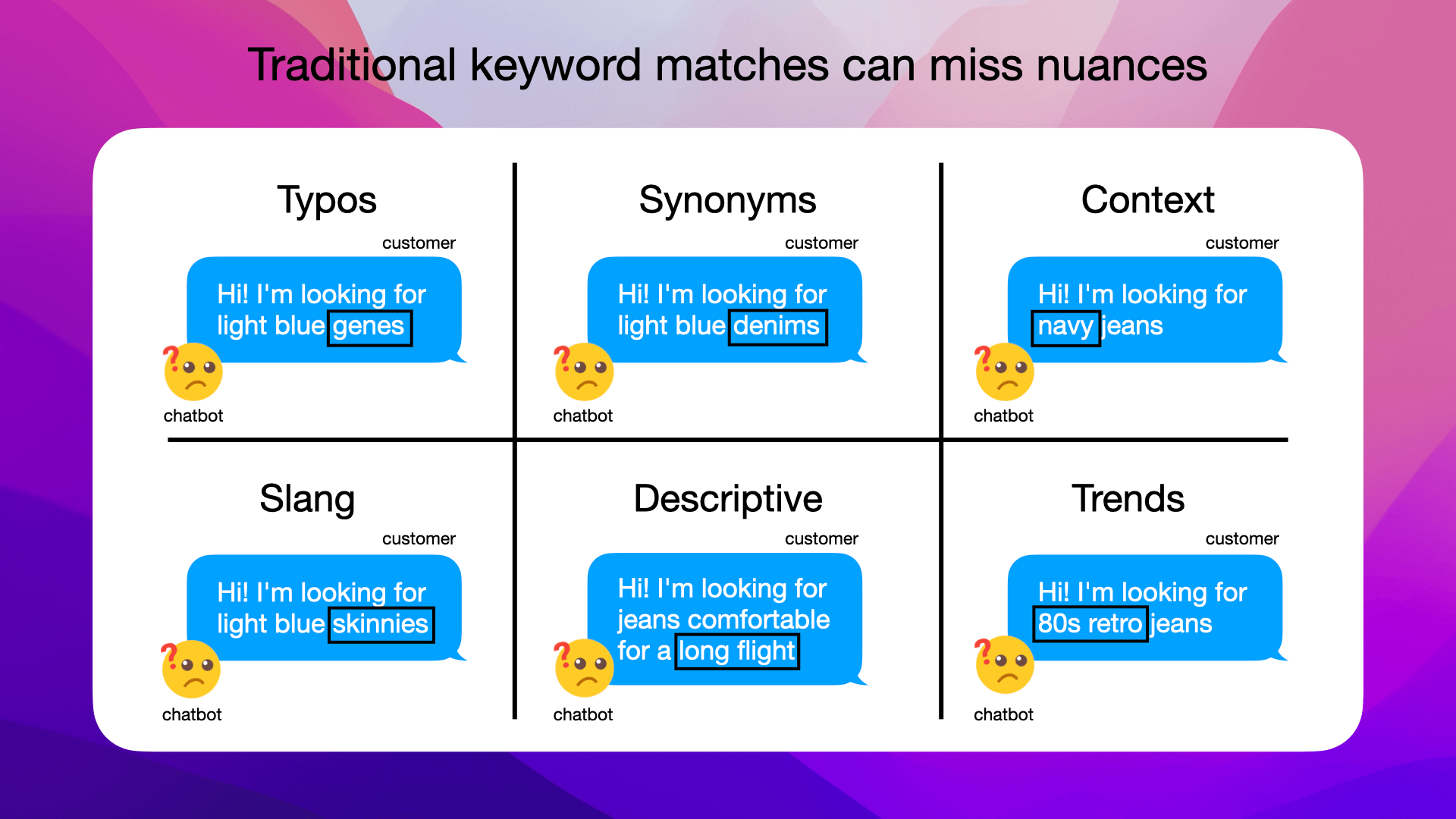

Relying only on keyword matches can lead to poor customer experiences. Keyword search can fail or underperform in several scenarios:

1. Typos

Even a small typo can derail a search. When "genes" is typed instead of "jeans," a keyword match might return irrelevant products or no results at all.

2. Synonyms

Different words for the same item, like "denim" for "jeans," might not be recognized by a strict keyword match system, narrowing the search results.

3. Context

A color search for "navy jeans" could be misinterpreted as a military uniform if the system doesn't understand "navy" as a color in this context.

4. Slang

Fashion terms evolve, and what's known as "skinnies" might not be matched if the system only knows "skinny jeans."

5. Descriptive Searches

A request for "jeans comfortable for a long flight" aims for a specific use-case which keyword search isn't nuanced enough to understand.

6. Trends

Keywords like "80s retro jeans" imply a style that might be lost on a simple search algorithm not tuned to fashion trends.

Each of these examples shows the common problems of basic keyword-matching systems. They highlight the need for a smarter approach that can understand and process the nuances of human language and intent in retail.
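A few of the failure modes above are easy to reproduce with a strict keyword matcher. This is a small sketch with a hypothetical three-product catalog and a hypothetical `keyword_search` helper, not how any particular search engine works.

```python
# Hypothetical mini catalog for illustration only.
PRODUCTS = [
    "slim-fit jeans in navy",
    "skinny jeans in black",
    "relaxed denim trousers",
]


def keyword_search(query, products):
    """Return products that contain every query word as an exact substring."""
    words = query.lower().split()
    return [p for p in products if all(w in p for w in words)]


print(keyword_search("jeans", PRODUCTS))     # misses the denim trousers (synonym)
print(keyword_search("genes", PRODUCTS))     # typo: no results
print(keyword_search("skinnies", PRODUCTS))  # slang: no results
```

None of these queries is unreasonable from a customer's point of view, yet the exact-match logic either drops relevant products or returns nothing at all.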

Overcoming Keyword Limitations with Vectorization

By vectorizing data, we can overcome these limitations. Vectors allow us to create multi-dimensional spaces where products are not just isolated keywords but points in relation to others, capturing the subtleties of meaning, use cases, and customer inquiries.

This is why our online jeans store will use the power of vectorization for an improved and intuitive shopping experience.

Conclusion

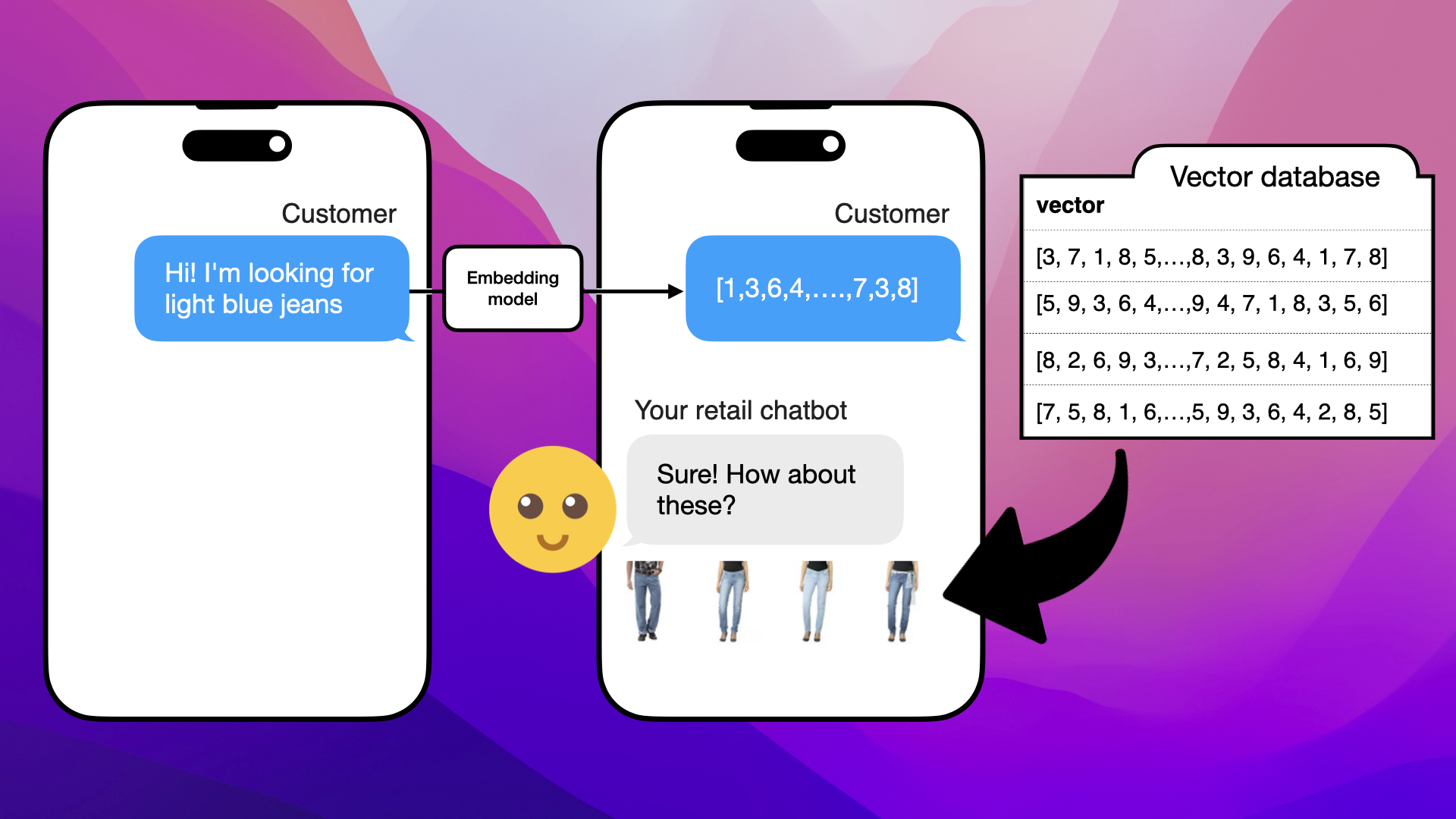

So when we have our vectors, we can upsert them to our vector database and let our chatbot use the vectors to recommend jeans:

Upserting vectors of jeans data into the vector database

Behind the scenes, we're also vectorizing the customer inquiry. For instance, we'll take "Hi! I'm looking for light blue jeans" and turn that into a vector, then match that vector to product vectors in the vector database:

Vectorizing customer inquiries and matching them with product vectors
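Upserting and querying can be sketched with a tiny in-memory store. `ToyVectorStore`, the product IDs, and the three-dimensional vectors (lightness, blueness, greyness) are all assumptions made for this example. A real vector database adds indexing, persistence, and approximate search on top of the same basic idea.

```python
import math


class ToyVectorStore:
    """In-memory stand-in for a vector database (illustration only)."""

    def __init__(self):
        self._rows = {}  # item_id -> (vector, metadata)

    def upsert(self, item_id, vector, metadata):
        """Insert a new item, or overwrite it if the id already exists."""
        self._rows[item_id] = (vector, metadata)

    def query(self, vector, top_k=2):
        """Return the top_k items ranked by cosine similarity to `vector`."""
        def cosine(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            return dot / (math.hypot(*a) * math.hypot(*b))

        scored = [
            (cosine(vector, vec), item_id, meta)
            for item_id, (vec, meta) in self._rows.items()
        ]
        scored.sort(key=lambda row: row[0], reverse=True)
        return [(item_id, meta) for _, item_id, meta in scored[:top_k]]


store = ToyVectorStore()
store.upsert("j1", [0.9, 0.9, 0.0], {"name": "light blue jeans"})
store.upsert("j2", [0.1, 0.9, 0.0], {"name": "dark blue jeans"})
store.upsert("j3", [0.5, 0.1, 0.9], {"name": "grey jeans"})

# Hypothetical vector for "Hi! I'm looking for light blue jeans"
inquiry_vector = [0.8, 0.9, 0.0]
neighbors = store.query(inquiry_vector, top_k=2)
print(neighbors)
```

The customer inquiry and the products must be embedded the same way, otherwise the similarity scores are meaningless.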

The reason I wanted to talk about vectorization in depth is that in a naive RAG pipeline, the RAG part usually involves querying some kind of vector database. So you have an input, the RAG process, and finally the response:

The naive RAG process: input, vector database, and response

So far, we've covered RAG (combining retrieved data with what an LLM already knows) and vectorization (creating a numerical representation of a text, an image, or both), which is a central part of RAG. Now, let's discuss how agents solve the limitations of naive RAG.

The Rise of Agents

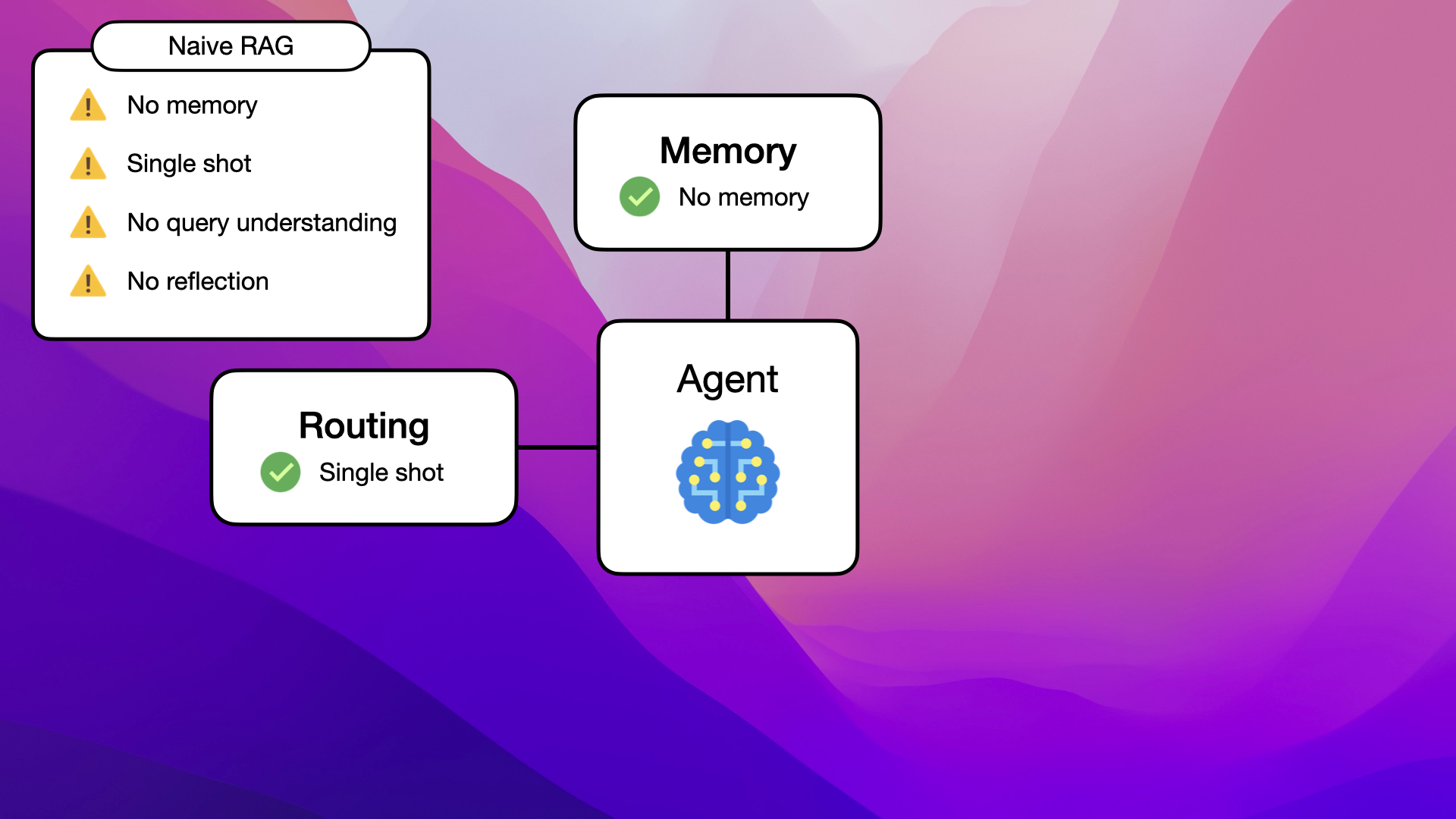

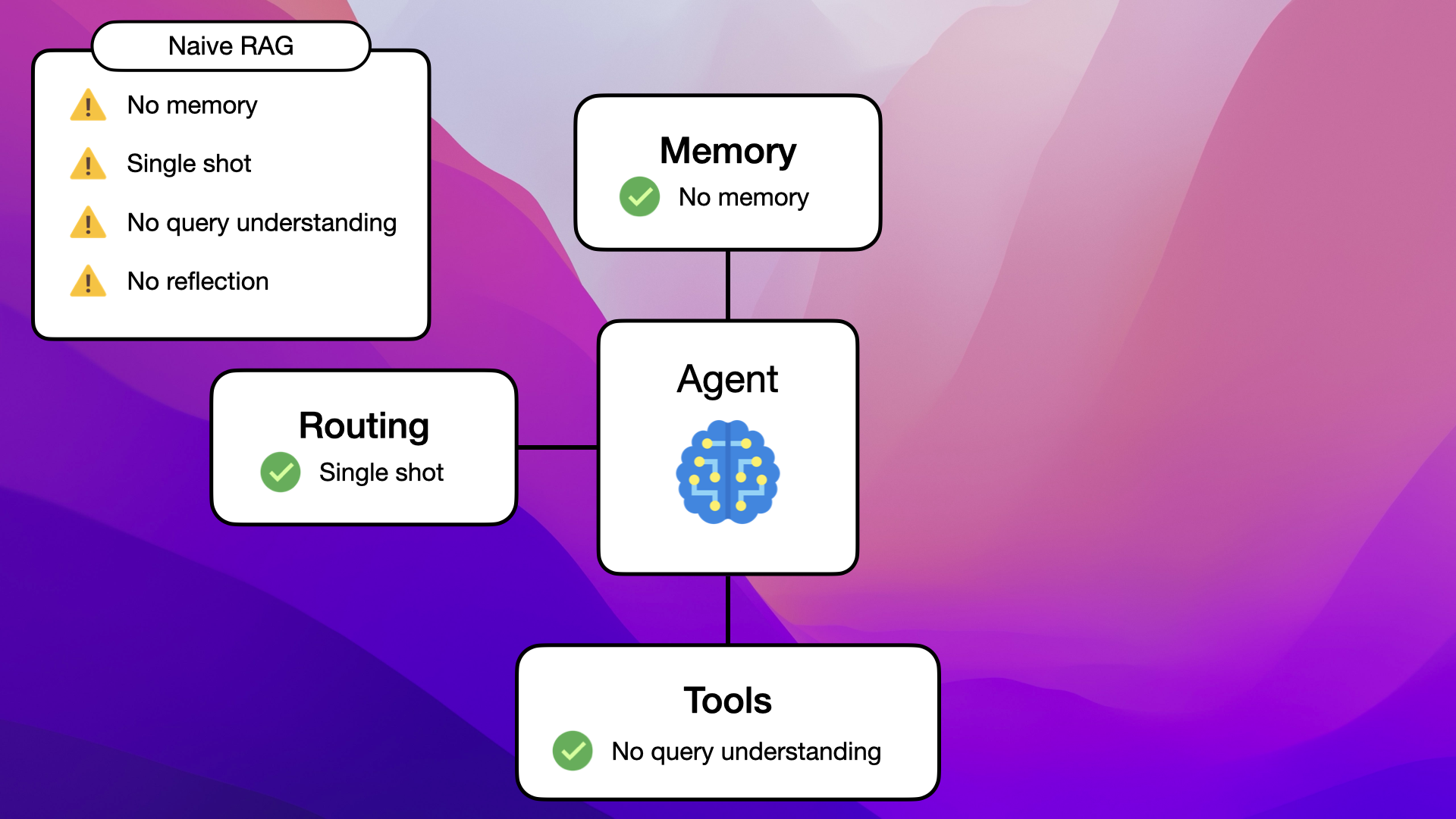

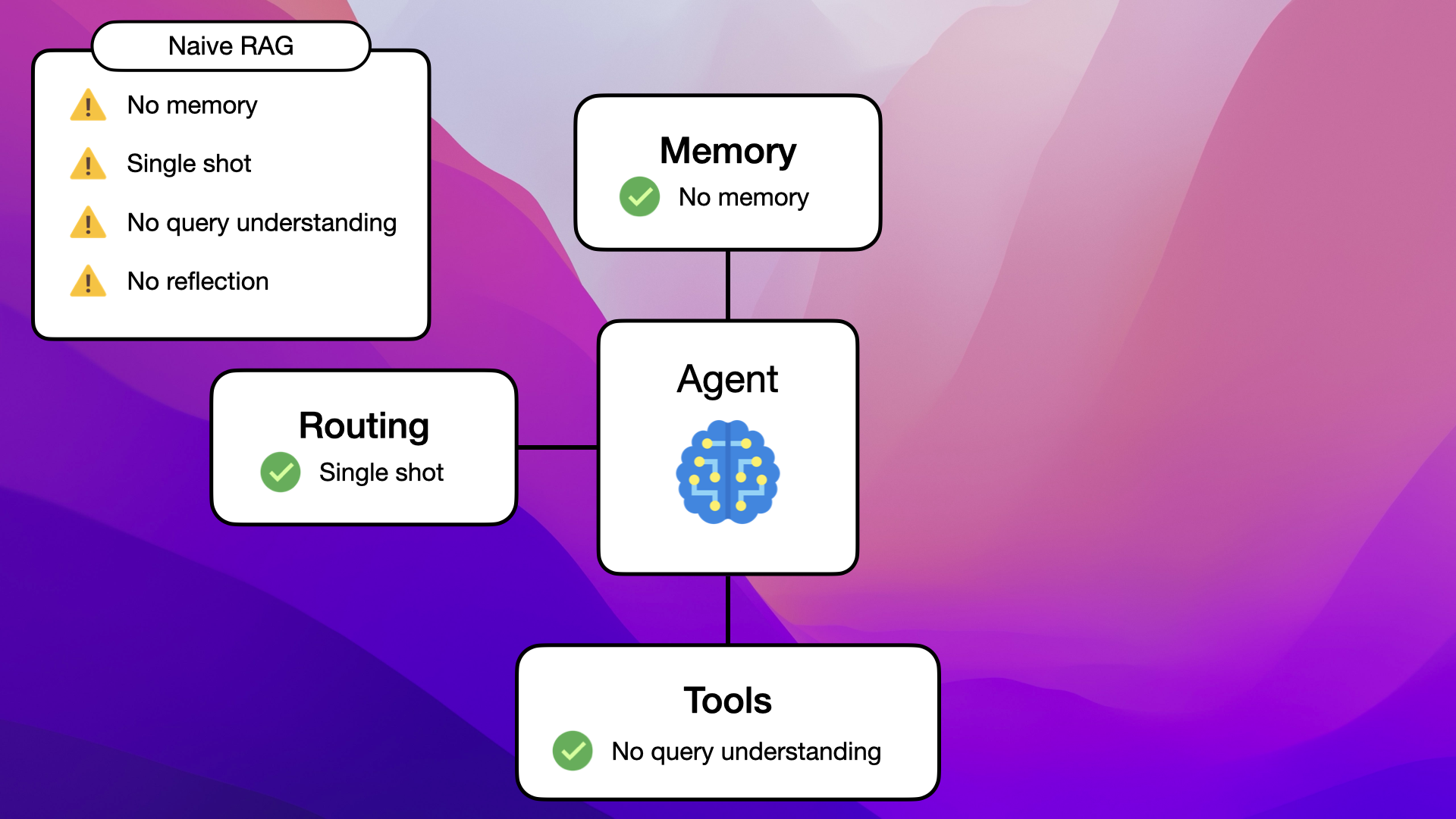

Let's revisit the 4 main limitations of naive RAG and examine how agents are solving the limitations:

- No memory

- Single shot

- No query understanding

- No reflection


These limitations are the reason we're seeing the rise of agents and agents replacing naive RAG pipelines. All these limitations are addressed and more or less solved with agents.

Agent Pipelines

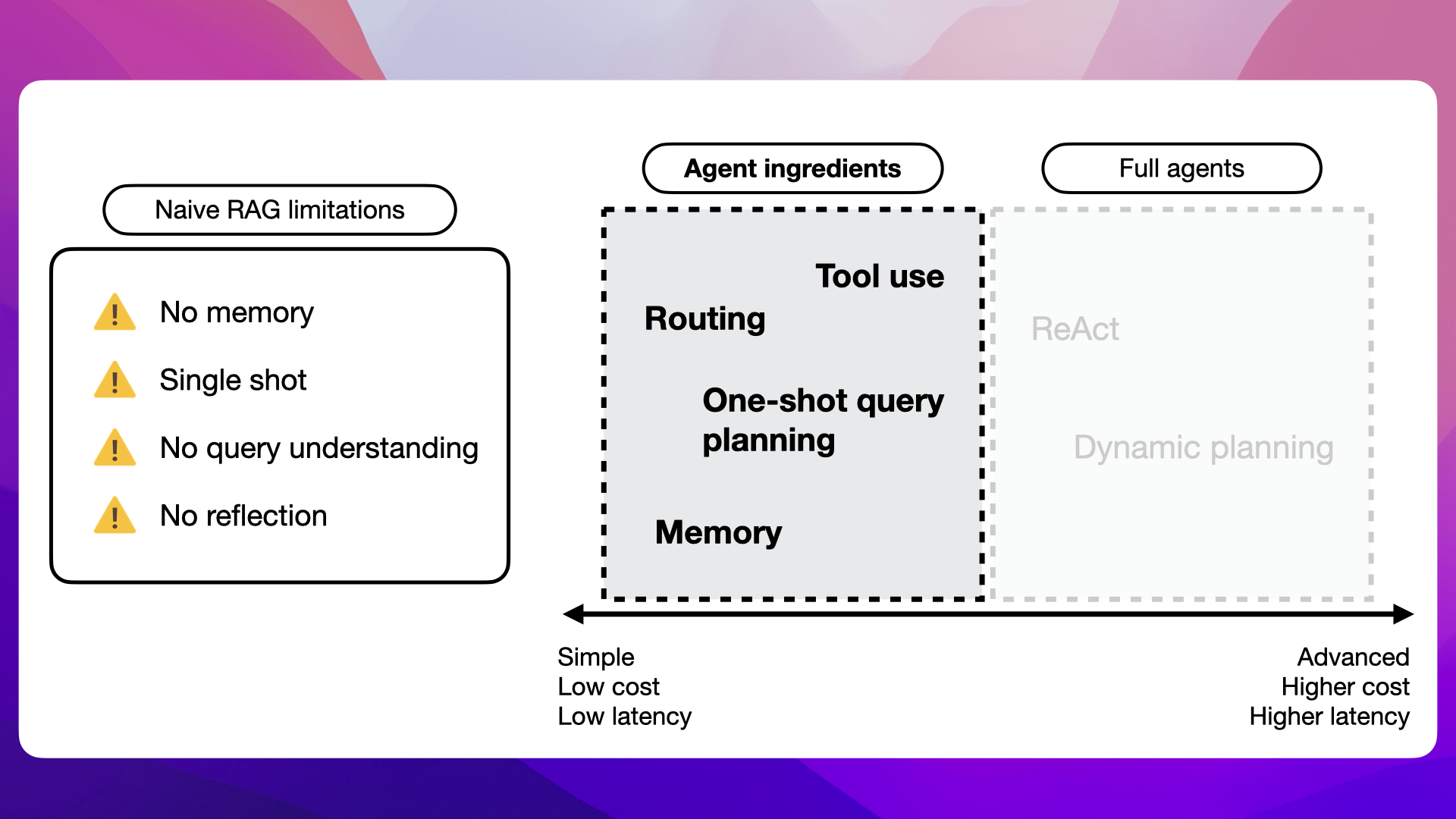

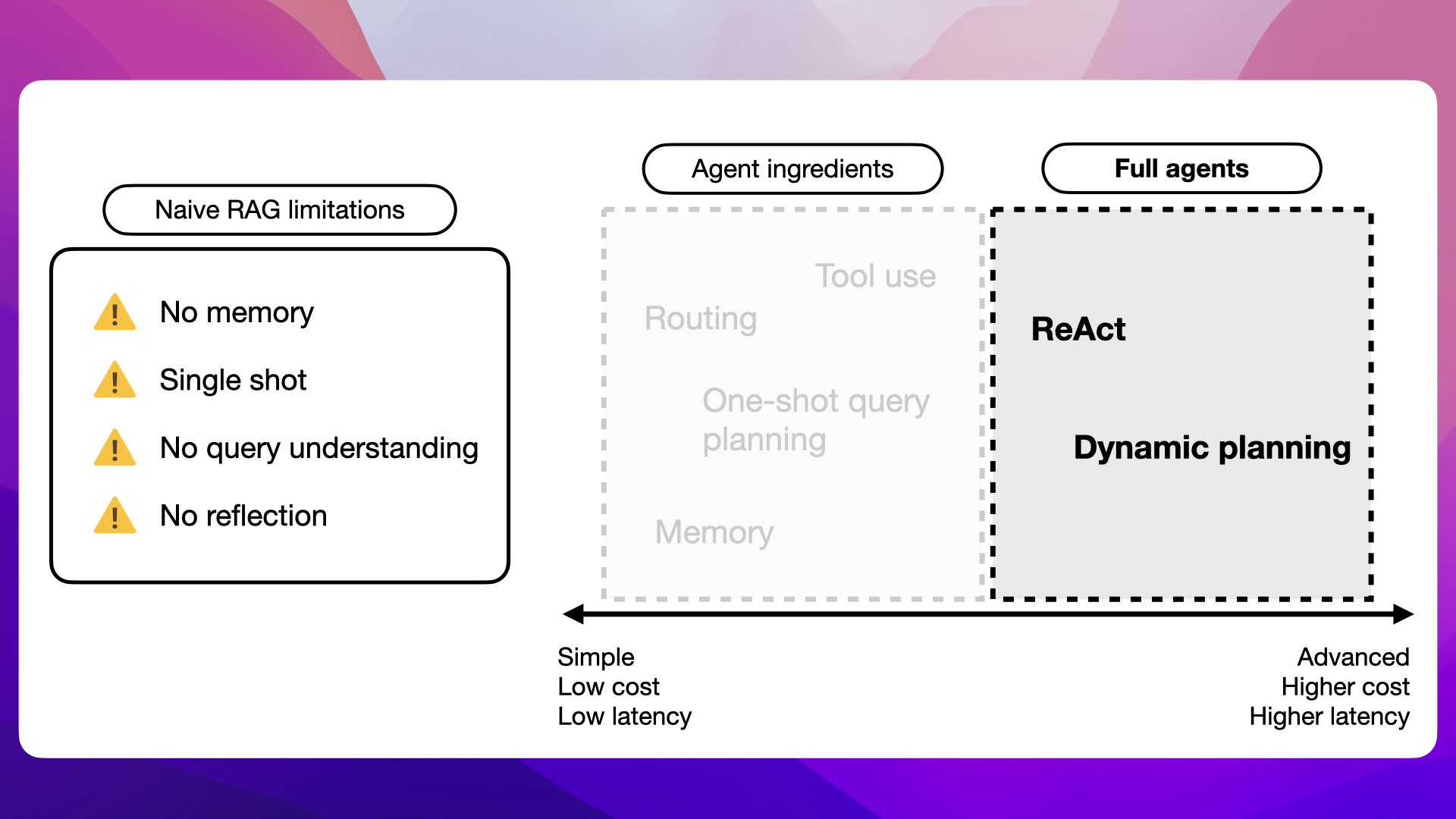

At a high level, when we talk about agents, they span from simple pipelines that are low cost and low latency, all the way to advanced applications that are more costly to run and have significantly higher latency:

Agent pipelines span from low-cost and low latency to advanced and costly applications

Further, we can split agentic pipelines into two distinct categories: agent ingredients and full agents.

Agent Ingredients

The first category, "agent ingredients", refers to pipelines or products that have basic agentic behavior, such as memory, some ability for query planning, and access to tools:

Agent Ingredients: Basic elements of agent behavior including memory, query planning, and tool use

Full Agents

The other category is full agents, which are applications capable of dynamic planning and autonomously deciding how to solve the problem they are given:

Full Agents: Advanced applications with capabilities for dynamic planning and autonomous problem-solving

How Agents Solve RAG Limitations

Let's first look at how the limitations of naive RAGs are solved with agents. We'll start with a quick overview before tying this back to the conversations we had between a customer and naive RAGs.

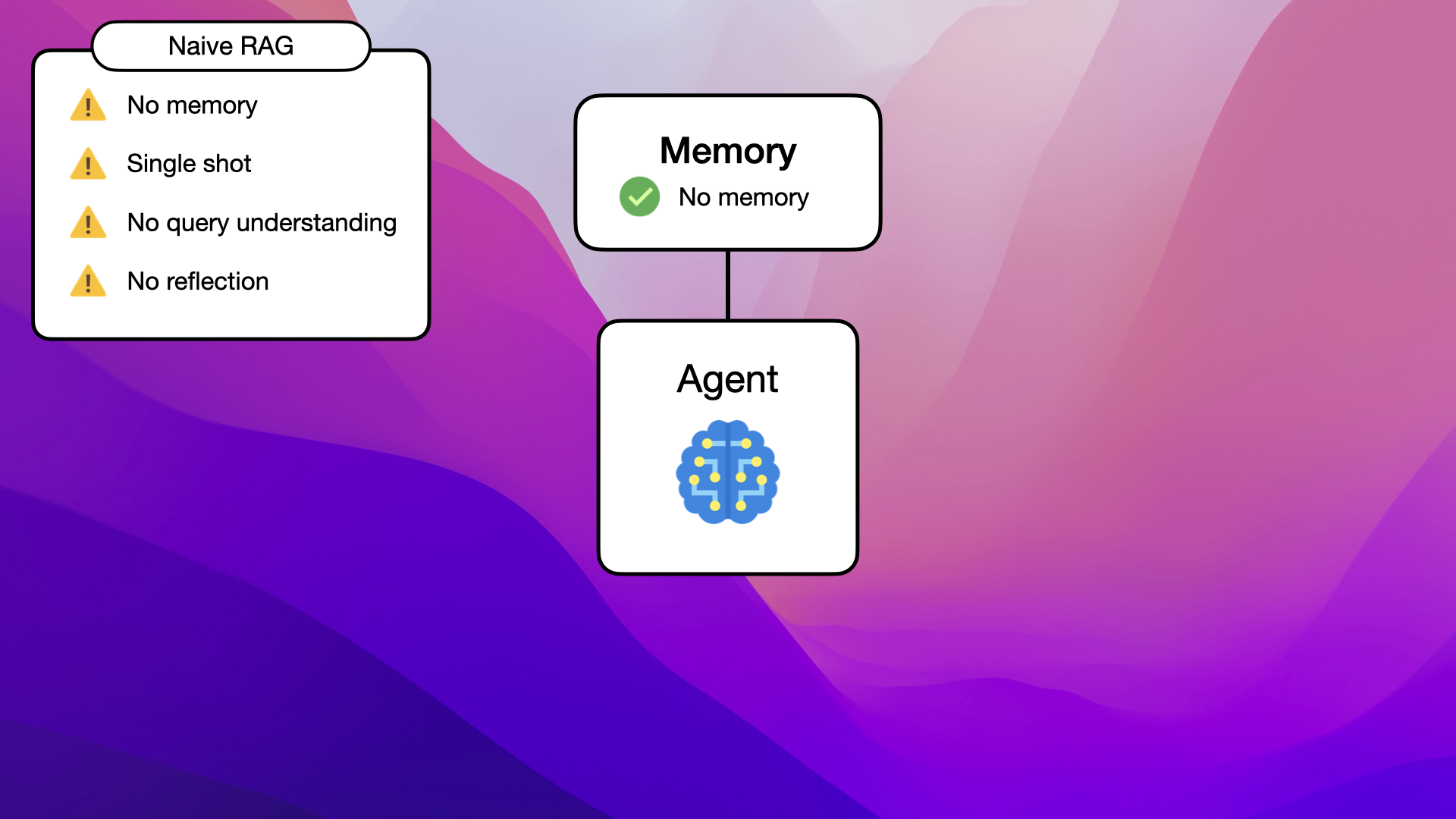

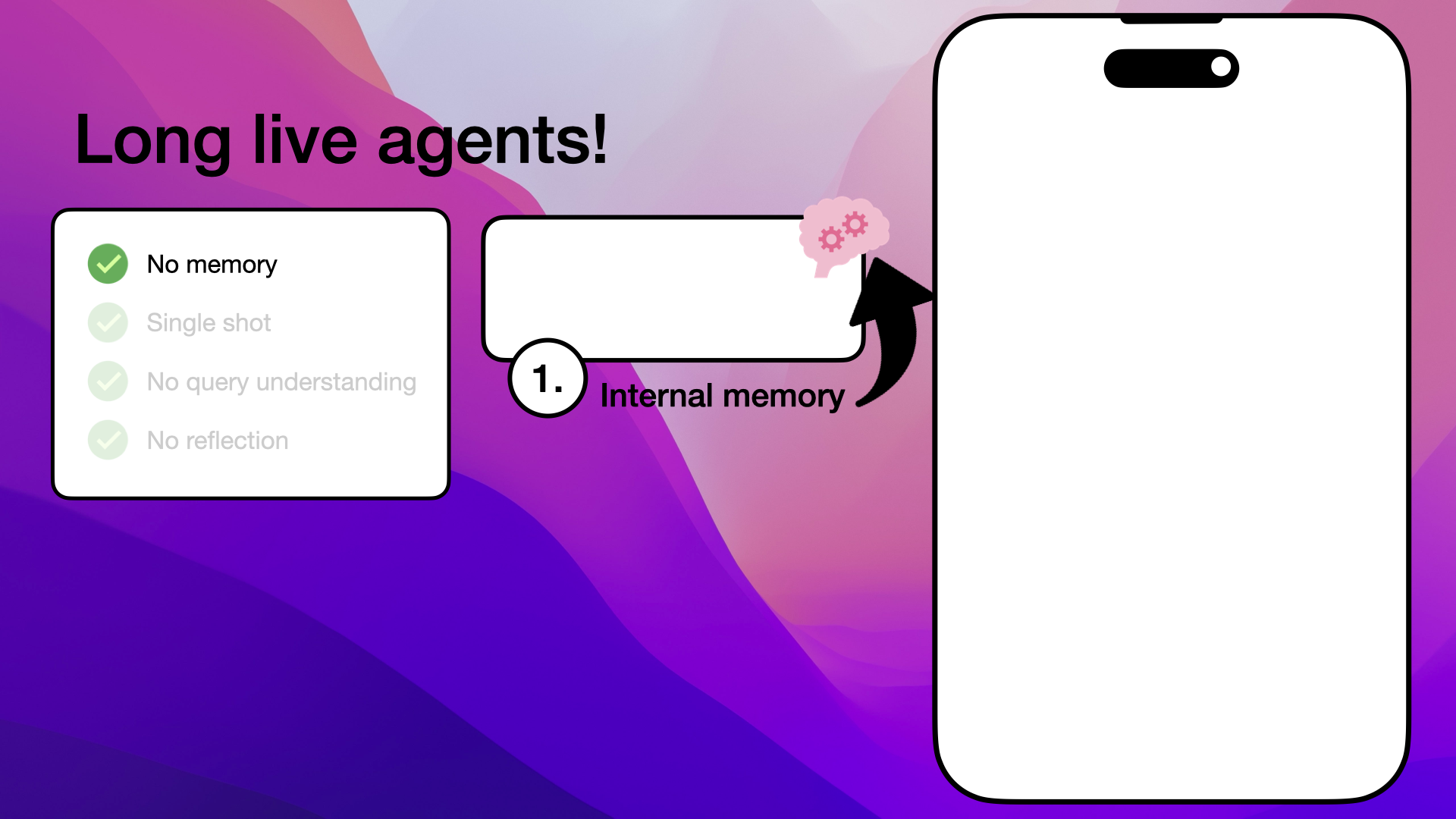

1. No Memory

Starting with memory, agents have access to conversation memory, allowing them to revisit what has been said and done earlier in the conversation with a user:

Agents utilize conversation memory to maintain context throughout interactions

2. Single Shot

The single-shot limitation is solved with routing, where the agent can choose to use internal tools to better understand a challenge it is given:

Agents use routing to deploy internal tools for better understanding and problem-solving

3. No Query Understanding

The lack of query understanding in naive RAGs is solved by giving an agent access to different kinds of external tools:

Agents enhance query understanding by accessing various external tools

4. No Reflection

And finally, the lack of reflection is solved by allowing the agent to plan how it wants to solve the problem you're giving it:

Agents solve problems by dynamically planning their approach

Let's go back to the tricky customer cases the naive RAG struggled with and see how an agent would tackle each challenge.

How Agents Solve Naive RAG Limitations

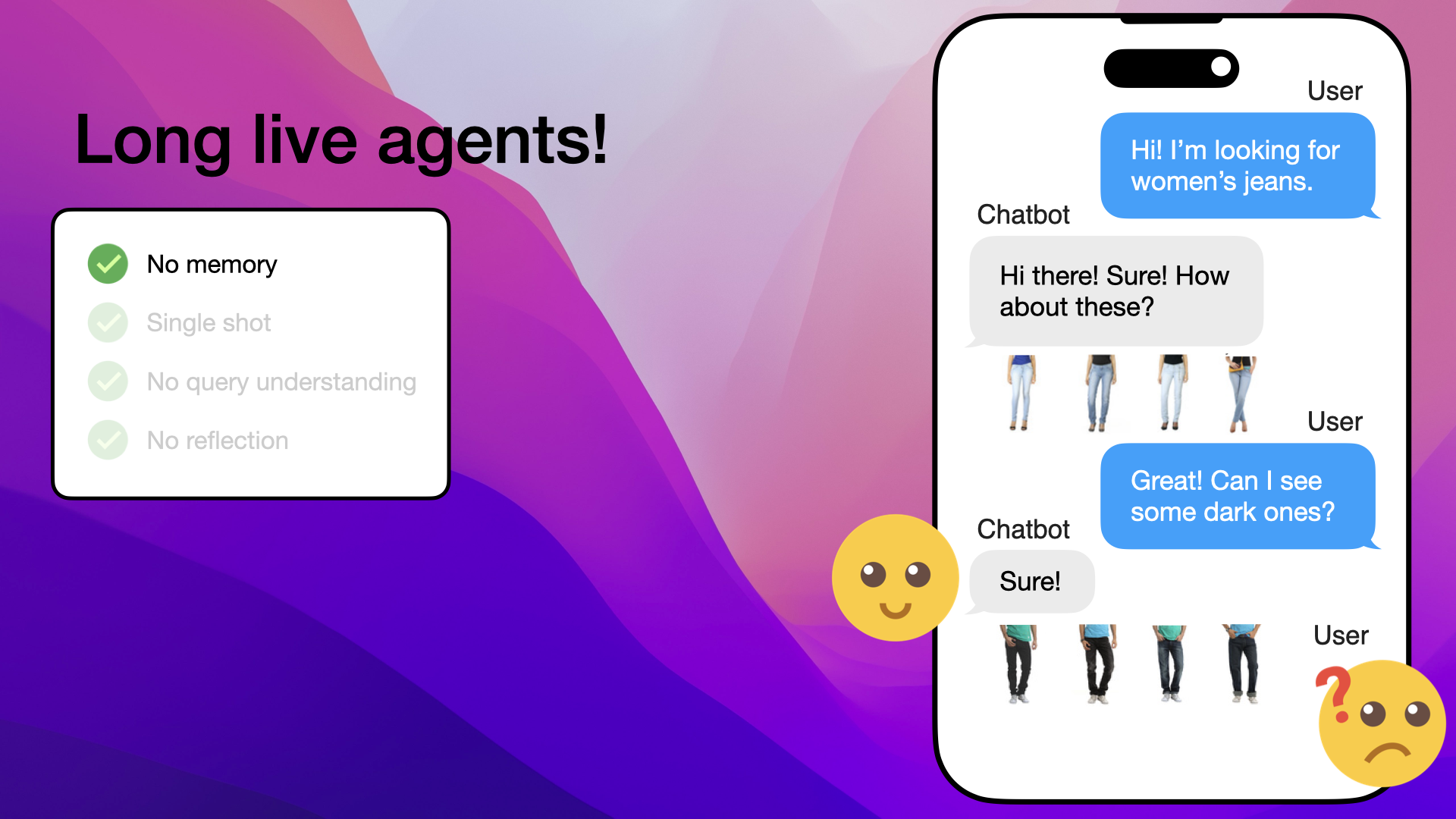

1. The Solution to the No Memory Limitation

The first limitation we encountered was the lack of memory in naive RAGs. In this example, the naive RAG is unable to remember anything from the conversation and therefore recommends men's jeans, even though the customer is clearly looking for women's jeans:

Naive RAG fails to recall previous context, recommending men's jeans instead of women's jeans

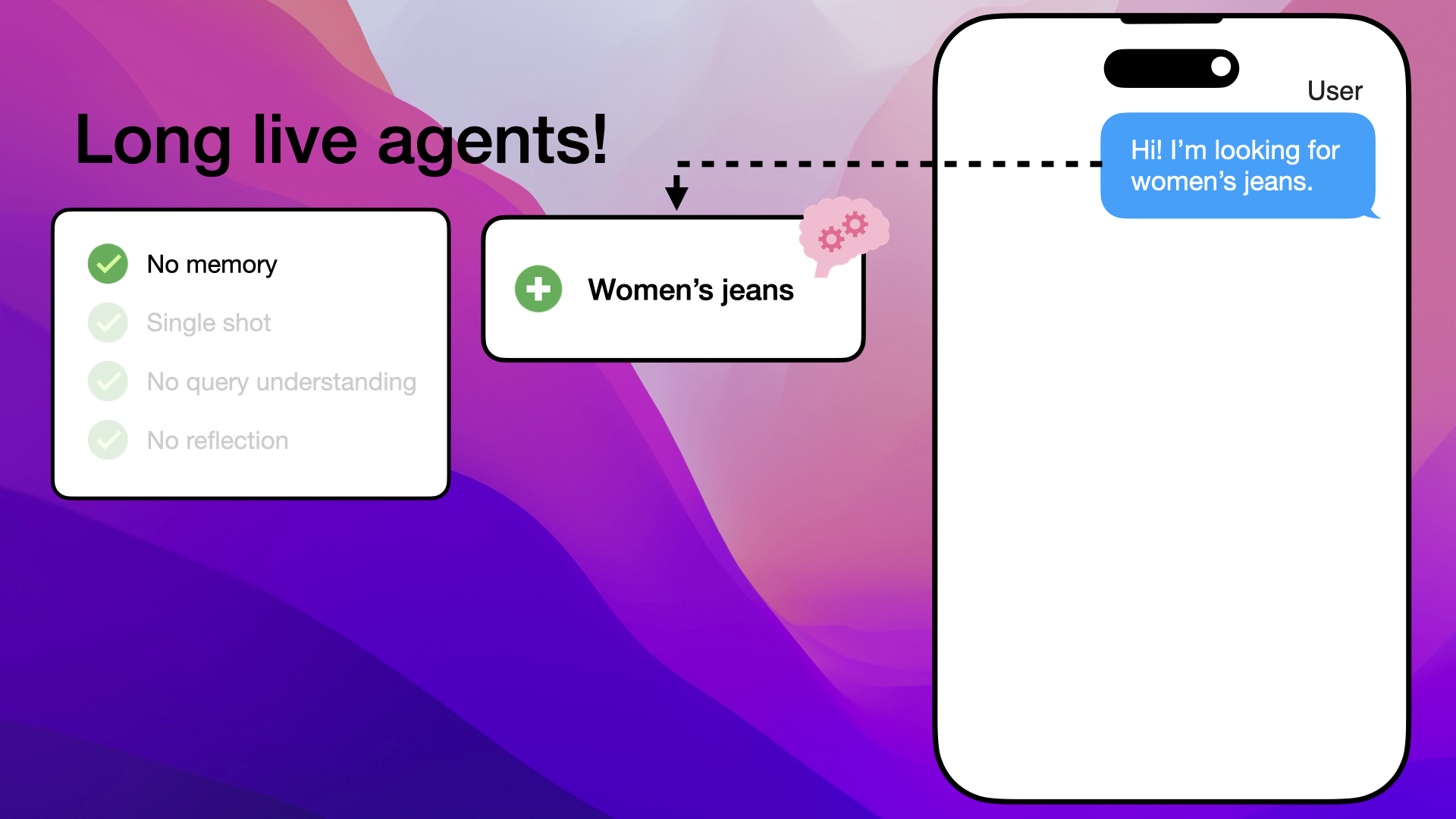

When it comes to agents, the conversation memory issue is solved by having an internal memory to which each message in the conversation gets added:

Agents utilize internal memory to store each message in the conversation

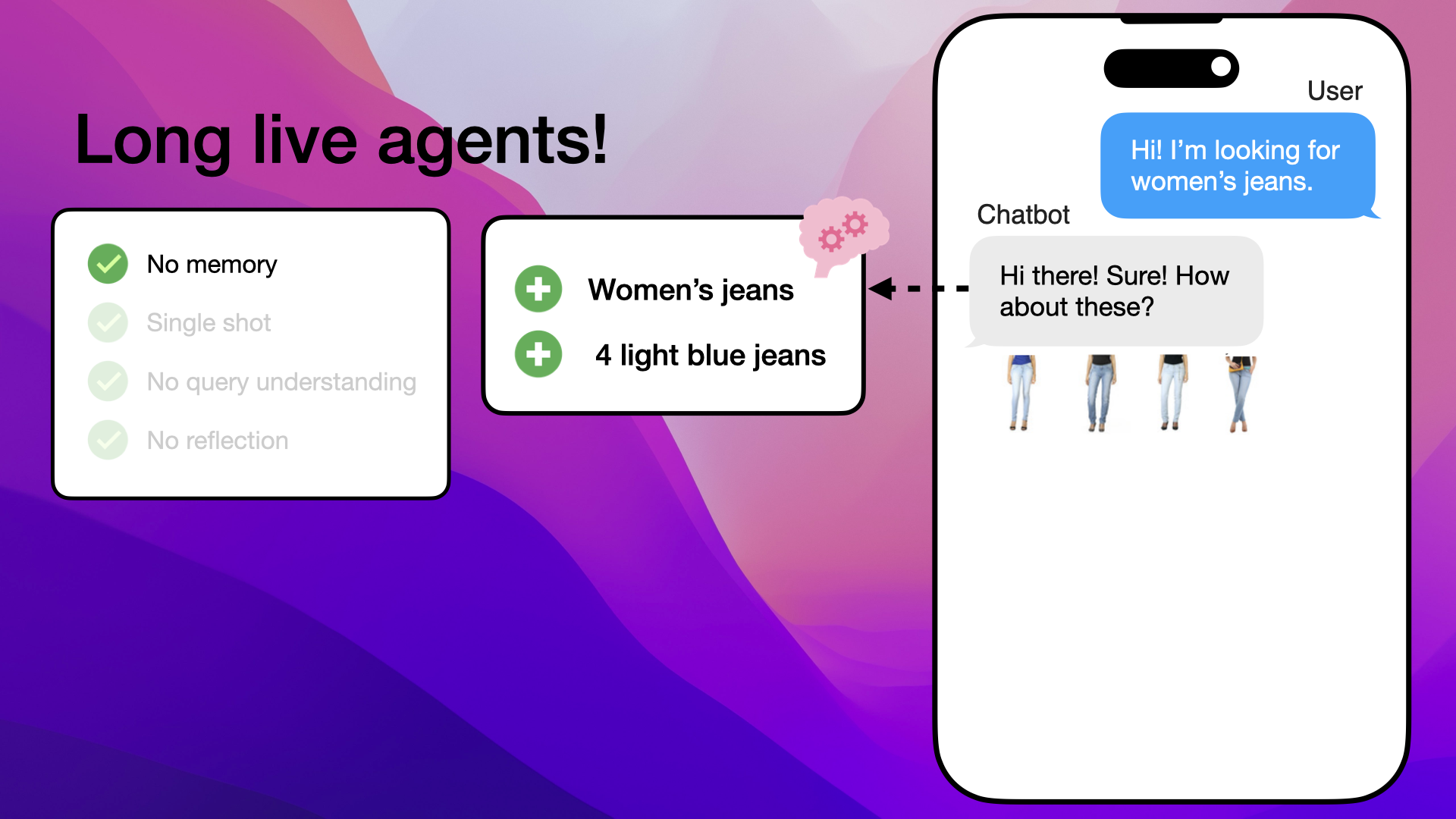

We had a customer saying "Hi! I'm looking for women's jeans". This user input would then be saved in the internal memory of the agent:

Customer's request for women's jeans is stored in the agent's internal memory.

The agent would then respond with a set of jeans, and these recommended jeans would also be saved to the agent's memory:

Agent's response and recommended products are saved to memory

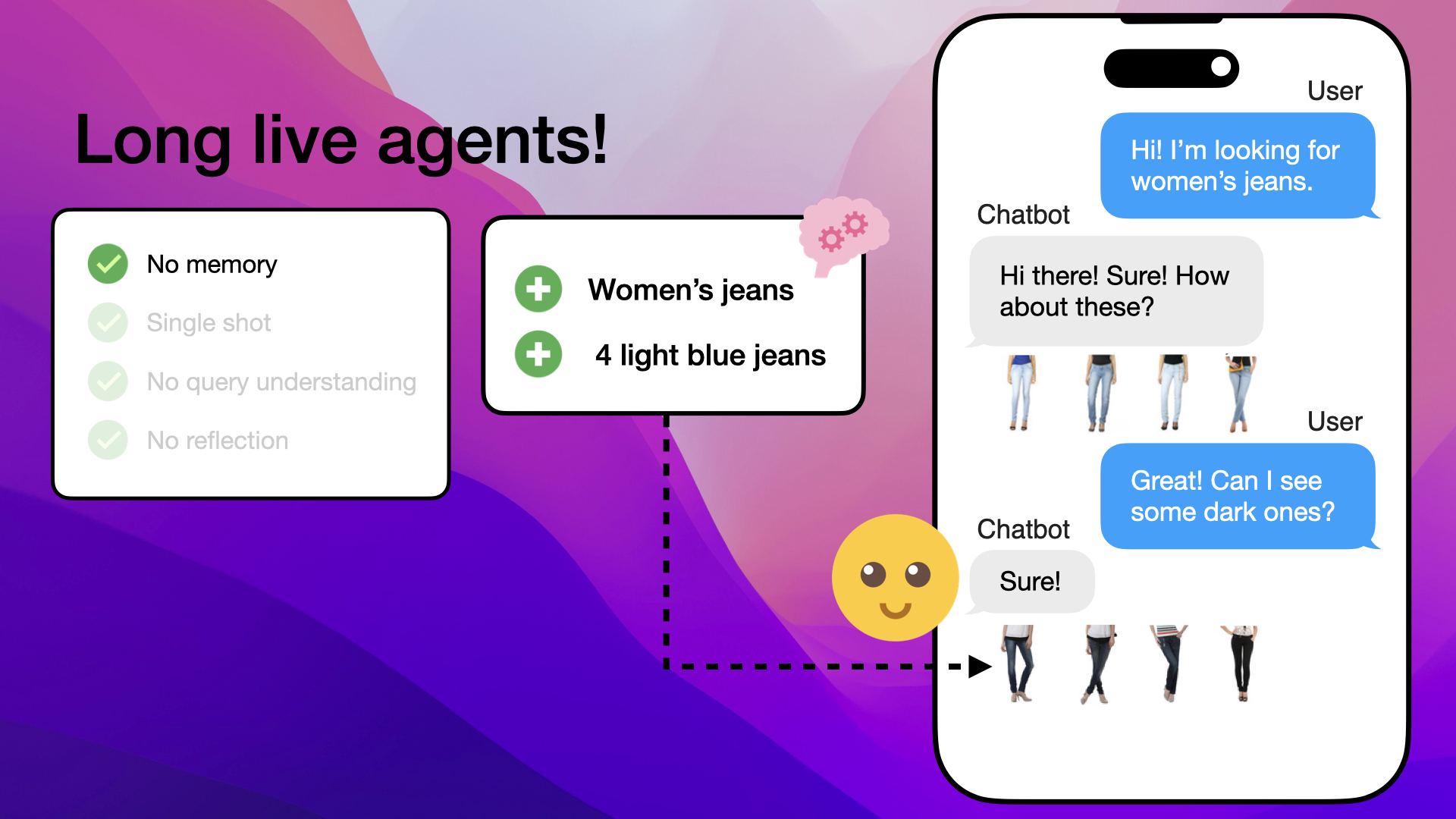

This allows the agent to be aware of everything that has been said and also keeps track of the products it pulled from the vector database.

So, when the customer says, "Great! Can I see some dark ones?" the agent knows from the previous messages in its memory that the customer is indeed looking for women's jeans.

It also knows that it showed the customer four light blue pairs, so the next message would naturally be to show four dark blue women's jeans:

Agent uses memory to provide contextually relevant recommendations, showing dark blue women's jeans

Now, what is memory in agents really?

Memory in agents is essentially a chat store that saves the conversation history. This chat store can be implemented in various ways, such as in-memory storage, Redis, or even non-relational databases like DynamoDB.

The key point is that the chat store maintains the sequence of messages, enabling the agent to refer back to previous interactions and provide contextually relevant responses.
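Stripped of any framework, a chat store can be sketched as a dictionary of message lists keyed by user or conversation. `ToyChatStore` below is a hypothetical, in-memory illustration of the concept, not the LlamaIndex implementation.

```python
from collections import defaultdict


class ToyChatStore:
    """Keeps an ordered message history per conversation key (illustration only)."""

    def __init__(self):
        self._store = defaultdict(list)

    def add_message(self, key, role, content):
        """Append a message to the end of the conversation."""
        self._store[key].append({"role": role, "content": content})

    def get_messages(self, key):
        """Return a copy of the conversation, oldest message first."""
        return list(self._store[key])


chat_store = ToyChatStore()
chat_store.add_message("user1", "user", "Hi! I'm looking for women's jeans")
chat_store.add_message("user1", "assistant", "Here are four light blue women's jeans.")
chat_store.add_message("user1", "user", "Great! Can I see some dark ones?")

# The whole history, not just the last message, is handed to the agent.
history = chat_store.get_messages("user1")
for message in history:
    print(message["role"] + ": " + message["content"])
```

Because the first message is still in the history, the agent has what it needs to interpret "dark ones" as dark women's jeans.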

Memory Code Example

Here's a basic example using LlamaIndex Chat Stores to show how easy it is to implement memory functionality in agents:

    from llama_index.core.storage.chat_store import SimpleChatStore
    from llama_index.core.memory import ChatMemoryBuffer
    from llama_index.agent.openai import OpenAIAgent  # from the llama-index-agent-openai package

    # Initialize a simple in-memory chat store
    chat_store = SimpleChatStore()

    # Create a chat memory buffer backed by the chat store
    chat_memory = ChatMemoryBuffer.from_defaults(
        token_limit=3000,
        chat_store=chat_store,
        chat_store_key="user1",  # one key per user or conversation
    )

    # Integrate the memory into an agent (`tools` is a list of tools defined elsewhere)
    agent = OpenAIAgent.from_tools(tools, memory=chat_memory)

    # OR into a chat engine (`index` is an existing index defined elsewhere)
    chat_engine = index.as_chat_engine(memory=chat_memory)

    # Persist the chat store to disk
    chat_store.persist(persist_path="chat_store.json")

LlamaIndex is a handy open source tool for developers working with large language models. It helps you connect different data sources, like PDFs, databases, and apps, with your LLMs.

With LlamaIndex, you can easily set up memory and other features in your agents. It offers various tools to handle data, making it simple to work with different formats and applications.

This versatility allows you to create different types of indexes, like vector, tree, or keyword indexes, to meet your specific needs. LlamaIndex makes it easy to build smart, context-aware applications using large language models.

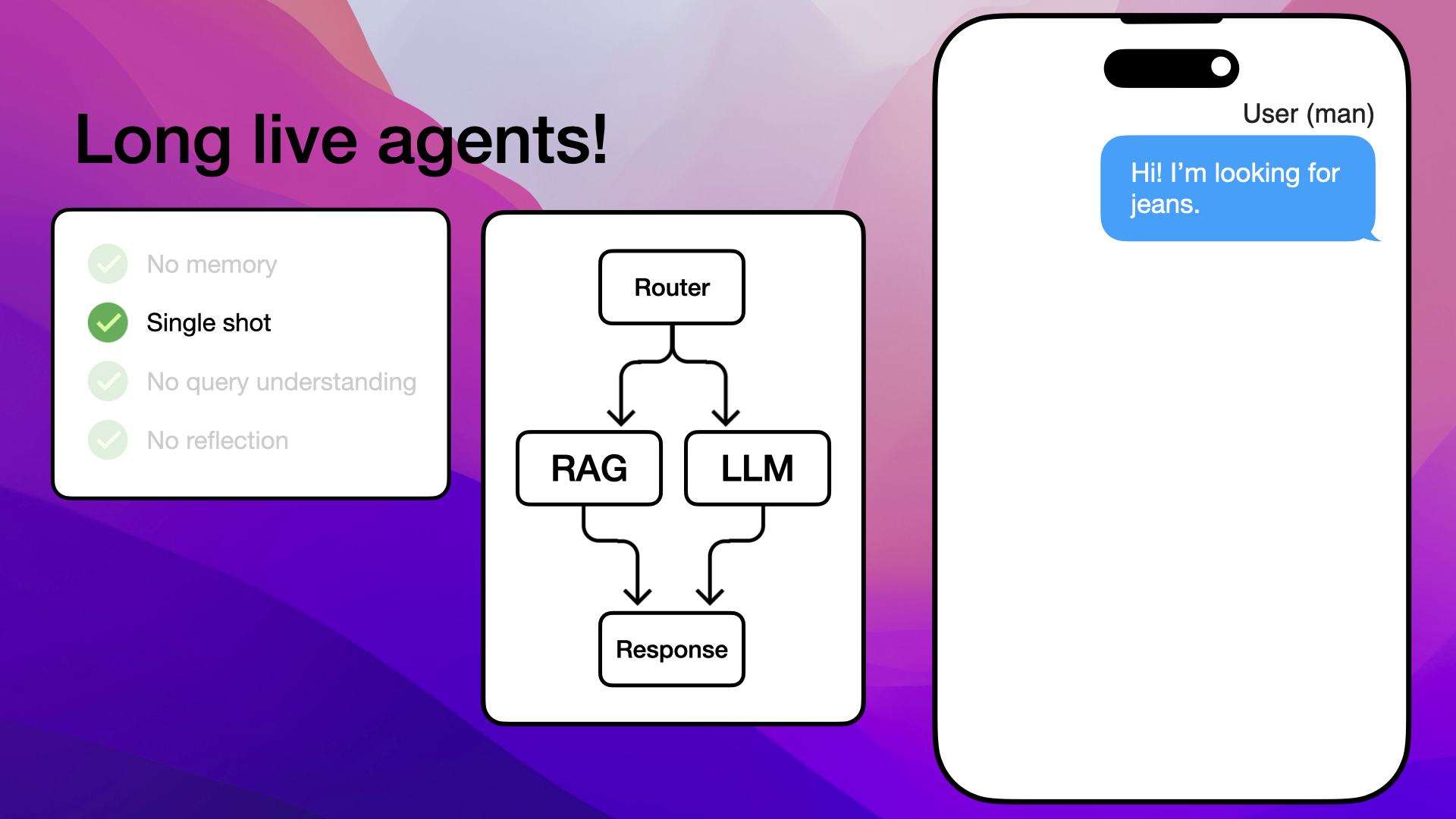

2. The Solution to the Single Shot Limitation

The next limitation we examined was single-shot generation. In this example, the naive RAG never asked for more information and just assumed the user, in this case a man, was looking for women's jeans:

Naive RAG assumes the user is looking for women's jeans without asking for more information

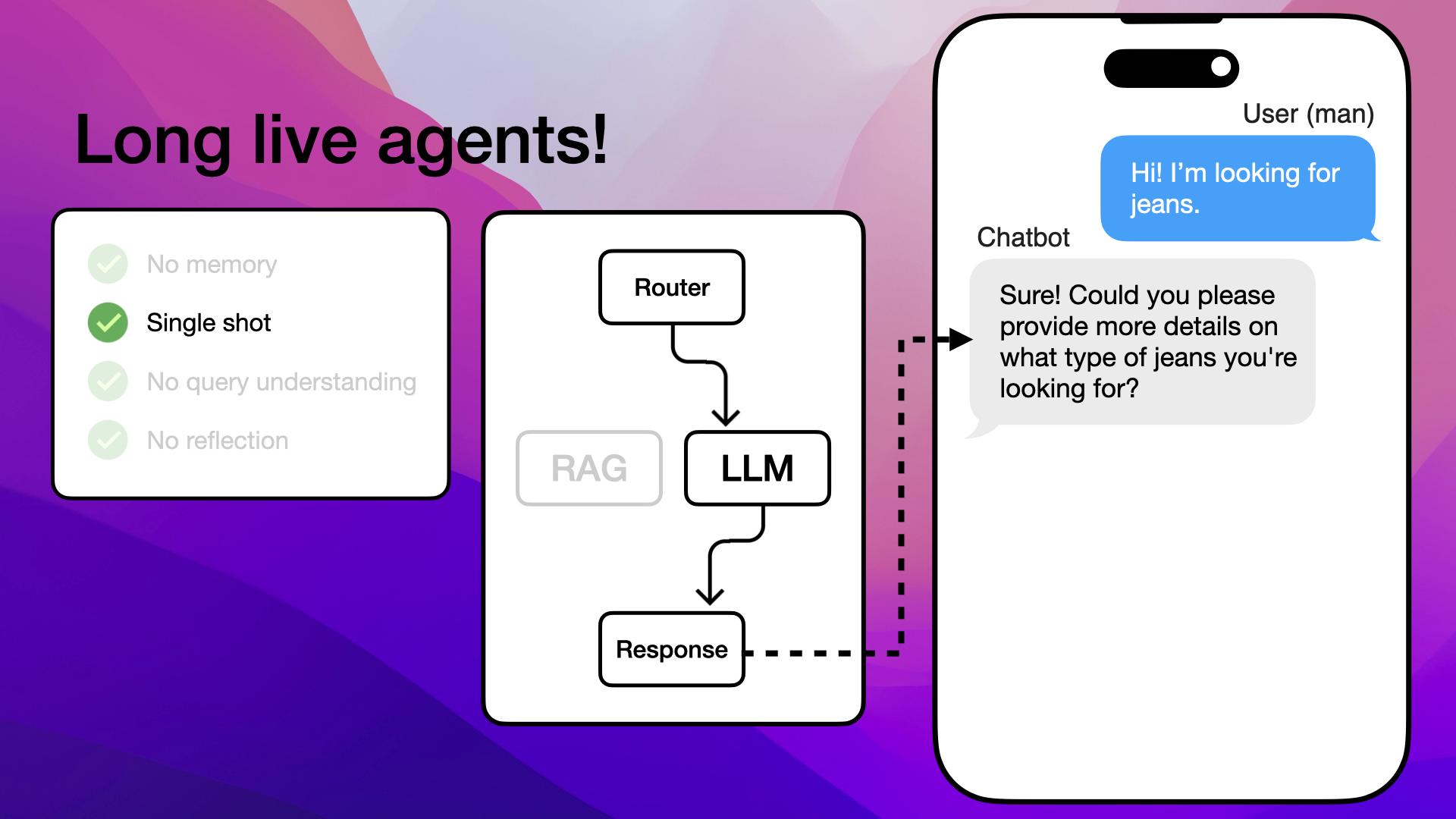

With agents, however, we solve this with routing. By using a router, the agent can choose to ask for more information when necessary, so instead of immediately looking for suitable products, it can decide to ask for more information:

Agent can use routing to ask for more information before making recommendations

As seen, the agent can choose to ask for more information instead of directly pulling products from the vector database:

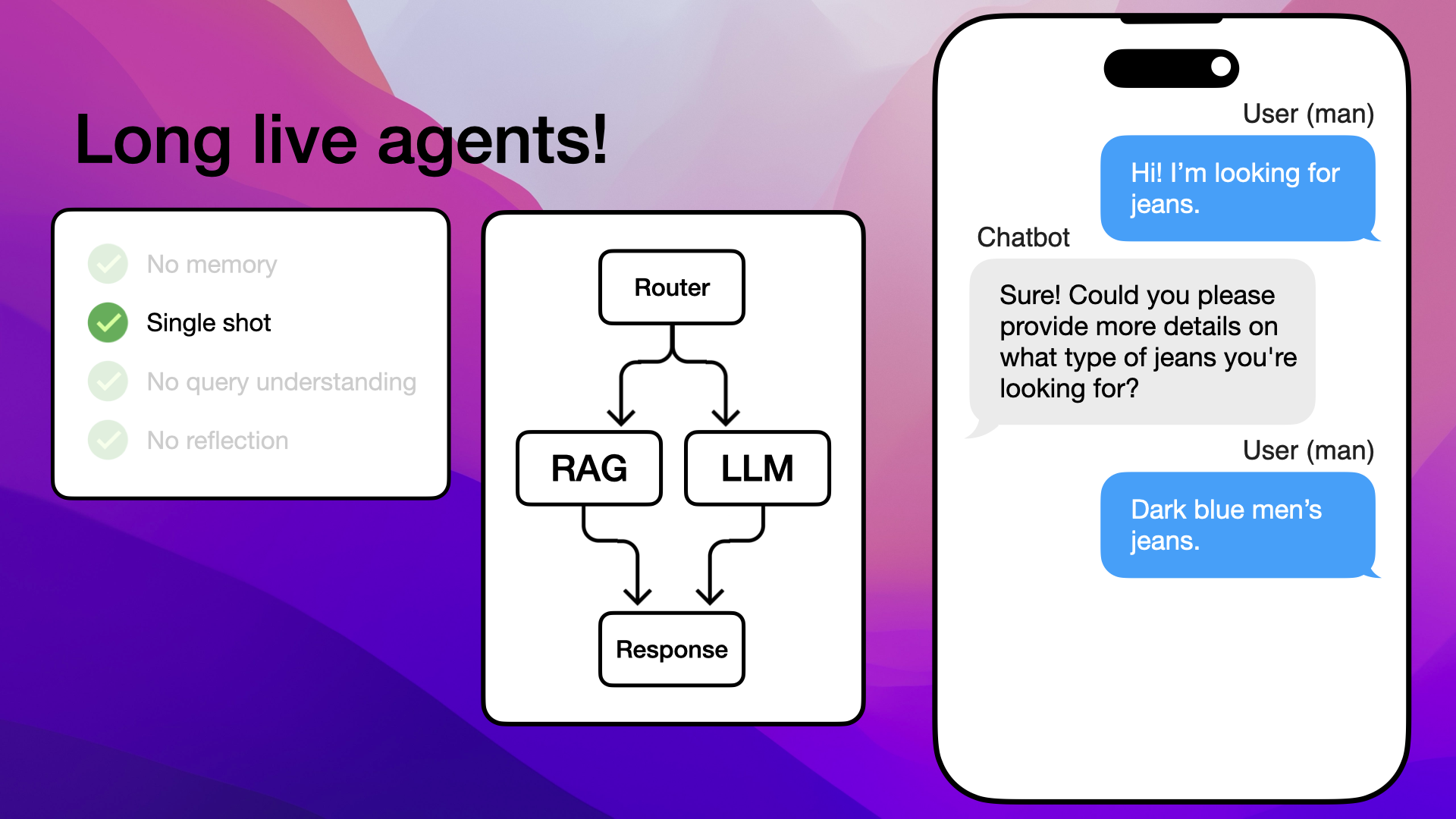

Agent inquires further to gather more details from the customer

This allows the customer to specify what they're looking for:

Customer specifies what they're looking for

This provides the agent with enough information to pull good recommendations and respond accurately:

Agent uses detailed customer input to offer accurate product recommendations

This way of asking the user clarifying questions is called a multi-turn conversation.

So, single-shot generation is solved with routing, where the agent can choose to use internal tools to better understand the challenge it is given.
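The routing decision can be sketched as a function that either asks for clarification or proceeds to search. In a real agent the LLM makes this choice. The rule-based `route` function and the `REQUIRED_HINTS` table below are hypothetical simplifications that only illustrate the control flow.

```python
# Hypothetical attributes we want before searching, with words that signal them.
REQUIRED_HINTS = {
    "gender": ["women's", "men's"],
    "color": ["light", "dark", "blue", "black", "grey"],
}


def route(message):
    """Decide whether to ask a clarifying question or search for products."""
    words = [w.strip("!?.,") for w in message.lower().split()]
    missing = [
        attribute
        for attribute, hints in REQUIRED_HINTS.items()
        if not any(hint in words for hint in hints)
    ]
    if missing:
        return ("ask_clarification", missing)
    return ("search_products", [])


print(route("Hi! I'm looking for jeans"))
# ('ask_clarification', ['gender', 'color'])
print(route("I'm looking for men's dark blue jeans"))
# ('search_products', [])
```

The important part is the branch itself: a naive RAG pipeline always takes the search path, while an agent can take the other one and start a multi-turn conversation.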

Using Tools in Agents

To handle the single-shot limitation, agents use tools to gather more information. These tools can be simple functions or more complex query engines.

Here's an example of creating a tool with LlamaIndex Agent Tools:

    from llama_index.core.agent import ReActAgent
    from llama_index.core.tools import FunctionTool

    # Define a simple function to be used as a tool
    def get_weather(location: str) -> str:
        """Useful for getting the weather for a given location."""
        ...

    # Convert the function into a tool; the docstring serves as the tool description
    tool = FunctionTool.from_defaults(get_weather)

    # Integrate the tool into an agent (`llm` is an LLM instance defined elsewhere)
    agent = ReActAgent.from_tools([tool], llm=llm, verbose=True)

LlamaIndex makes it easy to create and manage tools, helping agents to ask for more information and make better decisions.

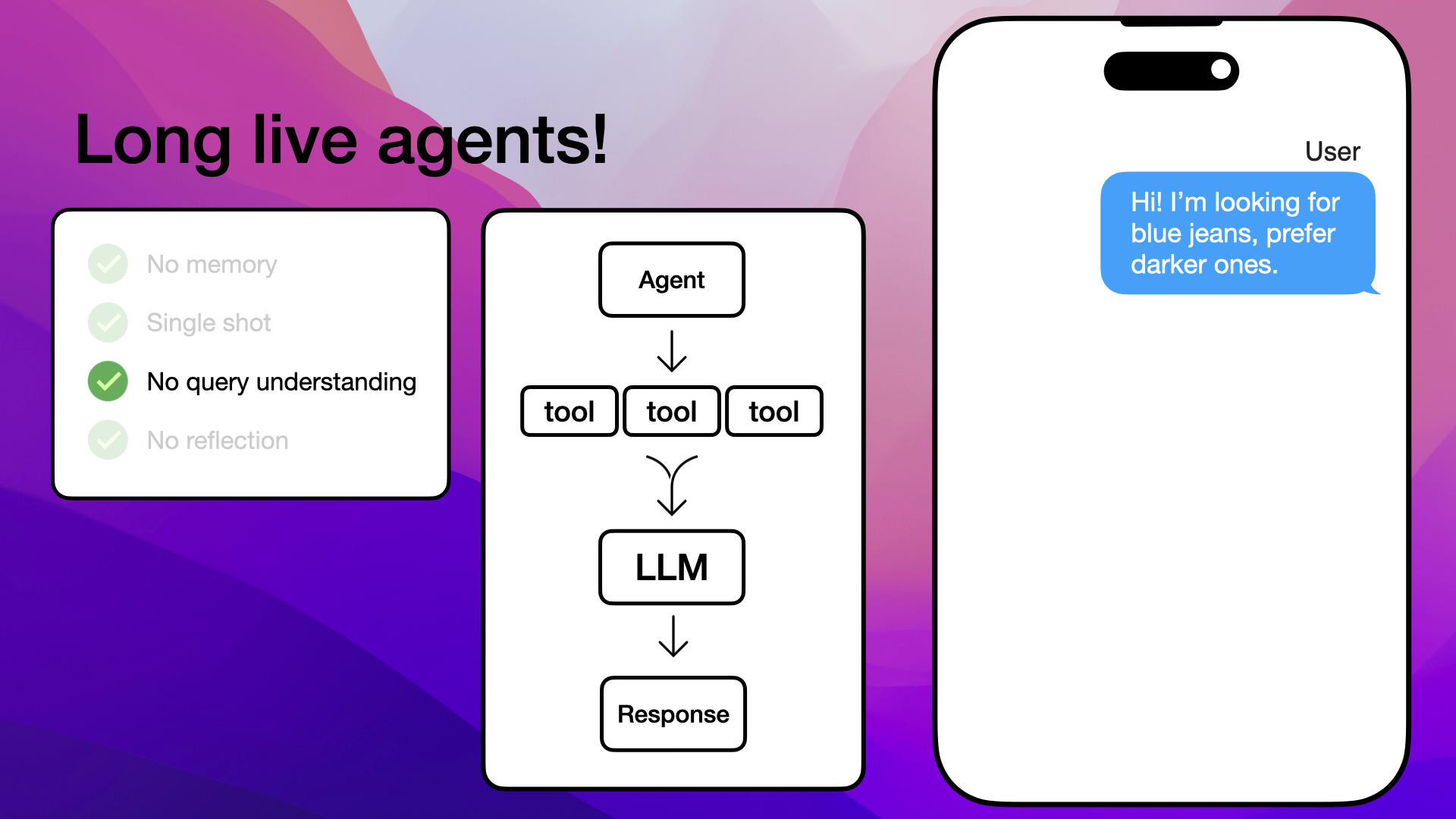

3. The Solution to the No Query Understanding Limitation

The next limitation of naive RAGs we examined was their inability to perform query understanding. In the earlier example, the naive RAG retrieved both light blue and dark blue jeans because the inquiry mentioned both "blue" and "darker", and therefore responded with a mix of both:

Naive RAG responds with a mix of light blue and dark blue jeans due to mixed user inquiry

Agents solve this challenge by using tools. Essentially, we give the agent a set of tools and let it freely choose which ones to use alongside data retrieval:

Agents utilize various tools to enhance data retrieval and understanding

A tool can be an API, a code interpreter, a database, or a file like a PDF or Excel sheet.

Tools can also interact with various data sources, including regular databases or vector databases, allowing agents to pull and process information seamlessly. This flexibility enables agents to handle a wide range of tasks and data types efficiently.

To illustrate, let's see what happens with naive RAGs. They will vectorize the entire customer inquiry and match it to products in our vector database:

Naive RAG vectorizes the entire customer inquiry for matching in the vector database

The problem with this approach is that we'll probably get semantic matches to both blue jeans and dark blue jeans, due to both being mentioned in the customer inquiry:

Semantic matches include both blue jeans and dark blue jeans due to mixed inquiry

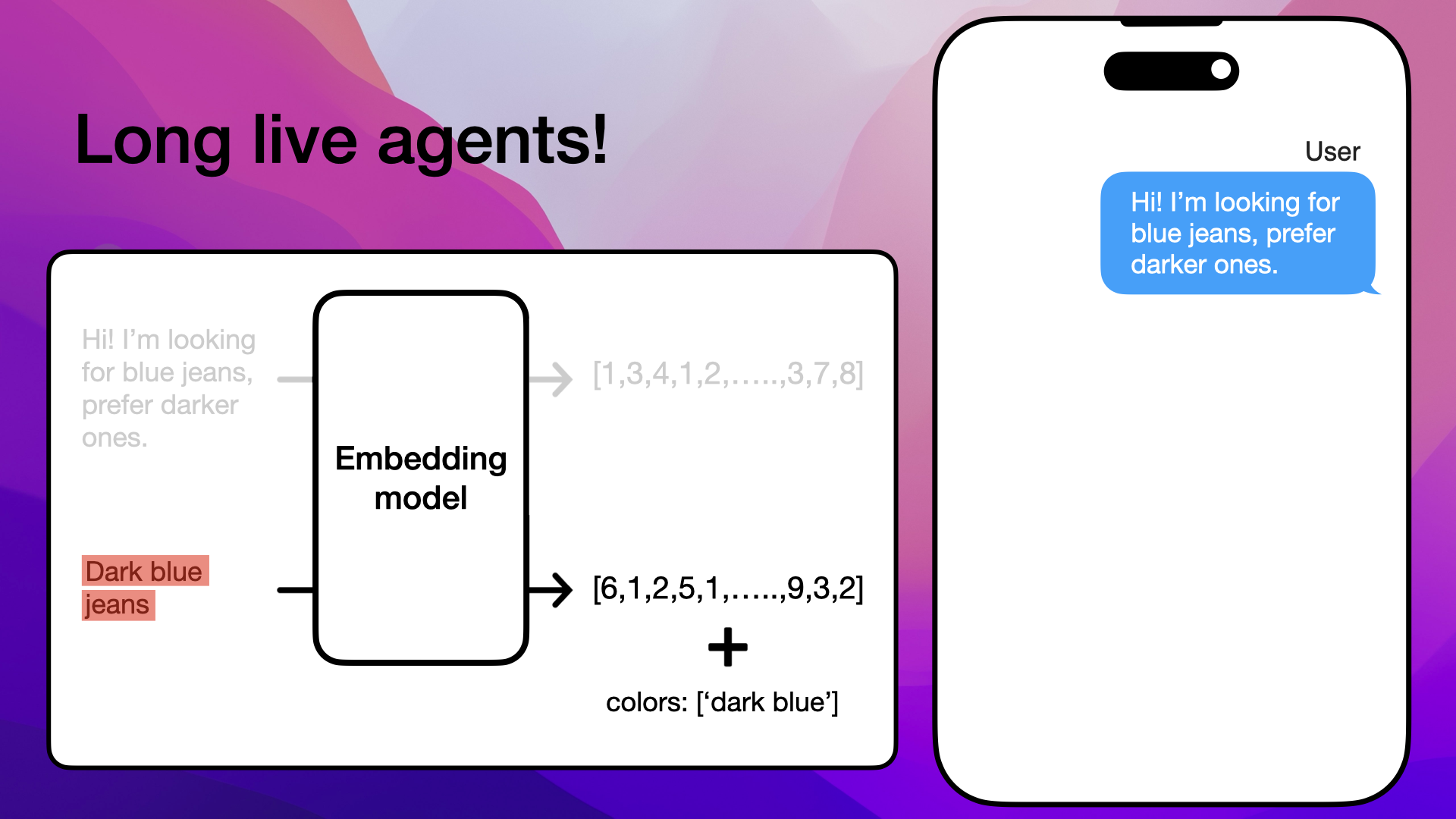

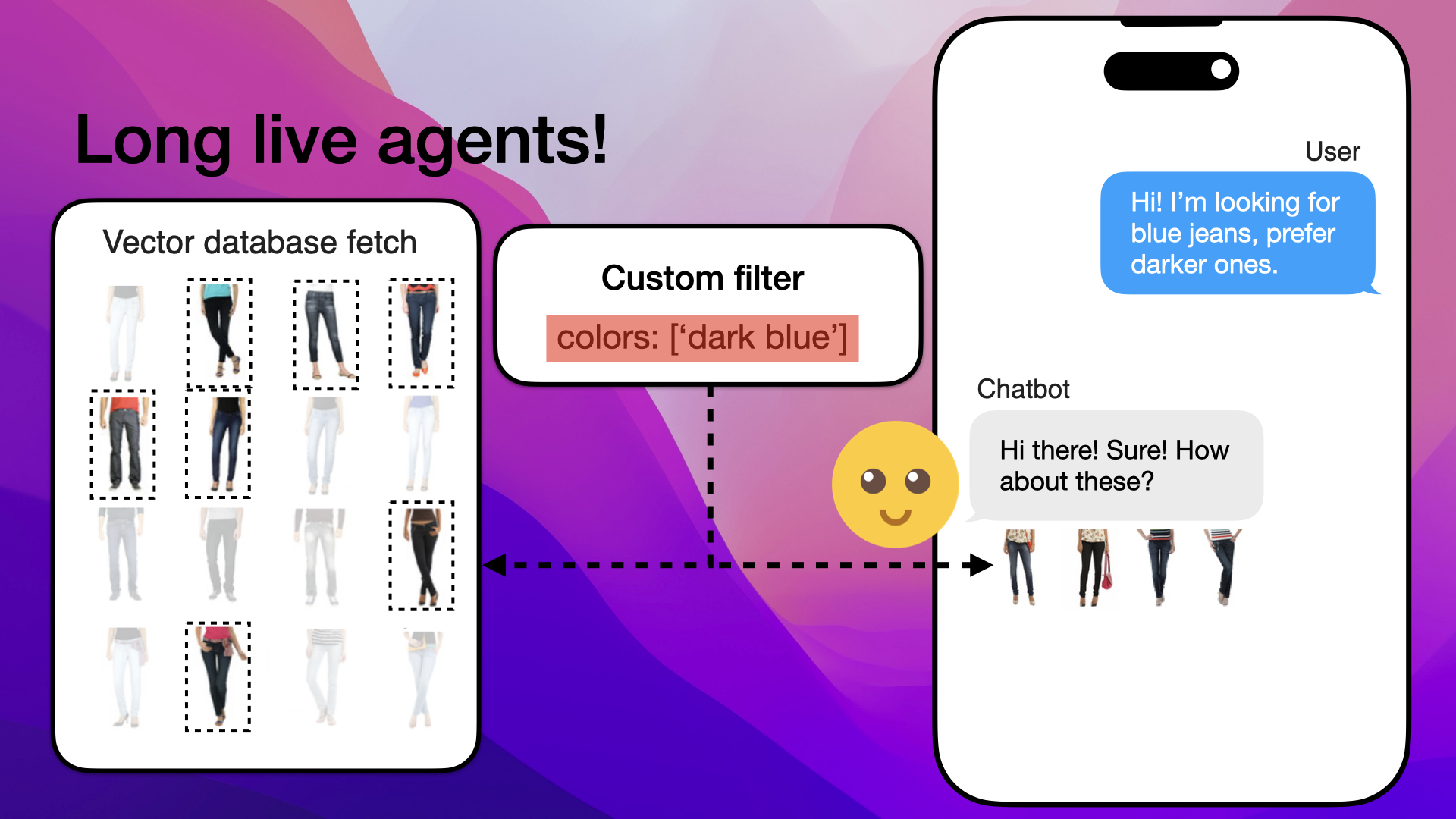

Since agents have access to different tools, they can choose to rephrase the customer inquiry. Instead of vectorizing "Hi! I'm looking for blue jeans, prefer darker ones", agents can choose to just vectorize the phrase "dark blue jeans":

Agent rephrases the inquiry to focus on 'dark blue jeans' for accurate vectorization

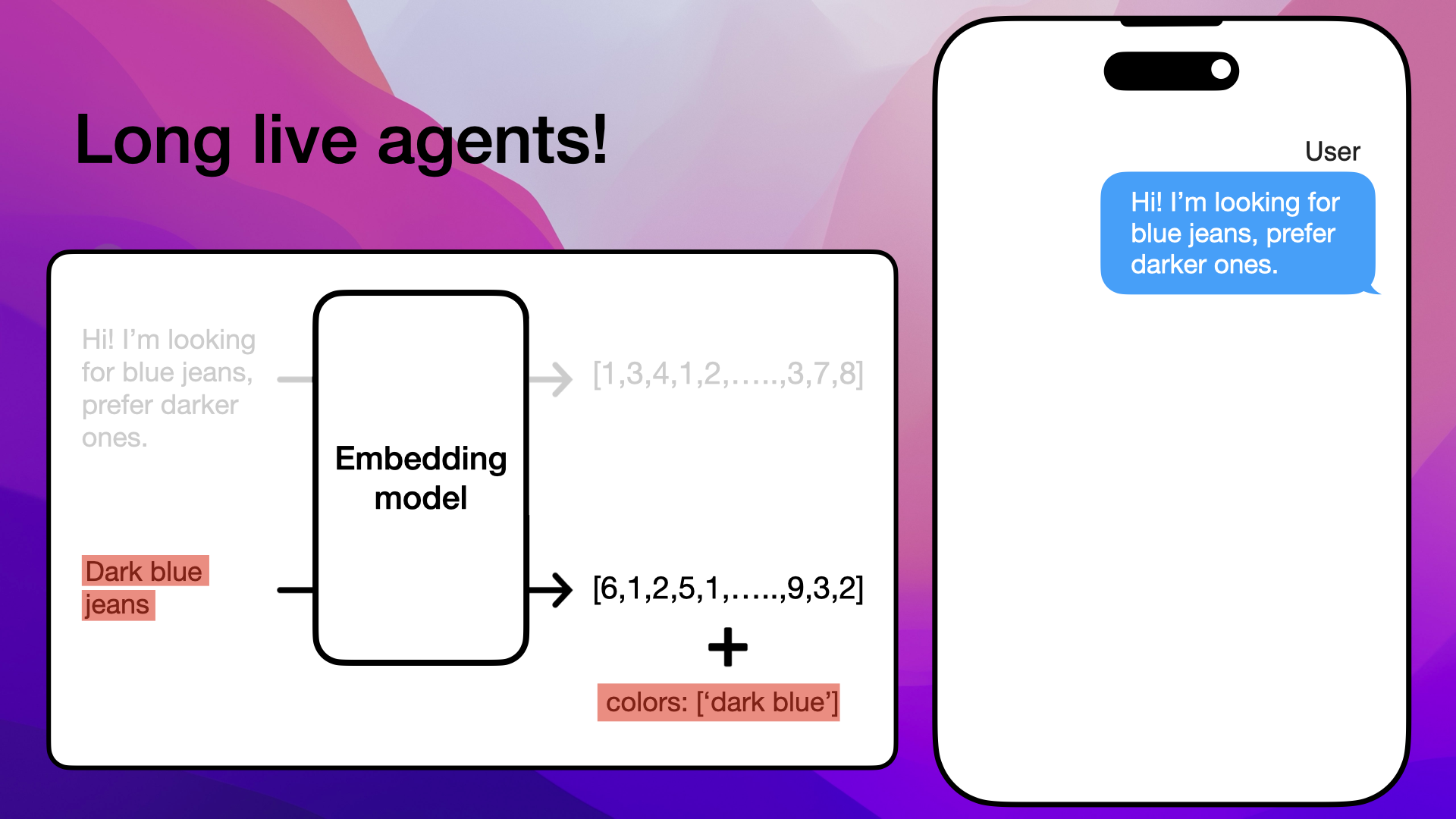

After vectorization, agents can also choose to filter the product data fetch with a custom filter:

Agent applies a custom filter to refine product data retrieval

So, agents are not forced to vectorize the entire customer inquiry; they can choose freely how to interact with the vector database.

Even if the vector database pull includes some light blue jeans:

Initial vector database pull could include light blue jeans neighbors

The custom filter applied by the agent filters out the light blue jeans and responds with product recommendations suitable to what the customer asked for:

Agent's custom filter ensures recommendations match the customer's request for dark blue jeans

In summary, the limitation of no query understanding is solved by giving agents access to custom tools and allowing them to interact with the vector database freely.
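The rewrite-then-filter flow can be sketched without any framework. The `tool_call` dictionary below is a hard-coded example of what an agent might produce from the customer inquiry, and `neighbors` and `filter_by_color` are hypothetical stand-ins for the vector database result and the custom filter.

```python
# Hypothetical neighbors returned by the vector database for "dark blue jeans".
neighbors = [
    {"name": "Slim-fit jeans", "color": "dark blue"},
    {"name": "Boot-cut jeans", "color": "light blue"},
    {"name": "Straight jeans", "color": "dark blue"},
]


def filter_by_color(items, colors):
    """Keep items whose color is in `colors`; an empty list means no filtering."""
    if not colors:
        return items
    allowed = {c.lower() for c in colors}
    return [item for item in items if item["color"].lower() in allowed]


# What the agent might derive from
# "Hi! I'm looking for blue jeans, prefer darker ones" (assumed, not computed here).
tool_call = {"query": "dark blue jeans", "colors": ["dark blue"]}

recommended = filter_by_color(neighbors, tool_call["colors"])
print([item["name"] for item in recommended])  # ['Slim-fit jeans', 'Straight jeans']
```

The light blue pair is dropped even though it was among the nearest neighbors, which is the behavior a naive pipeline has no way to express.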

Using Advanced Tools in Agents

To tackle the query understanding limitation, agents use more complex functions. This allows them to filter and refine data retrieval based on the user's specific needs.

Here's an example function demonstrating how an agent can decide whether to filter the search results:

    from typing import List

    from llama_index.core.agent import ReActAgent
    from llama_index.core.tools import FunctionTool
    from llama_index.core.vector_stores import (
        FilterCondition,
        MetadataFilters,
        VectorStoreQuery,
    )

    # Assumed to be defined elsewhere: `vector_store`, `get_my_embedding`, and `llm`

    def vector_query(
        query: str,
        colors: List[str]
    ) -> str:
        """Perform a vector search over an index.

        query (str): the string query to be embedded.
        colors (List[str]): Filter by set of colors. Leave BLANK if we want to perform a vector search
            over all colors. Otherwise, filter by the set of specified colors. Use lowercase.
        """
        # Create metadata dictionaries for color filters
        metadata_dicts = [
            {"key": "color", "value": c} for c in colors
        ]

        # Define the query object with embedding and optional filters
        query_object = VectorStoreQuery(
            query_str=query,
            query_embedding=get_my_embedding(query),
            alpha=0.8,
            mode="hybrid",
            similarity_top_k=5,
            # Apply filters if provided
            filters=MetadataFilters.from_dicts(
                metadata_dicts,
                condition=FilterCondition.OR
            )
        )

        # Execute the query against the vector store
        response = vector_store.query(query_object)

        # Return the result as a string, matching the declared return type
        return str(response)

    # Convert the function into a tool
    vector_query_tool = FunctionTool.from_defaults(
        name="vector_tool",
        fn=vector_query
    )

    # Integrate the tool into an agent
    agent = ReActAgent.from_tools([vector_query_tool], llm=llm, verbose=True)

This function allows the agent to perform a vector search with optional color filtering. By providing the flexibility to apply filters or not, the agent can better understand and respond to complex queries.

4. The Solution to the No Reflection Limitation

The last limitation we examined was the inability to reflect on the vector database product pull, leading to misunderstandings. For example, the RAG pipeline included men's jeans even though the customer explicitly asked for women's jeans:

Naive RAG includes men's jeans despite the customer's request for women's jeans

This reflection challenge is solved in agents by giving them the ability to first create a game plan and then reflect on the outcome of each step of the plan they made.

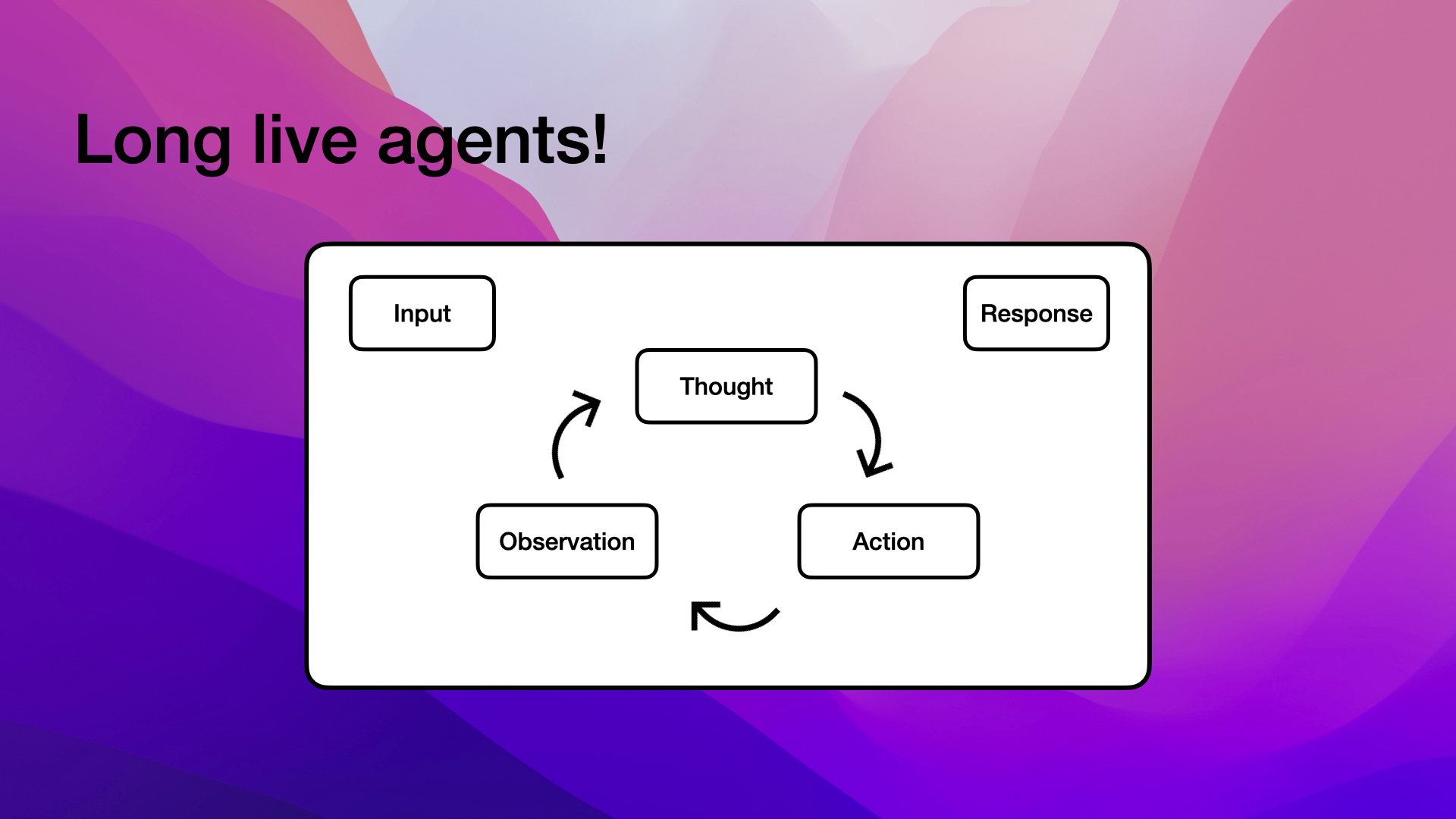

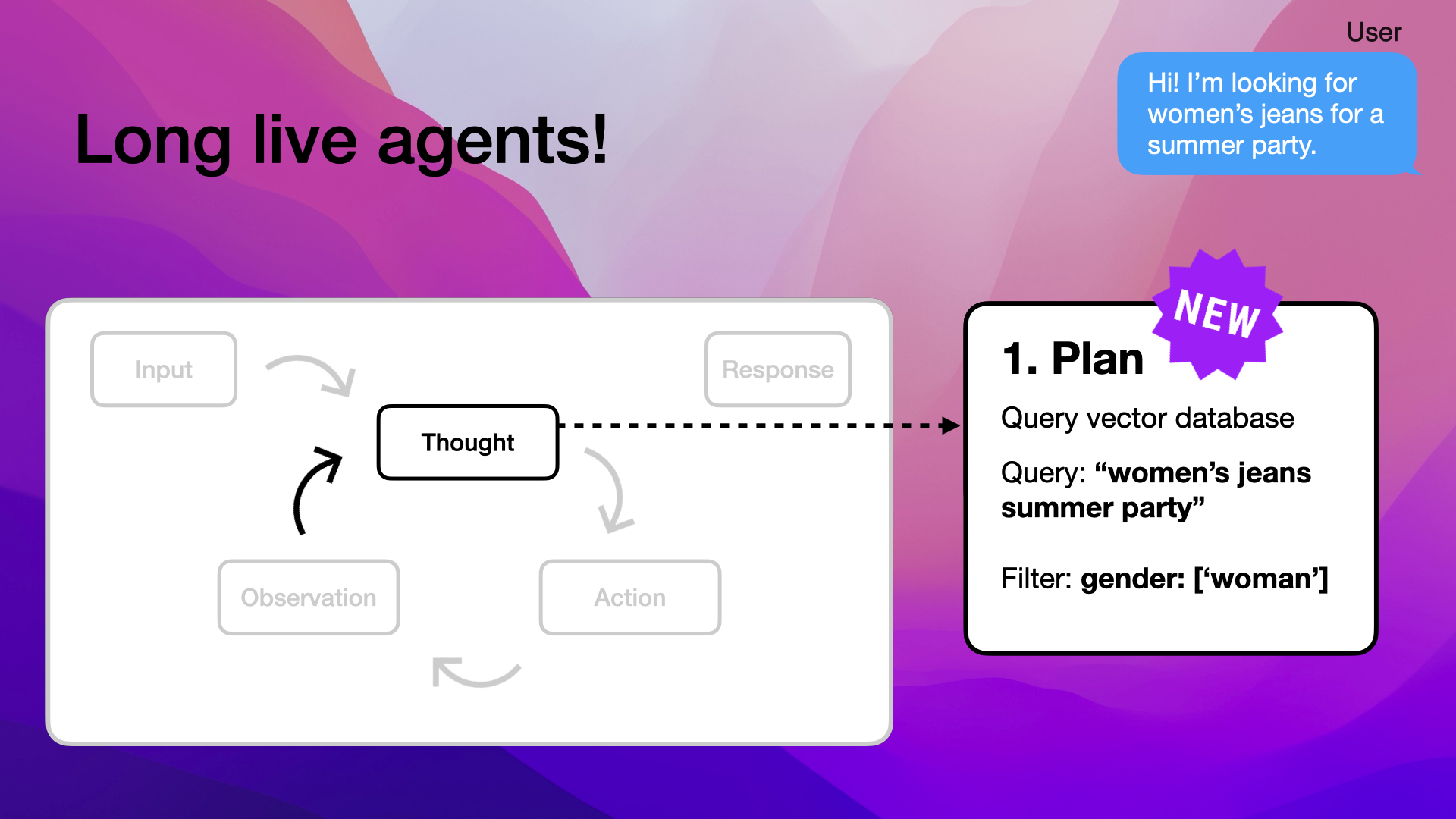

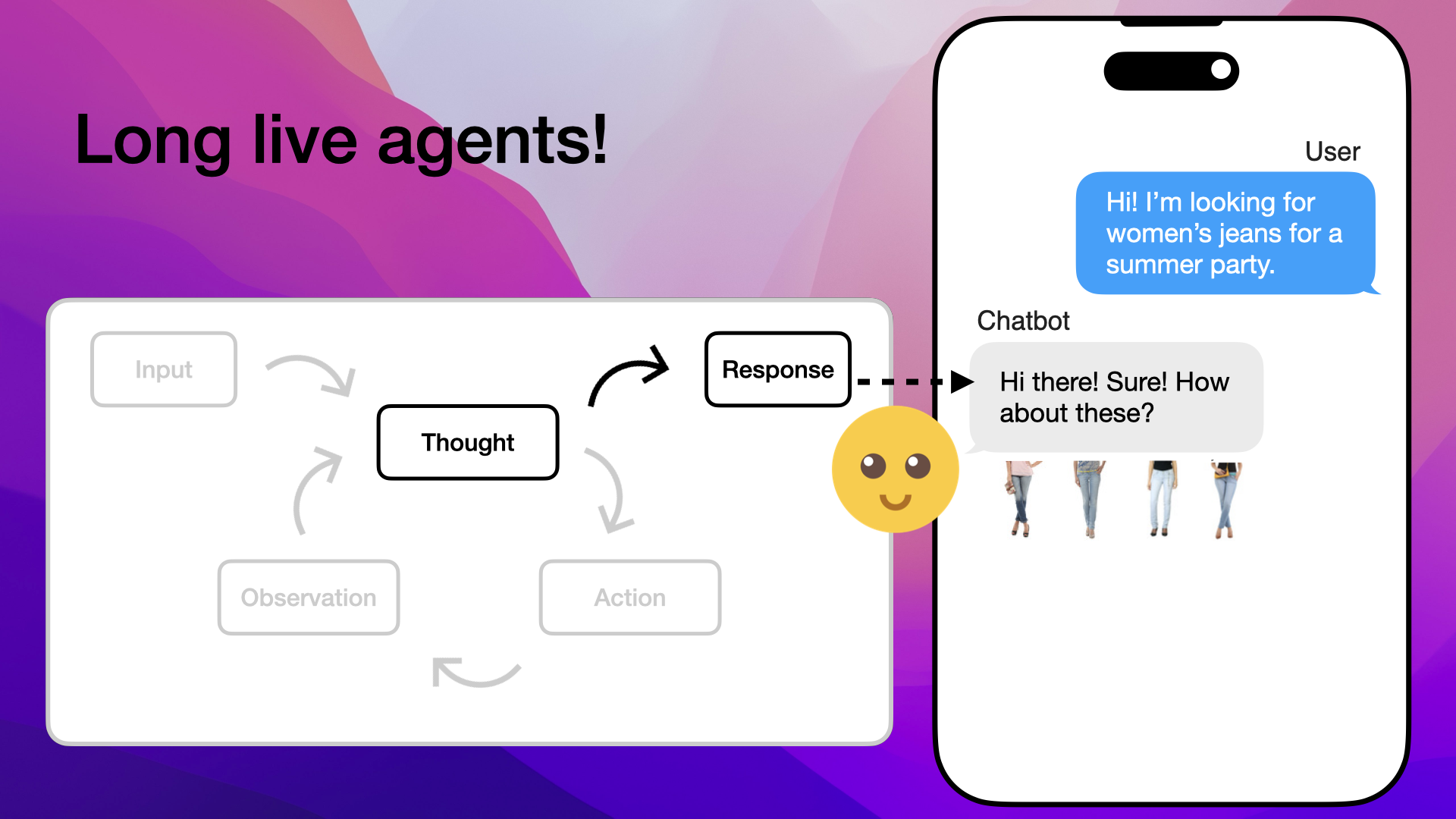

In our case, the agent has an internal loop consisting of thought, action, and observation. For each loop, the agent creates a plan and then evaluates how well each step went:

Agents use an internal loop of thought, action, and observation to reflect and plan

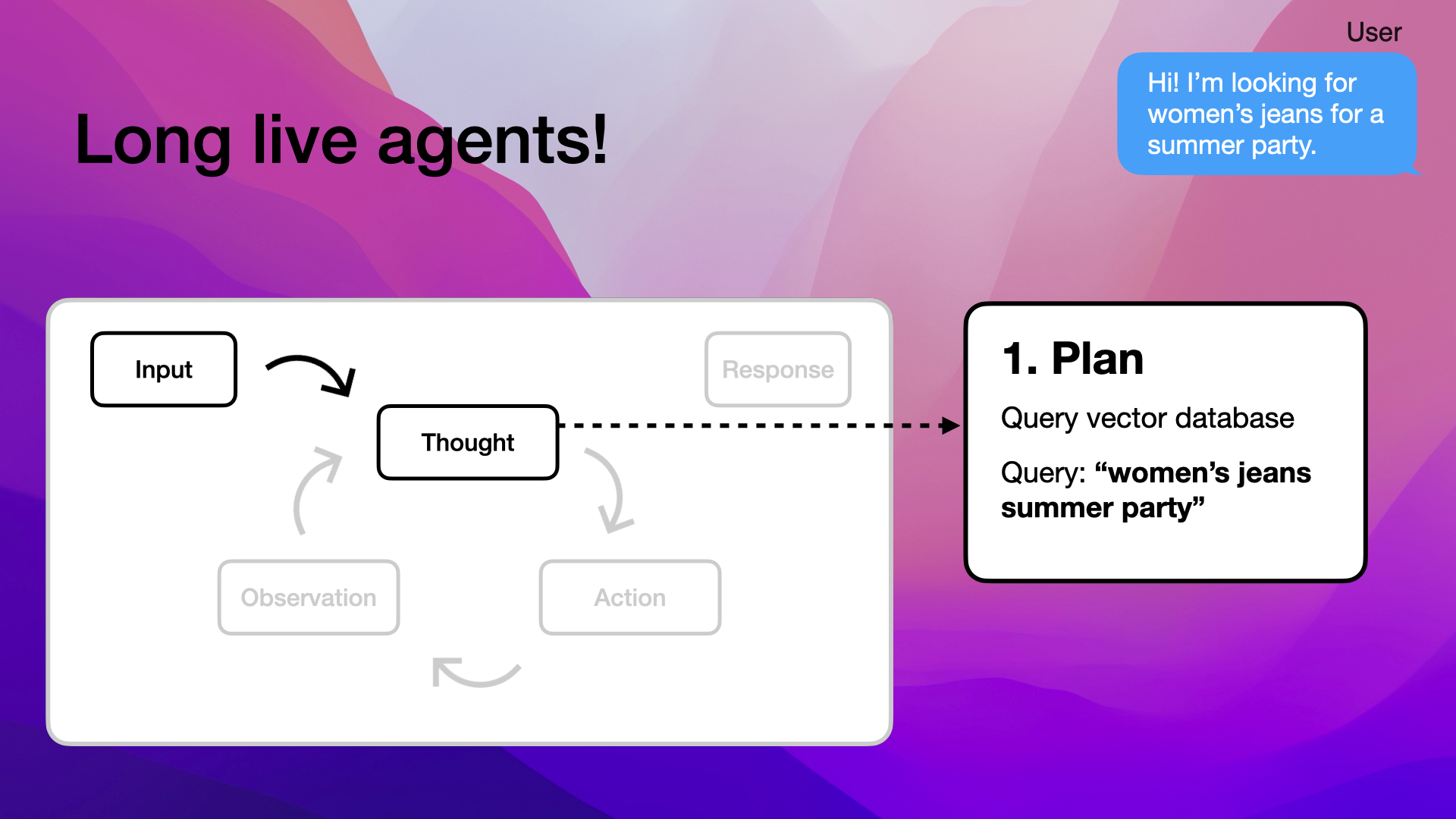

So in our case, the input is "Hi! I'm looking for women's jeans for a summer party":

Customer's input: "Hi! I'm looking for women's jeans for a summer party"

That input is processed through the first step, which is the "thought step". This is the reasoning process where the agent tries to understand the task and make decisions on how to proceed.

In our case, the plan would be to query our vector database looking for women's jeans suitable for a summer party. The query could then be "women's jeans summer party":

Agent formulates a plan: Query the vector database with "women's jeans summer party"

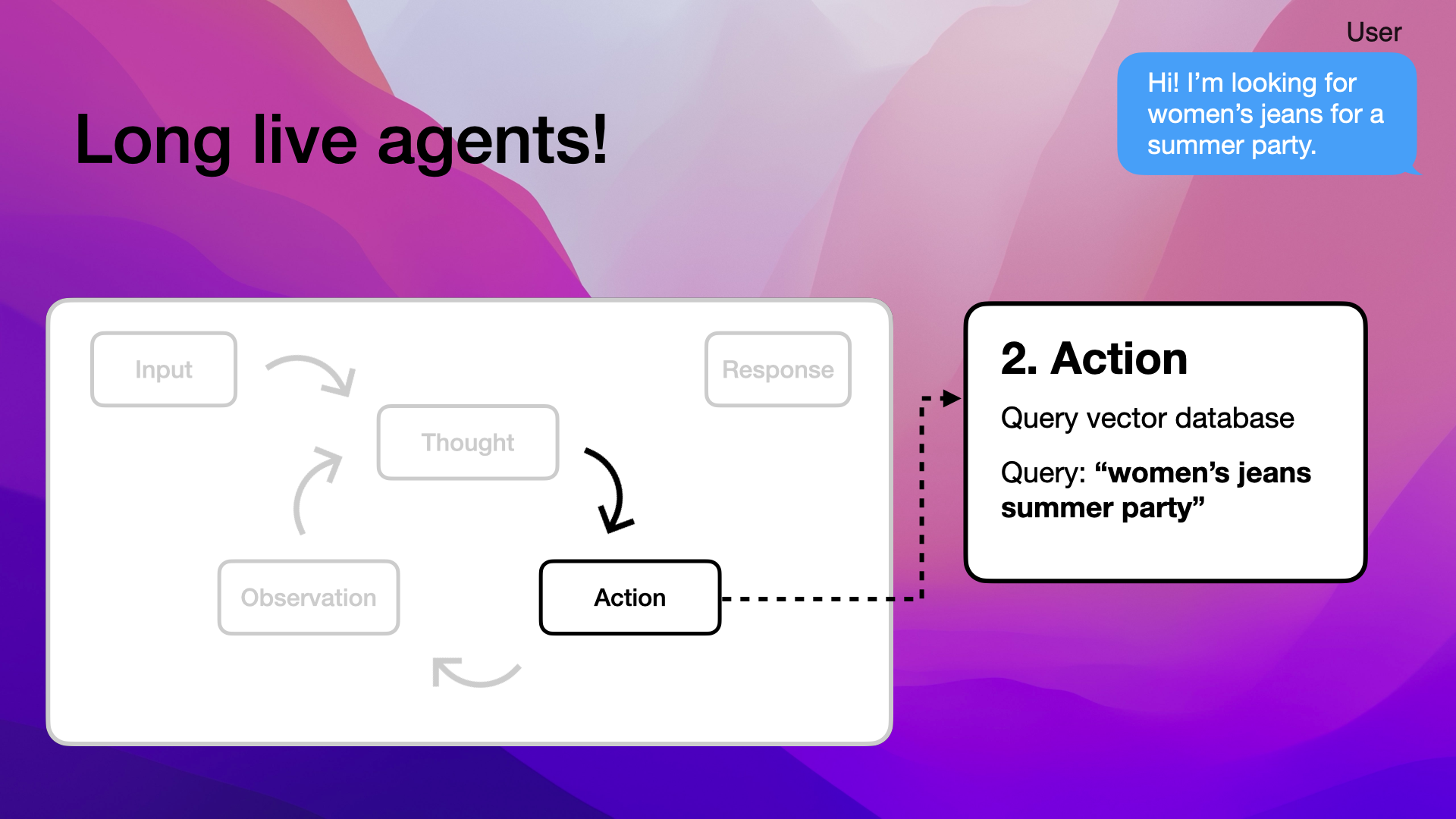

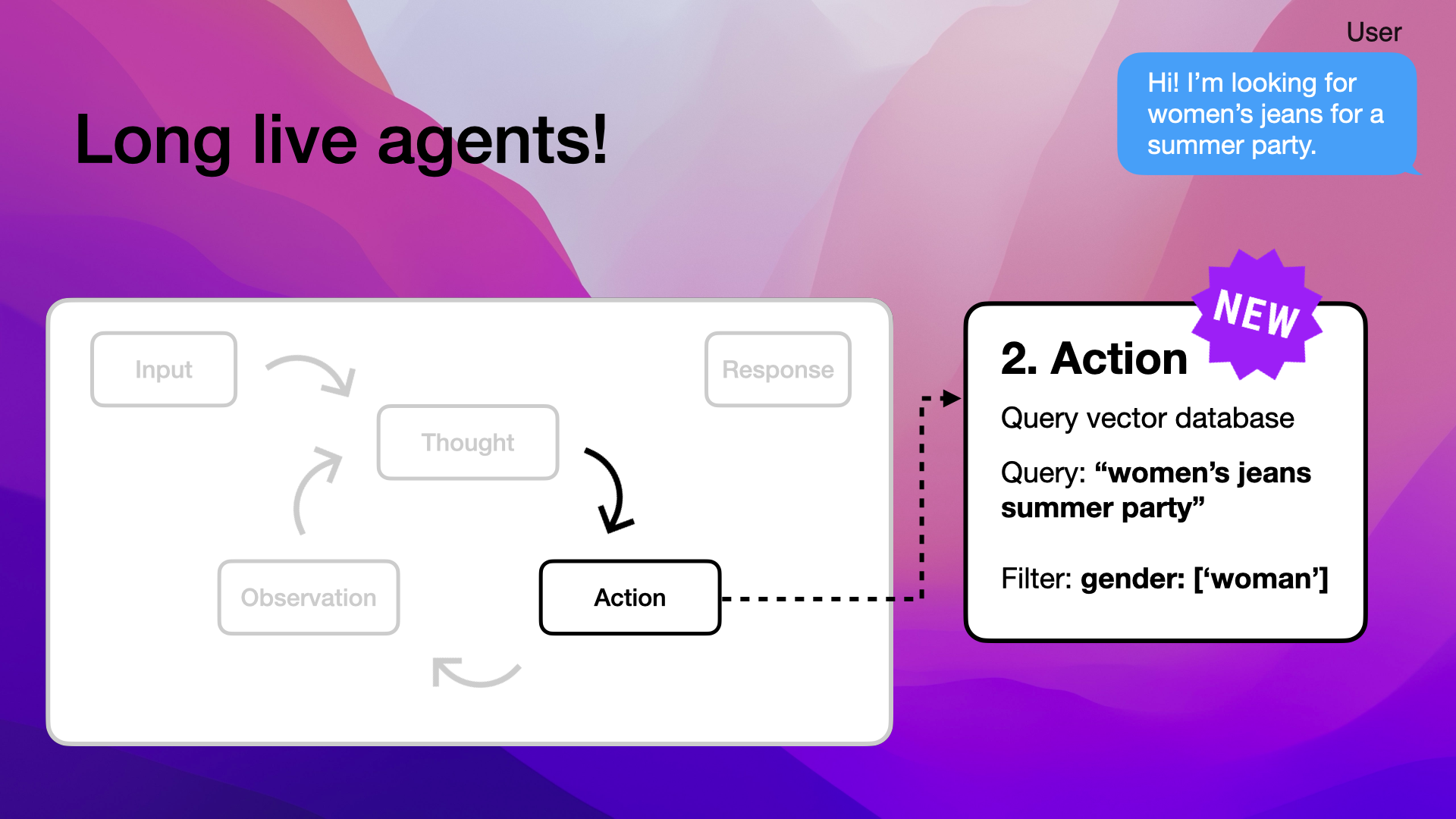

The next step is to act on the plan, in this case, actually querying our vector database and receiving products for the query the agent ran:

Agent executes the plan by querying the vector database

This gives us a range of products, and the agent will evaluate those products in the next step:

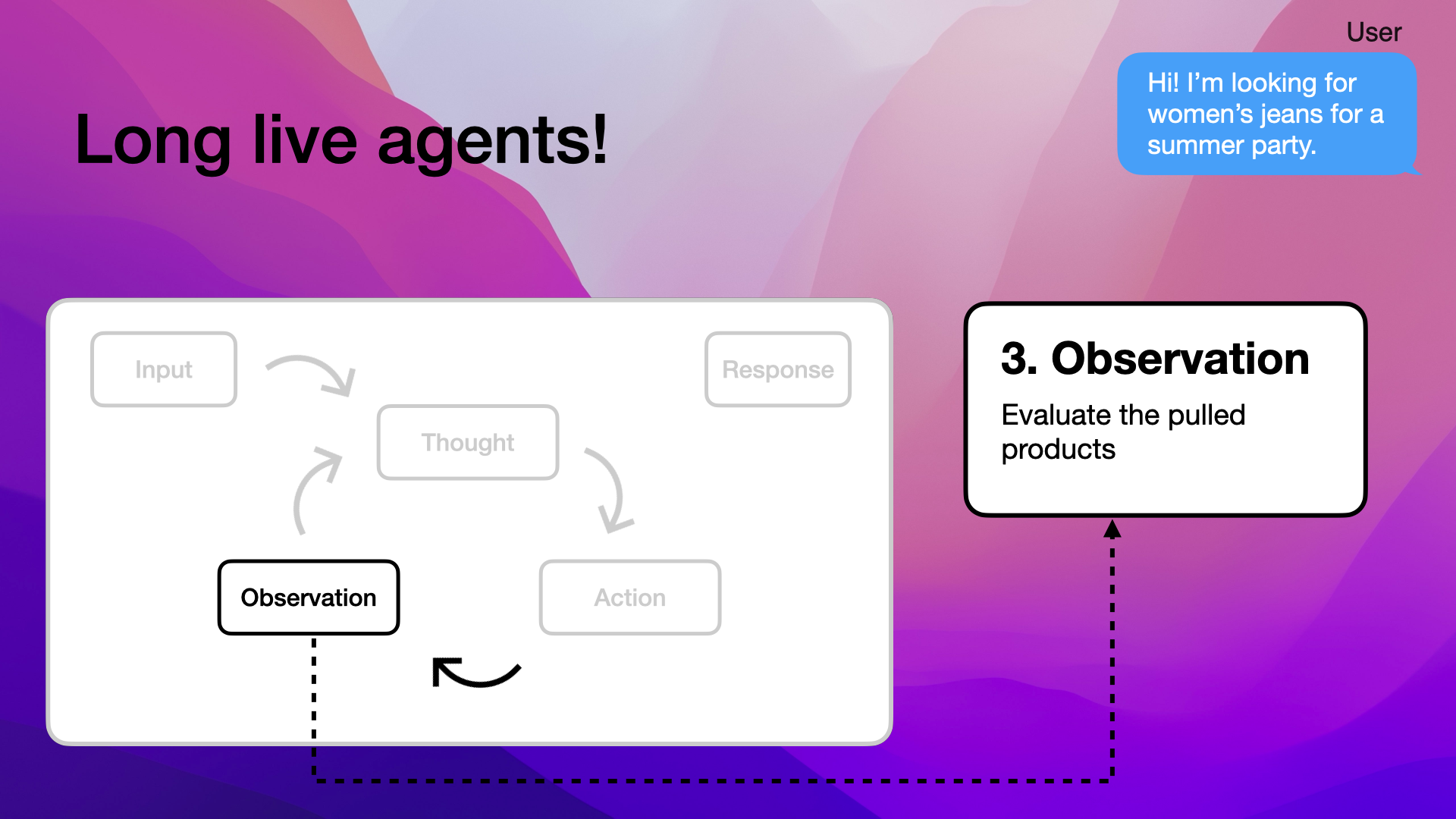

Agent evaluates the range of products retrieved from the vector database

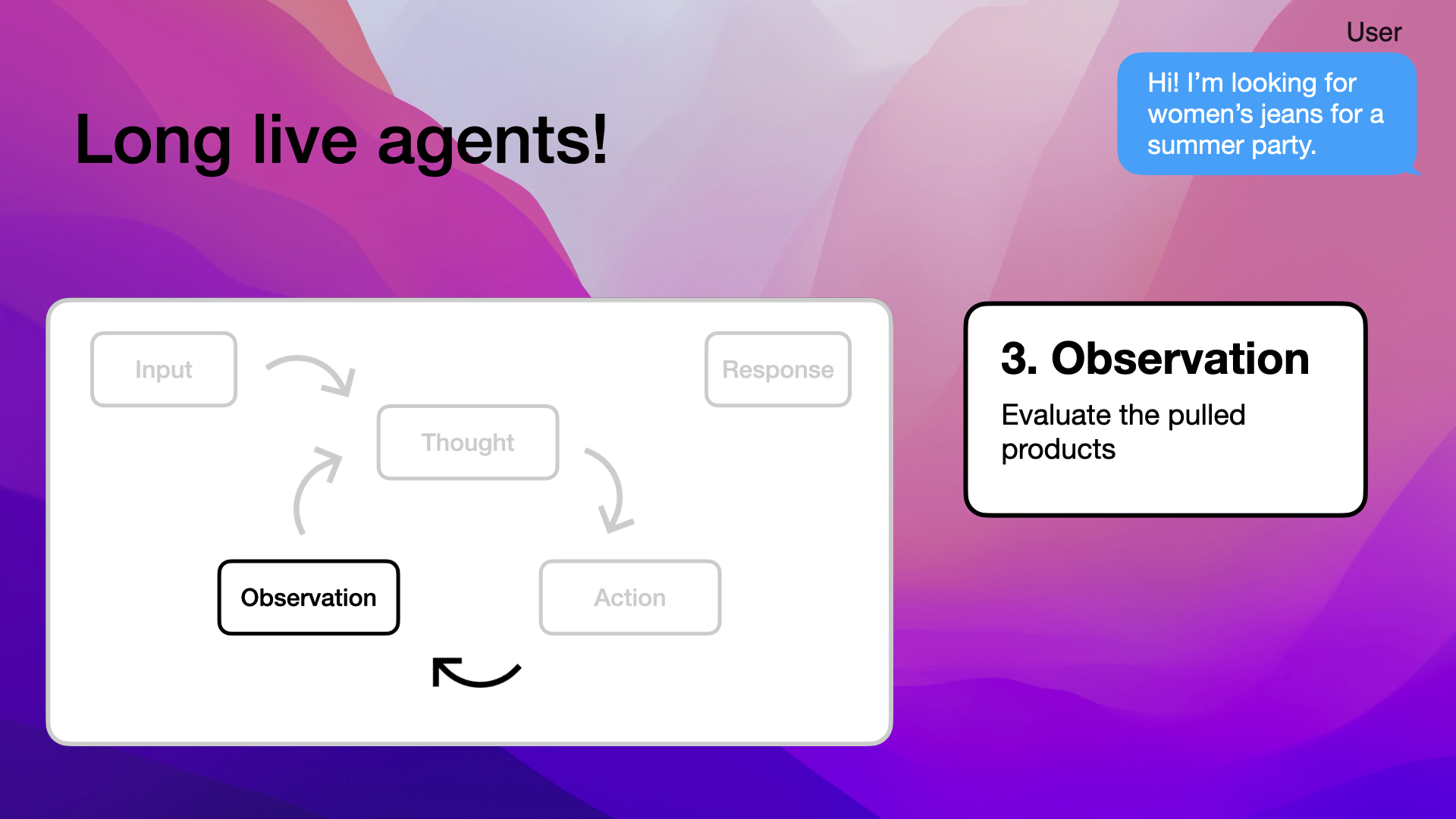

The next step is to observe the pulled products, so let's look at the data we pulled:

Agent observes the products pulled from the vector database

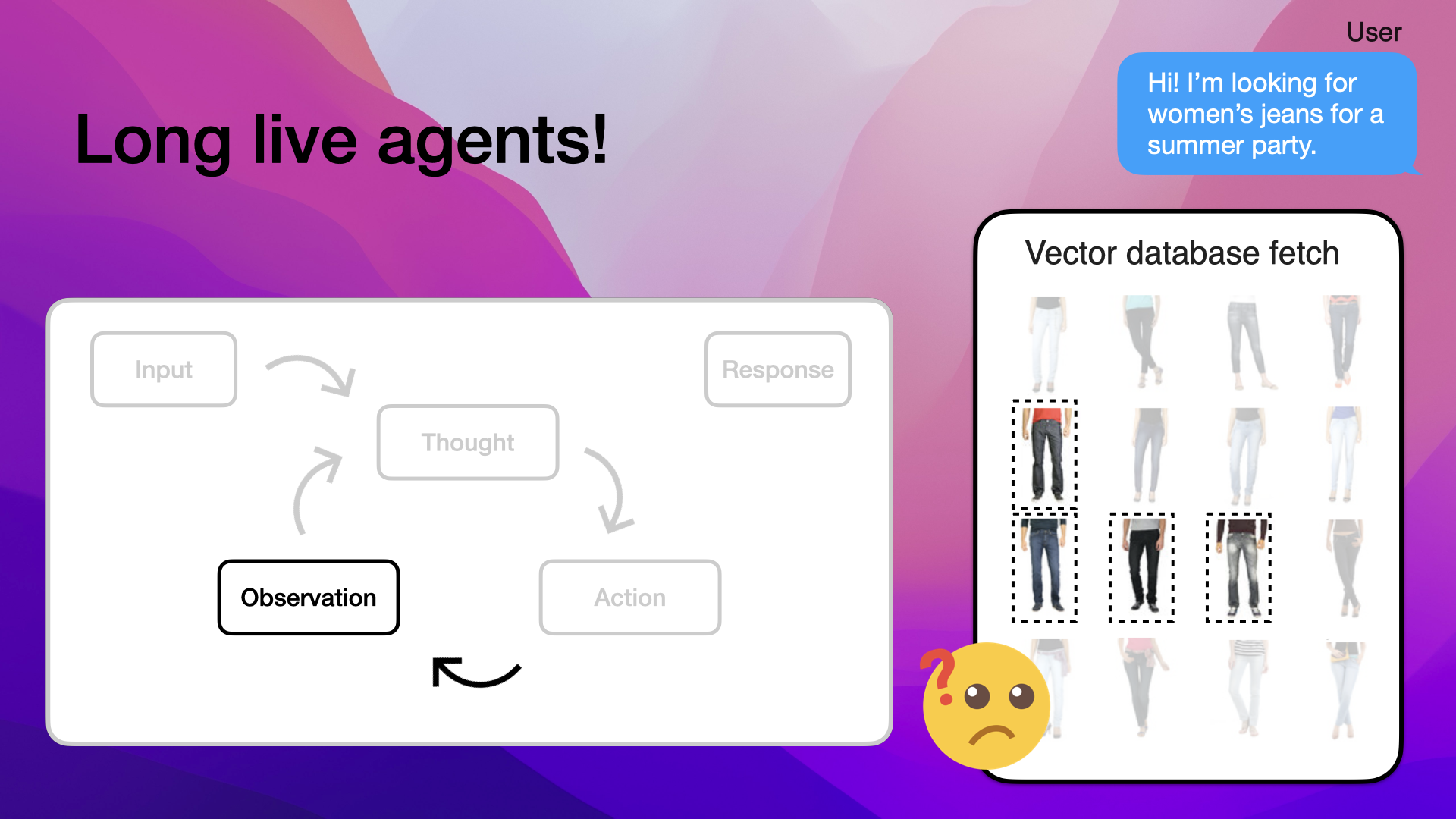

If we look at the data closely, we'll notice some men's jeans:

Observation reveals the inclusion of men's jeans in the product data

Therefore, let's investigate further by pulling up the product descriptions:

Agent examines product descriptions to understand why men's jeans were included in the vector database pull

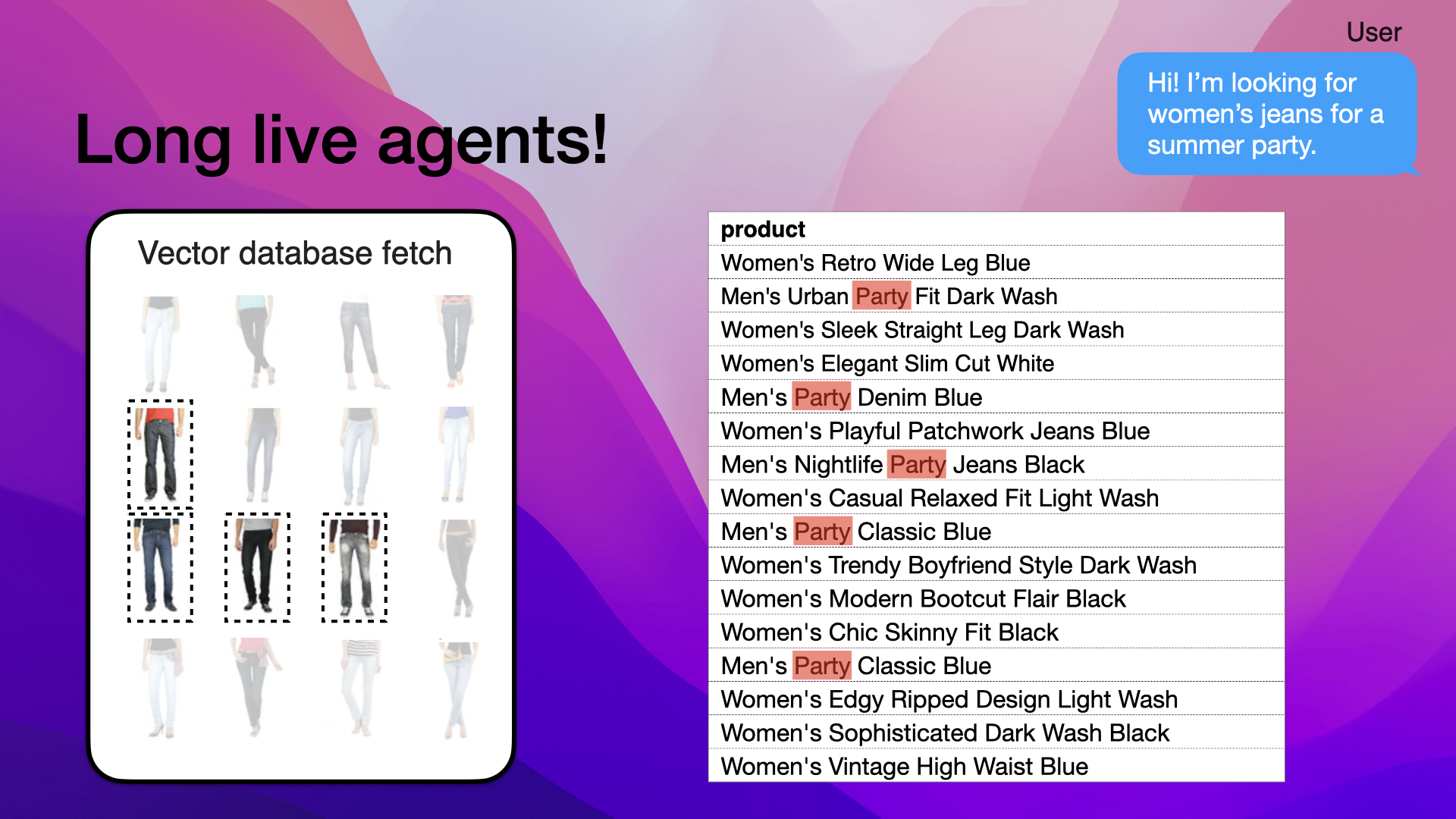

If we examine the product descriptions further, we'll notice that the four men's jeans all have the word "party" in their description:

Men's jeans included due to the keyword "party" in their descriptions

The vector database query "women's jeans summer party" unintentionally matched men's jeans that have "party" in their description:

Vector database query for summer party matched with men's jeans with party in their description

If we go back to our agent's thought process in the first step, the observation regarding the men's party jeans would tweak our plan when we reach the planning phase again:

Agent revises the plan based on observations of the men's jeans data

When the agent is back at the thought step, it might expand the query to still use "women's jeans summer party" but also add a post filter to include only women's jeans:

Revised plan includes a post filter to ensure only women's jeans are retrieved
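A post filter of this kind could look like the sketch below. It assumes each retrieved product is a dictionary with a hypothetical "gender" metadata field; the product names are invented for illustration.

```python
from typing import Dict, List

Product = Dict[str, str]


def post_filter(products: List[Product], gender: str) -> List[Product]:
    """Keep only the retrieved products whose gender metadata matches."""
    return [p for p in products if p.get("gender") == gender]


# Hypothetical results from the first vector database fetch
retrieved: List[Product] = [
    {"name": "Party Slim", "gender": "men"},
    {"name": "Summer Breeze", "gender": "women"},
    {"name": "Night Out Bootcut", "gender": "men"},
    {"name": "Sunset Flare", "gender": "women"},
]

women_only = post_filter(retrieved, "women")
print([p["name"] for p in women_only])
```

Because the filter runs after retrieval, it can shrink the result set below the requested number of neighbors, so an agent may want to fetch more candidates than it plans to show.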

This would be the reflection from the observation step when the agent examined the jeans data and saw the men's jeans:

Agent acts on the revised plan to query for women's jeans suitable for a summer party.

Looking at the new vector database fetch, we're seeing only women's jeans after pulling product data:

New vector database fetch includes only women's jeans

Moving on to the observation step, the agent again evaluates the vector database fetch:

Agent re-evaluates the products fetched from the vector database

Now, only seeing women's jeans, the agent moves on to the thought step again:

Agent confirms the relevance of the fetched products before finalizing the response

The thought step is the reasoning and planning phase, so here the agent decides it has enough information to answer the customer inquiry:

Agent decides it has sufficient information to respond to the customer

This decision makes the agent break out of the loop and generate a response. The new response only includes women's jeans, all suitable for a summer party:

Agent generates a final response with recommendations that match the customer's request
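The walkthrough above can be sketched as a small loop in plain Python. This is a simplified illustration, not how any particular framework implements it: the `search` function is a hypothetical keyword-based stand-in for the vector database, and the "thought" step is a hard-coded rule where a real agent would ask an LLM to reason.

```python
from typing import Dict, List, Optional

Product = Dict[str, str]

# Hypothetical product catalog
CATALOG: List[Product] = [
    {"name": "Party Slim", "gender": "men", "description": "slim party jeans"},
    {"name": "Summer Breeze", "gender": "women", "description": "light summer party jeans"},
    {"name": "Office Straight", "gender": "women", "description": "straight office jeans"},
]


def search(query: str, gender: Optional[str] = None) -> List[Product]:
    """Stand-in for the vector database: match products that share at least
    two words with the query, then apply an optional gender filter."""
    words = set(query.lower().split())
    hits = [p for p in CATALOG if len(words & set(p["description"].split())) >= 2]
    if gender is not None:
        hits = [p for p in hits if p["gender"] == gender]
    return hits


def run_agent(query: str, wanted_gender: str, max_steps: int = 3) -> List[Product]:
    """Thought -> action -> observation loop with a simple reflection rule."""
    gender_filter: Optional[str] = None
    results: List[Product] = []
    for step in range(1, max_steps + 1):
        # Thought: plan the next query, using what was learned so far
        print(f"step {step} plan: query={query!r}, filter={gender_filter}")
        # Action: execute the plan against the (stand-in) vector database
        results = search(query, gender_filter)
        # Observation: inspect the results for products that don't fit
        off_target = [p for p in results if p["gender"] != wanted_gender]
        if not off_target:
            break  # enough information: leave the loop and answer
        # Reflection: revise the plan by adding a filter for the next round
        gender_filter = wanted_gender
    return results


final = run_agent("women's jeans summer party", wanted_gender="women")
print([p["name"] for p in final])
```

The first pass returns a men's product, the observation step notices it, and the second pass runs with the filter in place before the loop exits.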

Solution: Reflection and Planning in Agents

To address the "No Reflection Limitation", agents can create a game plan and reflect on each step's outcome. This process involves an internal loop of thought, action, and observation, enabling the agent to dynamically adjust its strategy based on the results of each action.

Here's a code example using LlamaIndex to implement such a reflective agent:

```python
from typing import List, Optional

from llama_index.core.agent import FunctionCallingAgentWorker, StructuredPlannerAgent
from llama_index.core.tools import FunctionTool
from llama_index.core.vector_stores import (
    FilterCondition,
    MetadataFilters,
    VectorStoreQuery,
)

# Assumed to be defined elsewhere in your application:
#   llm              - a function-calling LLM
#   vector_store     - the vector store holding the product catalog
#   get_my_embedding - a helper that embeds a query string


# Define the function to perform a vector search with optional color filtering
def vector_query(query: str, colors: Optional[List[str]] = None) -> str:
    """Perform a vector search over an index.

    query (str): the string query to be embedded.
    colors (List[str]): Filter by set of colors. Leave BLANK if we want to perform a vector search
        over all colors. Otherwise, filter by the set of specified colors. Use lowercase.
    """
    # Build the color filters only if colors were provided
    filters = None
    if colors:
        metadata_dicts = [{"key": "color", "value": c} for c in colors]
        filters = MetadataFilters.from_dicts(
            metadata_dicts,
            condition=FilterCondition.OR,
        )

    # Define the query object with embedding and optional filters
    query_object = VectorStoreQuery(
        query_str=query,
        query_embedding=get_my_embedding(query),
        alpha=0.8,
        mode="hybrid",
        similarity_top_k=5,
        filters=filters,
    )

    # Execute the query against the vector store
    response = vector_store.query(query_object)

    # Return the response as a string, matching the declared return type
    return str(response)


# Convert the function into a tool
vector_query_tool = FunctionTool.from_defaults(
    name="vector_tool",
    fn=vector_query,
)

# Create the function calling worker for reasoning
agent_worker = FunctionCallingAgentWorker.from_tools(
    [vector_query_tool],
    llm=llm,
    verbose=True,
    system_prompt="""\
You are an agent designed to answer customers looking for jeans.
Please always use the tools provided to answer a question. Do not rely on prior knowledge.
Drive sales and always feel free to ask a user for more information.
""",
)

# Wrap the worker in the top-level planner
agent = StructuredPlannerAgent(
    agent_worker, tools=[vector_query_tool], verbose=True
)

# Example usage of the agent to handle a customer inquiry
response = agent.chat("I'm looking for women's jeans for a summer party")
print(response)
```

This example shows how to create an agent that can reflect and adjust its strategy dynamically:

- Function Definition: The `vector_query` function performs a vector search with optional color filtering. This function allows the agent to refine its search based on the user's specific needs.

- Tool Conversion: The function is converted into a tool using `FunctionTool.from_defaults`, making it accessible to the agent.

- Agent Worker: The `FunctionCallingAgentWorker` is created with the tool and a system prompt. This worker is responsible for using the tool to answer customer inquiries. The system prompt guides the agent's behavior, instructing it to always use the provided tools and to drive sales by asking for more information when needed.

- Top-Level Planner: The `StructuredPlannerAgent` wraps the worker, adding a planning layer that allows the agent to dynamically plan and reflect on its actions.

- Example Usage: The agent processes a customer inquiry ("I'm looking for women's jeans for a summer party"), demonstrating how it uses the tool to provide a relevant response.

This setup shows how simple it is to fix the "No Reflection Limitation" by enabling the agent to plan, act, and reflect on its actions using tools and a structured approach.

Summary

That's how an agent would handle the last example with reflection. We covered a lot of concepts, so let's summarize agents versus naive RAG one last time.

I'm saying that naive RAG is dead because it has four main limitations:

- They don't have a conversation memory

- They only have single-shot generation

- They don't have the ability to understand queries

- And they can't reflect on what they retrieved before answering

Agents

Agents, on a high level, span from very simple pipelines that are low cost and low latency, all the way to advanced applications that are more costly to run and have significantly higher latency.

Agent Ingredients vs. Full Agents

We can split agentic pipelines into two categories. The first is agent ingredients: pipelines or products that show some agentic behaviors, like memory, query planning, or access to tools. A product or pipeline can have one or several of these ingredients. The second is full agents, which have access to all the agentic tools and are also capable of dynamic planning.
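One way to picture the distinction is as a set of capability flags. The sketch below is only an illustration; the `Pipeline` class and its field names are hypothetical and chosen to mirror the ingredients named above.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class Pipeline:
    """A pipeline described by which agent ingredients it has."""
    name: str
    memory: bool = False
    query_planning: bool = False
    tools: bool = False
    dynamic_planning: bool = False

    def ingredients(self) -> List[str]:
        """Names of the agentic capabilities this pipeline has."""
        return [f.name for f in fields(self) if f.name != "name" and getattr(self, f.name)]

    def is_full_agent(self) -> bool:
        """A full agent has every ingredient, including dynamic planning."""
        return self.memory and self.query_planning and self.tools and self.dynamic_planning


naive_rag = Pipeline("naive RAG")
chatbot = Pipeline("RAG with memory", memory=True)
full_agent = Pipeline(
    "full agent", memory=True, query_planning=True, tools=True, dynamic_planning=True
)

for pipeline in (naive_rag, chatbot, full_agent):
    print(pipeline.name, pipeline.ingredients(), pipeline.is_full_agent())
```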

Long Live Agents

In conclusion, we're solving the limitations of naive RAGs with agents by giving them:

- Conversation memory

- The ability to route between internal tools, fixing the single-shot generation

- Access to external tools, addressing the inability to understand queries

- The ability to create a plan and dynamically adjust it, solving the issue of reflection
