Advent Calendar Day 8: How AI Agents Handle Negations in Queries

This December, I'm showing how naive chatbots fail and how AI agents make shopping easier.

We've seen naive chatbots fail at clarifying questions, context shifts, numerical requirements, multiple requests in one query, price filters, style suggestions and unavailable colors.

Today, the customer wants men's casual shoes but not in black. The naive chatbot still includes black shoes.

The AI agent, however, filters out black shoes and shows other colors.

Source Code

For a deeper dive into this example, check out my GitHub repository for a detailed, step-by-step implementation in Jupyter Notebooks, breaking down the code and methodology used by the AI agent in today's scenario.

Introducing SoleMates

SoleMates is the fictional online shoe store we'll use to illustrate these concepts.

Today's Challenge: Negation in Queries

Scenario

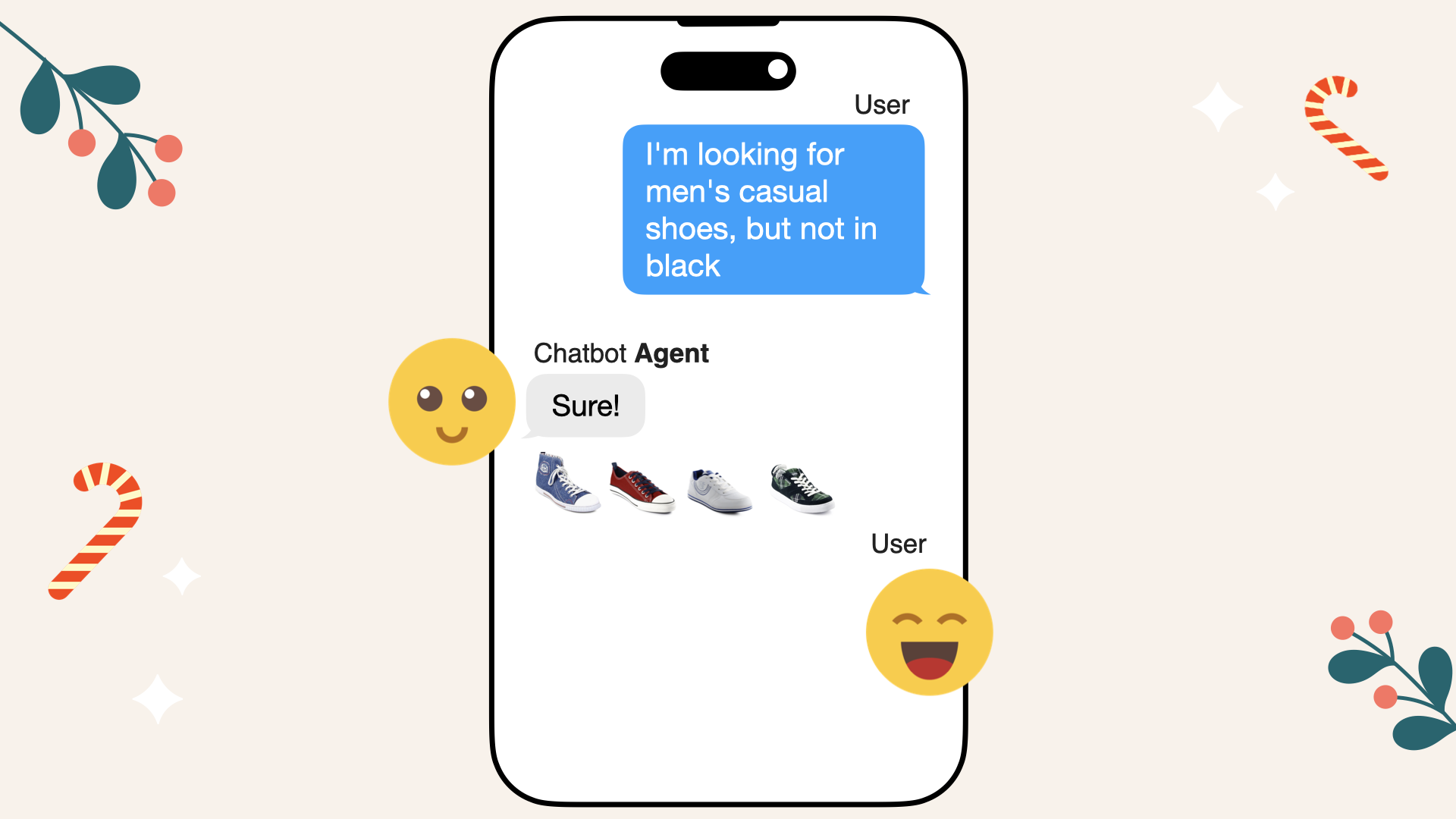

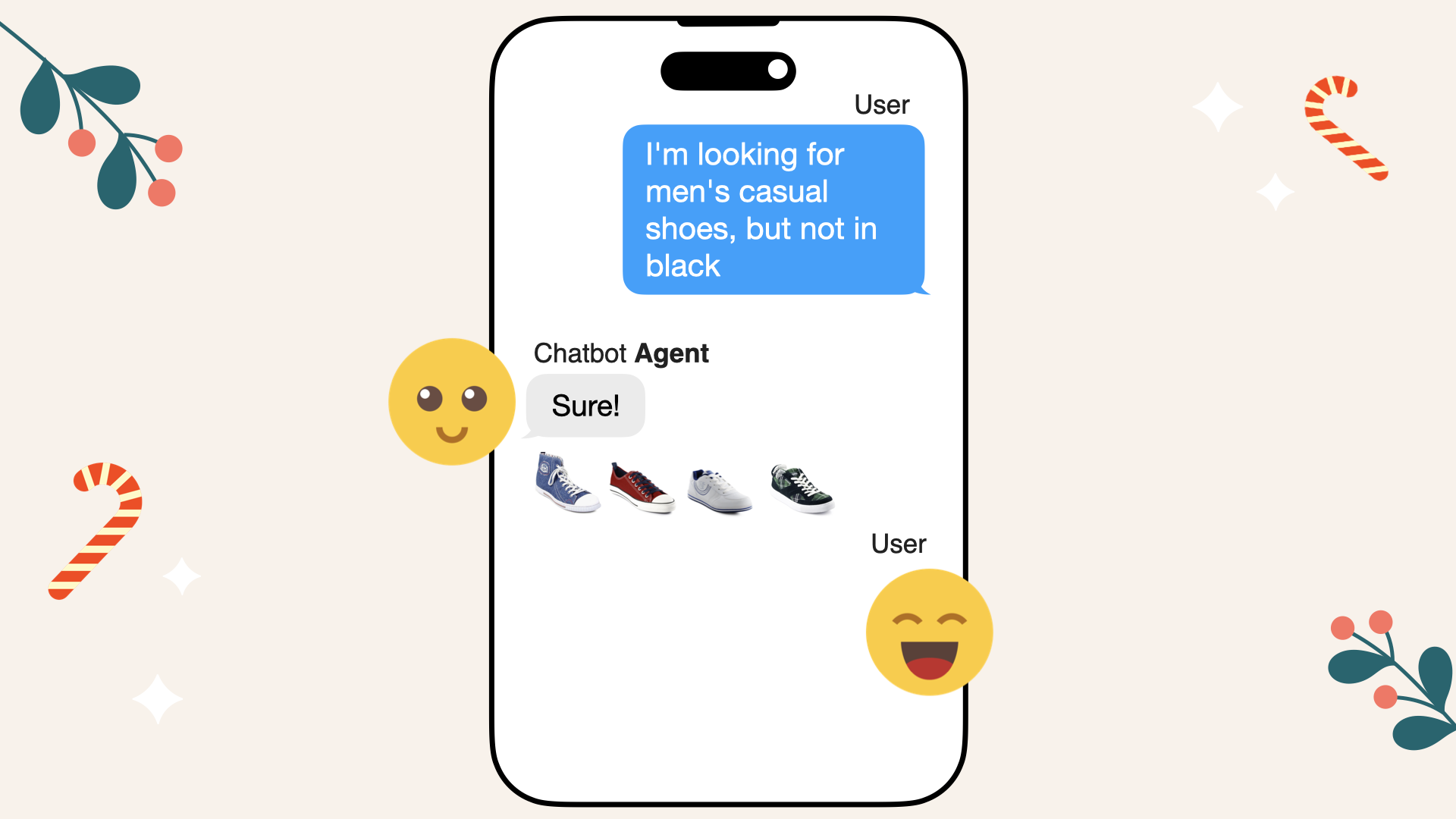

Customer: "I'm looking for men's casual shoes, but not in black"

A customer initiates a chat with SoleMates and asks about men's casual shoes, but not in black

Naive Chatbot Response

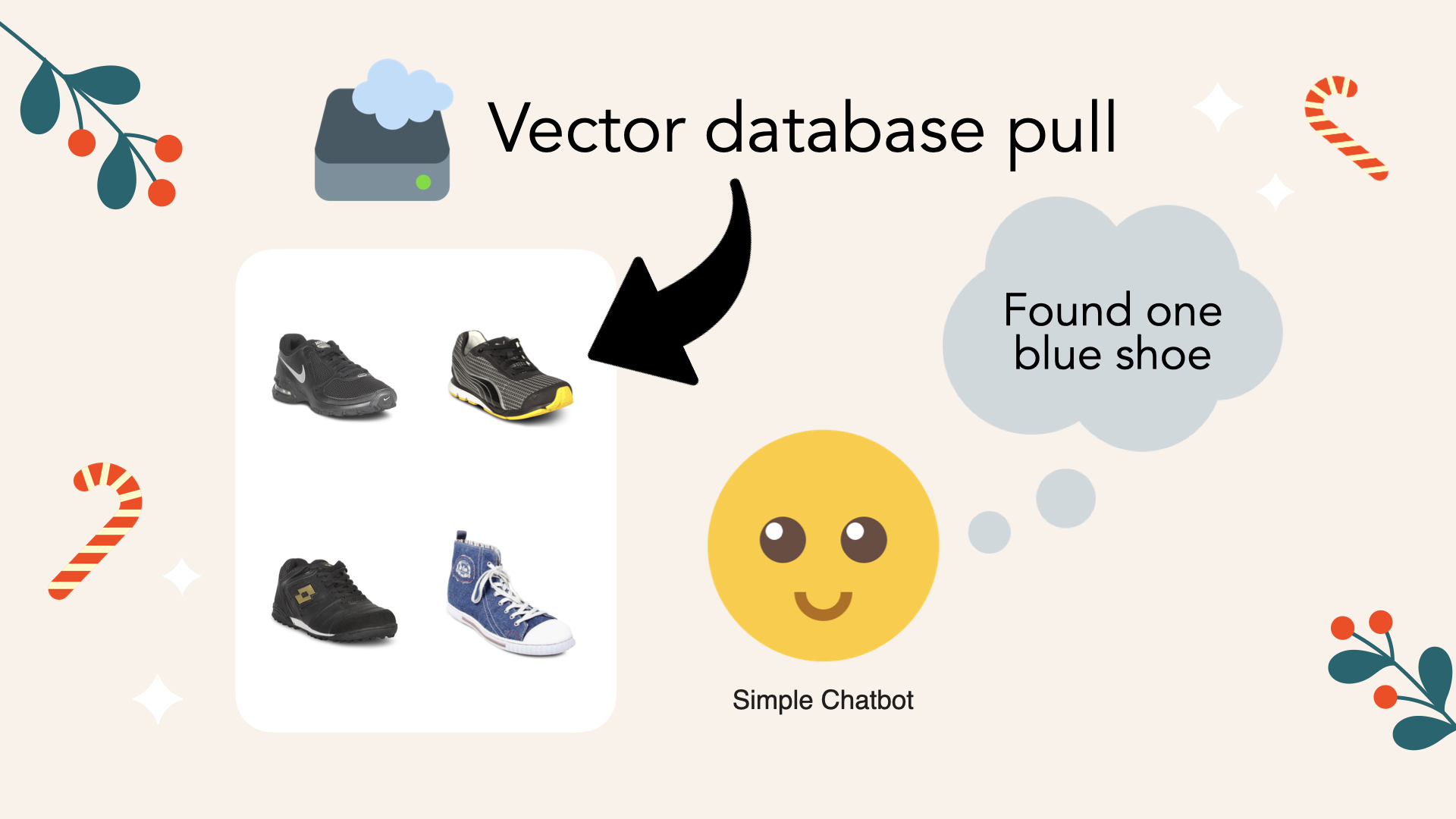

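The naive chatbot embeds the whole query as a single vector and doesn't understand the negation. Since "black" is part of the query text, the search still gravitates toward black shoes.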

It returns a list dominated by black shoes. Maybe it gets lucky and finds one blue option, but mostly it fails to exclude black shoes:

The naive chatbot tries to pull shoes that are not black from the vector database

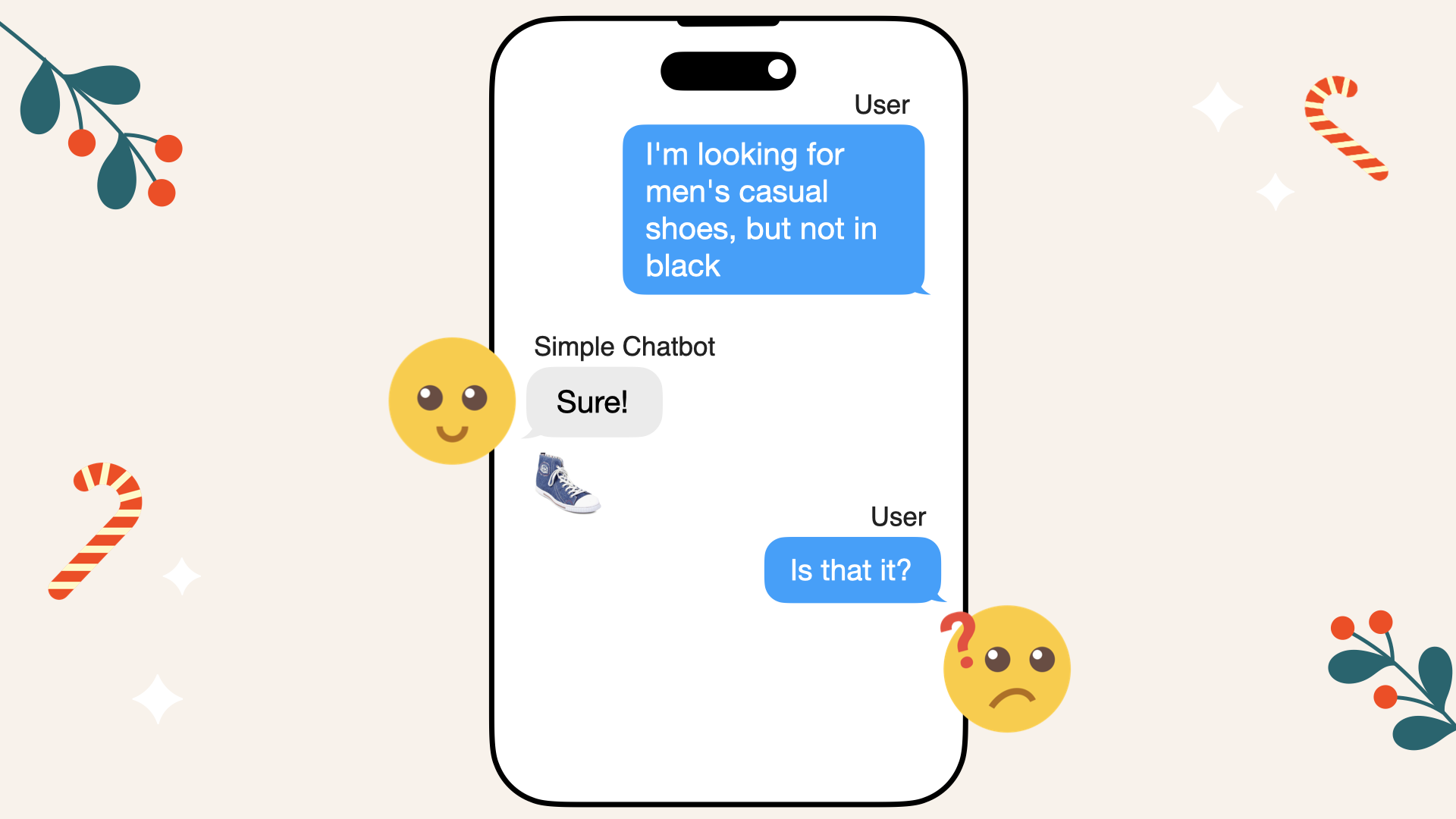

The naive chatbot happened to pull one blue shoe along with mostly black shoes from the vector database, so it replies:

Naive chatbot: "You might be interested in the Lee Cooper men's LC casual denim blue shoe, which is a casual shoe available in blue."

The naive chatbot found only one blue option

This shows that a naive chatbot does not handle negation properly.

Why Did the Naive Chatbot Fail?

- Ignores the "not in black" part

- Treats the request as a general search for men's casual shoes

- Fails to filter out unwanted attributes

Limitations Highlighted

- No true negation or exclusion logic

- Stuck with semantic similarity alone, missing user preferences
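A toy sketch can make this limitation concrete. It assumes a bag-of-words count vector as a stand-in for a real embedding model; the `embed` and `cosine` helpers and the three product descriptions are made up for illustration and are not the code behind the chatbot. Real embeddings are much richer, but the effect is similar in spirit: mentioning "black" pulls the query toward black shoes, and nothing in the ranking step excludes them.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().replace(",", "").split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


# Hypothetical product descriptions, not taken from the real catalog.
products = [
    "men's casual shoes in black",
    "men's casual shoes in blue",
    "men's formal shoes in brown",
]

query = "men's casual shoes but not in black"
query_vec = embed(query)

# Rank purely by similarity, as the naive chatbot does.
ranked = sorted(products, key=lambda p: cosine(query_vec, embed(p)), reverse=True)
print(ranked[0])  # men's casual shoes in black
```

The black shoe ranks first because it shares the most words with the query. The word "not" adds nothing that the ranking could use to push black shoes down.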

AI Agent Solution

The AI agent sees "not in black" and converts it into a filter that excludes black shoes. It applies:

- gender = men

- usage = casual

- color != black

Then it returns a range of men's casual shoes in other colors, which is what the customer asked for.
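The three conditions above can be sketched as plain metadata checks. This is a minimal illustration, assuming each product carries `gender`, `usage` and `color` fields and that filters use the key/value/operator shape shown in this post's code snippets. The `catalog` entries and the `matches` helper are hypothetical.

```python
import operator

# Map the operator strings used in the filters to Python comparisons.
OPERATORS = {"==": operator.eq, "!=": operator.ne}

filters = [
    {"key": "gender", "value": "men", "operator": "=="},
    {"key": "usage", "value": "casual", "operator": "=="},
    {"key": "color", "value": "black", "operator": "!="},
]

# Hypothetical catalog entries for illustration only.
catalog = [
    {"name": "Shoe A", "gender": "men", "usage": "casual", "color": "black"},
    {"name": "Shoe B", "gender": "men", "usage": "casual", "color": "blue"},
    {"name": "Shoe C", "gender": "women", "usage": "casual", "color": "red"},
    {"name": "Shoe D", "gender": "men", "usage": "formal", "color": "brown"},
    {"name": "Shoe E", "gender": "men", "usage": "casual", "color": "white"},
]


def matches(product, filters):
    """Return True only if the product satisfies every filter."""
    return all(
        OPERATORS[f["operator"]](product.get(f["key"]), f["value"])
        for f in filters
    )


allowed = [p["name"] for p in catalog if matches(p, filters)]
print(allowed)  # ['Shoe B', 'Shoe E']
```

The black shoe is removed by the `!=` condition, regardless of how similar its description is to the query.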

Agent's Interaction:

- Customer: "I'm looking for men's casual shoes, but not in black"

- Agent: "Understood, let me exclude black shoes from your results"

- Agent returns brown, green, blue, red, and white casual shoes:

AI agent listing men's casual shoes in various colors excluding black, like red, green, or blue

How Did the AI Agent Succeed?

- Interprets negation and applies a "not equal to" filter

- Leverages metadata filtering to exclude black color shoes

- Delivers a curated list that respects user preferences

Key Takeaways

Naive Chatbot Limitation

- Does not handle negation properly: it returns unwanted results, or at best gets lucky and finds a single matching option

AI Agent Advantages

- Applies exclusion filters based on user input

- Understands what the user does NOT want and removes it

- Shows only relevant, acceptable products

Conclusion

By handling negations, the AI agent provides a better user experience.

Instead of showing black shoes or a single lucky match, it filters black shoes out and offers several suitable alternatives. This attention to user instructions sets AI agents apart.

About This Series

Each day in December, I highlight a scenario where naive chatbots fail, and AI agents deliver.

Follow along to learn how to build better chatbots.

Coming Up: Understanding Context Shifts

In tomorrow's issue, we'll explore how AI agents improve on naive chatbots by remembering color requirements.

I'm preparing a course to teach you how to build and deploy your own AI agent chatbot. Sign up here!

Behind the Scenes: Code Snippets

Here's a simplified illustration of how the AI agent processes the query.

We're giving the agent access to two tools it can use freely:

- Vector database metadata filtering

- Vector database query

1. Vector database metadata filtering tool

def create_metadata_filter(filter_string):
    # Turn a compact filter string into a list of metadata filters.
    # For "men's casual shoes -black", returns:
    # [{"key": "gender", "value": "men", "operator": "=="},
    #  {"key": "usage", "value": "casual", "operator": "=="},
    #  {"key": "color", "value": "black", "operator": "!="}]
    # parse_filters is a helper that is not shown in this simplified snippet.
    filters = parse_filters(filter_string)
    return filters
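The `parse_filters` helper is not shown in this post. The following is one possible sketch, not the actual implementation. It assumes a small fixed vocabulary of attribute values (the `VOCABULARY` table is hypothetical) and treats a leading `-` as "exclude this value", which matches the `-black` notation in the comment above.

```python
# Hypothetical vocabulary; a real store would derive it from catalog metadata.
VOCABULARY = {
    "gender": {"men", "women"},
    "usage": {"casual", "formal", "sports"},
    "color": {"black", "blue", "brown", "green", "red", "white"},
}


def parse_filters(filter_string):
    """Turn a string like "men's casual shoes -black" into metadata filters."""
    filters = []
    for token in filter_string.lower().split():
        negated = token.startswith("-")  # "-black" means "not black"
        word = token.lstrip("-")
        if word.endswith("'s"):  # "men's" -> "men"
            word = word[:-2]
        for key, values in VOCABULARY.items():
            if word in values:
                filters.append(
                    {"key": key, "value": word, "operator": "!=" if negated else "=="}
                )
    return filters


filters = parse_filters("men's casual shoes -black")
for f in filters:
    print(f)
```

Words that are not in the vocabulary, such as "shoes", are simply skipped, so they only influence the semantic part of the search.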

2. Vector database query

def search_footwear_database(query_str, filters_json):
    # Embed the query text, then search the vector database
    # with the metadata filters applied.
    embedded_query = embed_query_aws_titan(query_str)
    results = vector_db.search(embedded_query, filters=filters_json)
    return results
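How `vector_db.search` works internally is not shown here. As a rough mental model, a filtered vector search can be sketched as "drop everything that fails the filters, then rank what is left by similarity". The in-memory `search` function, the `matches` helper, the two-dimensional vectors and the product names below are all made up for illustration and do not represent any particular vector database.

```python
import math


def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def matches(metadata, filters):
    """Check "==" and "!=" filters against a product's metadata."""
    for f in filters:
        equal = metadata.get(f["key"]) == f["value"]
        if equal != (f["operator"] == "=="):
            return False
    return True


def search(index, query_vector, filters, top_k=3):
    """Filter first, then rank the remaining items by similarity."""
    candidates = [item for item in index if matches(item["metadata"], filters)]
    candidates.sort(key=lambda item: cosine(query_vector, item["vector"]), reverse=True)
    return candidates[:top_k]


# Toy index with made-up 2D vectors in place of real embeddings.
index = [
    {"name": "black loafer", "vector": [1.0, 0.0],
     "metadata": {"gender": "men", "usage": "casual", "color": "black"}},
    {"name": "blue sneaker", "vector": [0.9, 0.1],
     "metadata": {"gender": "men", "usage": "casual", "color": "blue"}},
    {"name": "brown moccasin", "vector": [0.7, 0.3],
     "metadata": {"gender": "men", "usage": "casual", "color": "brown"}},
    {"name": "red heel", "vector": [0.0, 1.0],
     "metadata": {"gender": "women", "usage": "formal", "color": "red"}},
]

filters = [
    {"key": "gender", "value": "men", "operator": "=="},
    {"key": "usage", "value": "casual", "operator": "=="},
    {"key": "color", "value": "black", "operator": "!="},
]

results = search(index, [1.0, 0.0], filters)
print([r["name"] for r in results])  # ['blue sneaker', 'brown moccasin']
```

The black loafer is the closest vector to the query, yet it never reaches the ranking step. Filtering before ranking also means the results are not limited to whatever non-black items happened to land in an unfiltered top list.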

I use AWS Titan, a multimodal embedding model that converts both product text and images into vectors. It is wrapped in the function embed_query_aws_titan, which the AI agent tool search_footwear_database calls.

This means the AI agent can process a query like "red heels" and match it to not only product descriptions but also actual images of red heels in the database.

By combining text and image data, the model helps the AI agent provide more relevant and visually aligned recommendations based on the customer's input.

Agent workflow

Construct agent worker with access to the two tools and initiate the agent:

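from llama_index.core.agent import AgentRunner, FunctionCallingAgentWorker
from llama_index.core.tools import FunctionTool

# Assumption: the two tools wrap the functions defined above.
create_metadata_filters_tool = FunctionTool.from_defaults(fn=create_metadata_filter)
query_vector_database_tool = FunctionTool.from_defaults(fn=search_footwear_database)

# The worker decides which tool to call; the runner manages the conversation.
agent_worker = FunctionCallingAgentWorker.from_tools(
    [
        create_metadata_filters_tool,
        query_vector_database_tool,
    ]
)
agent = AgentRunner(agent_worker)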

Initiate conversation with agent:

agent.chat("I'm looking for men's casual shoes, but not in black")

Agent workflow:

Agent:

- Calls create_metadata_filter("men's casual shoes -black")

- Gets filter:

[
{"key": "gender", "value": "men", "operator": "=="},
{"key": "usage", "value": "casual", "operator": "=="},
{"key": "color", "value": "black", "operator": "!="}
]

- Applies the returned filters and calls the database query

- Lists men's casual shoes in colors other than black:

AI agent listing men's casual shoes in various colors excluding black, like red, green, or blue

Additional Resources

For a deeper dive into this example, check out my GitHub repository where I break down the code and methodology used by the AI agent in today's scenario.

Want to build an AI agent that respects user preferences and exclusions? Sign up here and create smarter chatbots.

Why use AI agents instead of simple chatbots?

If you need an AI chatbot that handles negations and exclusions, consider using an AI agent with metadata filtering.

By understanding what users do not want, AI agents can refine search results, which can improve user satisfaction and conversion rates in e-commerce.